---

license: apache-2.0

base_model:

- Qwen/Qwen3-VL-2B-Instruct

language:

- en

new_version: goodman2001/colqwen3-v0.1

pipeline_tag: visual-document-retrieval

---

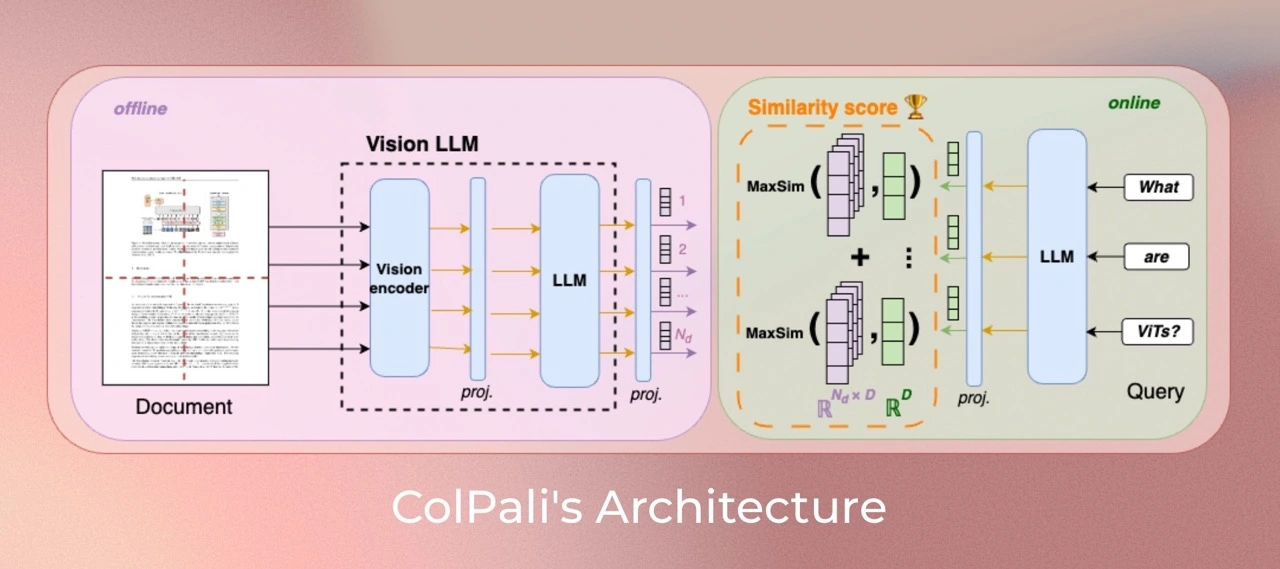

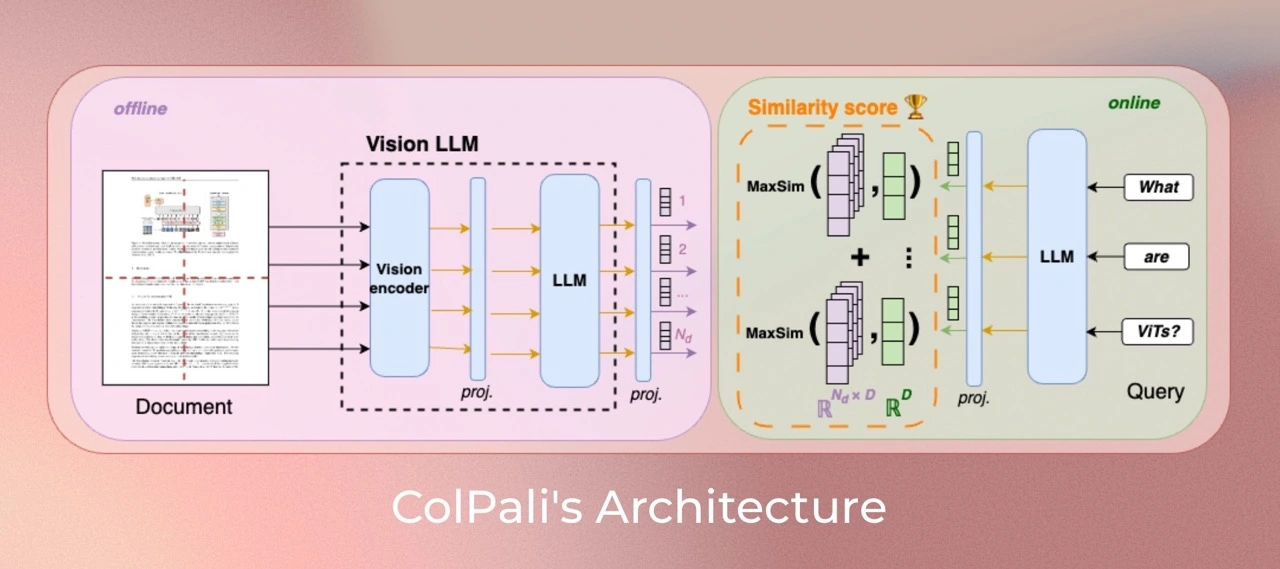

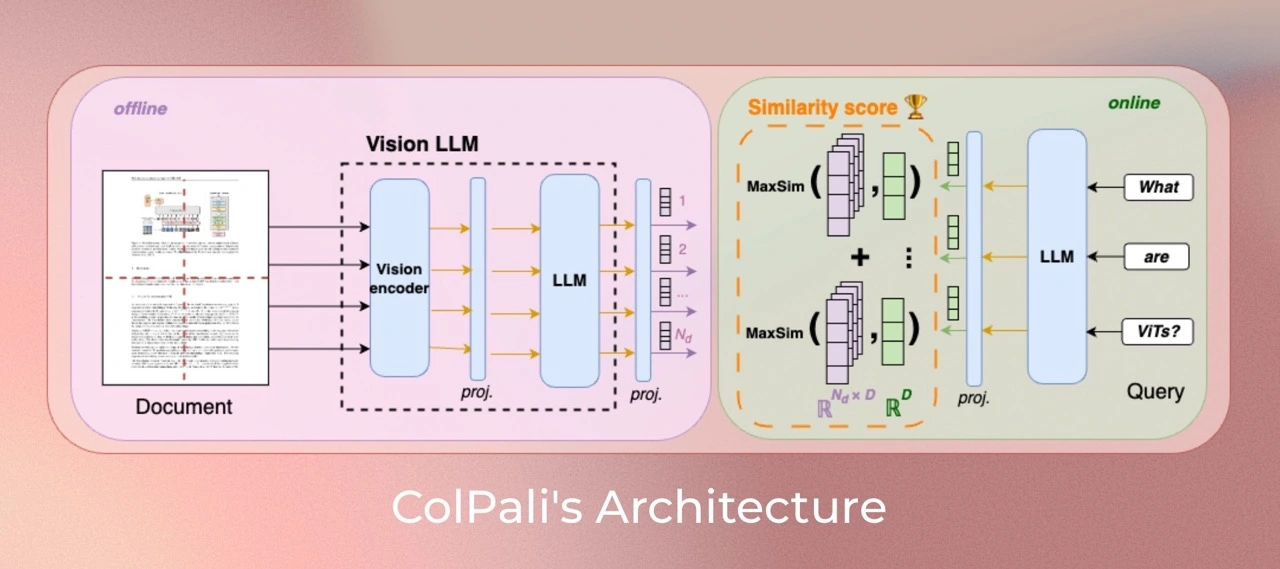

# ColQwen3: Visual Retriever based on Qwen3-VL-2B-Instruct with ColBERT strategy

### Source code: [Mungeryang/colqwen3](https://github.com/Mungeryang/colqwen3)

ColQwen is a retrieval model built on Vision Language Models (VLMs). It uses a novel architecture and training strategy to index documents efficiently from their visual features.

It extends [Qwen3-VL-2B-Instruct](https://huggingface.co/Qwen/Qwen3-VL-2B-Instruct) to generate [ColBERT](https://arxiv.org/abs/2004.12832)-style multi-vector representations of text and images.

The approach was introduced in the paper [ColPali: Efficient Document Retrieval with Vision Language Models](https://arxiv.org/abs/2407.01449) and first released in [this repository](https://github.com/ManuelFay/colpali).
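To make "ColBERT-style multi-vector representations" concrete, the following is a minimal sketch of late-interaction (MaxSim) scoring as described in the ColBERT paper: each query token vector is matched to its most similar document vector, and the maxima are summed. It uses plain Python lists rather than this model's actual outputs; the function names (`maxsim_score`, `rank_documents`) and the toy 2-dimensional embeddings are hypothetical and chosen only for illustration.

```python
from typing import List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    """Dot product of two vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    return sum(x * y for x, y in zip(a, b))


def maxsim_score(query_vecs: Sequence[Vector], doc_vecs: Sequence[Vector]) -> float:
    """Late-interaction score: for every query vector, take the best-matching
    document vector (max dot product), then sum those maxima."""
    if not doc_vecs:
        raise ValueError("document must contain at least one vector")
    return sum(max(dot(q, d) for d in doc_vecs) for q in query_vecs)


def rank_documents(query_vecs: Sequence[Vector], docs: Sequence[Sequence[Vector]]) -> List[int]:
    """Return document indices sorted from highest to lowest MaxSim score."""
    scores = [maxsim_score(query_vecs, doc) for doc in docs]
    return sorted(range(len(docs)), key=lambda i: scores[i], reverse=True)


# Toy embeddings (hypothetical values): one list of vectors per query or page.
query = [[1.0, 0.0], [0.0, 1.0]]
page_a = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]
page_b = [[0.3, 0.3], [0.4, 0.1]]

ranking = rank_documents(query, [page_a, page_b])
print(ranking)  # page_a scores higher than page_b on this toy data
```

In a real pipeline the vectors would come from the model (one per text token or image patch), and the scoring would normally be batched on tensors rather than computed with Python loops.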

This version is the untrained base version to guarantee deterministic projection layer initialization.
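Why a fixed starting point matters can be illustrated with seeded random initialization. The sketch below is an assumption-laden toy, not the code used to produce this checkpoint: `init_projection`, the dimensions, and the seeds are all hypothetical. It only shows that a newly added projection layer gets identical weights when initialized from the same seed and different weights otherwise, which is the kind of variation a shared base checkpoint removes.

```python
import random
from typing import List


def init_projection(in_dim: int, out_dim: int, seed: int) -> List[List[float]]:
    """Build an out_dim x in_dim weight matrix from a seeded generator.

    A private random.Random instance is used so that the result depends only
    on the seed, not on any global random state.
    """
    rng = random.Random(seed)
    std = in_dim ** -0.5  # simple scale choice for illustration only
    return [[rng.gauss(0.0, std) for _ in range(in_dim)] for _ in range(out_dim)]


# Same seed: identical projection weights on every run.
weights_a = init_projection(in_dim=8, out_dim=4, seed=42)
weights_b = init_projection(in_dim=8, out_dim=4, seed=42)

# Different seed: a different starting point for training.
weights_c = init_projection(in_dim=8, out_dim=4, seed=7)

print(weights_a == weights_b)
print(weights_a == weights_c)
```

Publishing the initialized weights as a base checkpoint achieves the same effect without relying on every user reproducing the same seed and library versions.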

## Usage

> [!WARNING]
> This version should not be used: it is solely the base version, useful for deterministic LoRA initialization.

## Contact

- Mungeryang: mungerygm@gmail.com / yangguimiao@iie.ac.cn

## Acknowledgments

❤️❤️❤️

> [!NOTE]
> Thanks to the **ColPali team** and the **Qwen team** for their excellent open-source work!
>
> I accomplished this work by **standing on the shoulders of giants~**

## Citation

If you use any datasets or models from this organization in your research, please cite the original ColPali paper as follows:

```bibtex
@misc{faysse2024colpaliefficientdocumentretrieval,
  title={ColPali: Efficient Document Retrieval with Vision Language Models},
  author={Manuel Faysse and Hugues Sibille and Tony Wu and Bilel Omrani and Gautier Viaud and Céline Hudelot and Pierre Colombo},
  year={2024},
  eprint={2407.01449},
  archivePrefix={arXiv},
  primaryClass={cs.IR},
  url={https://arxiv.org/abs/2407.01449},
}
```