'

return tensor;

^~~~~~

Target //mediapipe/examples/desktop/hand_tracking:hand_tracking_cpu failed to build

[Environment]

Machine: Ubuntu 18.04 (running on Amazon Lightsail), 4 GB RAM, 2 vCPUs, 80 GB SSD

gcc Version: 7.5.0

Bazel Version: 3.7.2

OpenCV Version: 3.2.0

python Version: 2.7.17

python3 Version: 3.6.9

Note: Hello World builds and runs correctly.

",0,mediapipe build error building c command line example mediapipe hands error building mediapipe hands example c bazel build c opt define mediapipe disable gpu mediapipe examples desktop hand tracking hand tracking cpu actions running error home ubuntu mediapipe mediapipe calculators tensor build c compilation of rule mediapipe calculators tensor image to tensor converter o pencv failed exit gcc failed error executing command usr bin gcc u fortify source fstack protector wall wunused but set parameter wno free nonh eap object fno omit frame pointer d fortify source dndebug ffunction sections remaining argument s skipped use sandbox debug to see verbose messages from the sandbox gcc failed error executing command usr bin gcc u fortify source fstack protector wall wun used but set parameter wno free nonheap object fno omit frame pointer d fortify source dndebug ffunction sections remaining argumen t s skipped use sandbox debug to see verbose messages from the sandbox mediapipe calculators tensor image to tensor converter opencv cc in member function virtual absl lts statusor mediapipe an onymous opencvprocessor convert const mediapipe image const mediapipe rotatedrect const mediapipe size float float mediapipe calculators tensor image to tensor converter opencv cc error could not convert tensor from mediapipe tensor to absl lts statusor return tensor target mediapipe examples desktop hand tracking hand tracking cpu failed to build machine ubuntu running on amazon lightsail ram vcpus gb ssd gcc version bazel version opencv version python version version note hello world builds and runs correctly ,0

270999,23576654294.0,IssuesEvent,2022-08-23 01:58:16,lowRISC/opentitan,https://api.github.com/repos/lowRISC/opentitan,opened,[rom-e2e] rom_e2e_bootstrap_enabled_not_requested,Priority:P2 Type:Task SW:ROM Milestone:V2 Component:RomE2eTest,"### Test point name

[rom_e2e_bootstrap_enabled_not_requested](https://cs.opensource.google/opentitan/opentitan/+/master:sw/device/silicon_creator/rom/data/rom_testplan.hjson?q=rom_e2e_bootstrap_enabled_not_requested)

### Host side component

Unknown

### OpenTitanTool infrastructure implemented

Unknown

### Contact person

@alphan

### Checklist

Please fill out this checklist as items are completed. Link to PRs and issues as appropriate.

- [ ] Check if existing test covers most or all of this testpoint (if so, either extend said test to cover all points, or skip the next 3 checkboxes)

- [ ] Device-side (C) component developed

- [ ] Bazel build rules developed

- [ ] Host-side component developed

- [ ] HJSON test plan updated with test name (so it shows up in the dashboard)

- [ ] Test added to dvsim nightly regression (and passing at time of checking)

### Verify that ROM does not enter bootstrap when enabled in OTP but not requested.

`OWNER_SW_CFG_ROM_BOOTSTRAP_EN` OTP item must be `kHardenedBoolTrue` (`0x739`).

- Do not apply bootstrap pin strapping.

- Reset the chip.

- Verify that the chip outputs the expected `BFV`: `0142500d` over UART.

- ROM will continuously reset the chip and output the same `BFV`.

- Verify that the chip does not respond to `READ_STATUS` (`0x05`).

- The data on the CIPO line must be `0xff`.
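The two pass criteria above (the expected `BFV` on UART and an idle CIPO line) could be expressed as host-side checks along the following lines. This is only a minimal Python sketch, not OpenTitanTool code: the function names are hypothetical, and it assumes the test harness has already captured the UART output as text and the bytes clocked in on CIPO after sending `READ_STATUS`.

```python
# Hypothetical host-side checks; how the UART log and CIPO bytes are
# captured is outside the scope of this sketch.

EXPECTED_BFV = "0142500d"  # value quoted in the test description


def uart_reports_bfv(uart_log: str, bfv: str = EXPECTED_BFV) -> bool:
    """Return True if the captured UART text contains the expected BFV."""
    return bfv.lower() in uart_log.lower()


def cipo_is_idle(cipo_bytes: bytes) -> bool:
    """Return True if every byte read on CIPO is 0xff, i.e. no response."""
    return len(cipo_bytes) > 0 and all(b == 0xFF for b in cipo_bytes)
```

An empty capture is treated as a failure here, on the assumption that a test which read nothing should not count as passing.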

",1.0,"[rom-e2e] rom_e2e_bootstrap_enabled_not_requested - ### Test point name

[rom_e2e_bootstrap_enabled_not_requested](https://cs.opensource.google/opentitan/opentitan/+/master:sw/device/silicon_creator/rom/data/rom_testplan.hjson?q=rom_e2e_bootstrap_enabled_not_requested)

### Host side component

Unknown

### OpenTitanTool infrastructure implemented

Unknown

### Contact person

@alphan

### Checklist

Please fill out this checklist as items are completed. Link to PRs and issues as appropriate.

- [ ] Check if existing test covers most or all of this testpoint (if so, either extend said test to cover all points, or skip the next 3 checkboxes)

- [ ] Device-side (C) component developed

- [ ] Bazel build rules developed

- [ ] Host-side component developed

- [ ] HJSON test plan updated with test name (so it shows up in the dashboard)

- [ ] Test added to dvsim nightly regression (and passing at time of checking)

### Verify that ROM does not enter bootstrap when enabled in OTP but not requested.

`OWNER_SW_CFG_ROM_BOOTSTRAP_EN` OTP item must be `kHardenedBoolTrue` (`0x739`).

- Do not apply bootstrap pin strapping.

- Reset the chip.

- Verify that the chip outputs the expected `BFV`: `0142500d` over UART.

- ROM will continuously reset the chip and output the same `BFV`.

- Verify that the chip does not respond to `READ_STATUS` (`0x05`).

- The data on the CIPO line must be `0xff`.

",0, rom bootstrap enabled not requested test point name host side component unknown opentitantool infrastructure implemented unknown contact person alphan checklist please fill out this checklist as items are completed link to prs and issues as appropriate check if existing test covers most or all of this testpoint if so either extend said test to cover all points or skip the next checkboxes device side c component developed bazel build rules developed host side component developed hjson test plan updated with test name so it shows up in the dashboard test added to dvsim nightly regression and passing at time of checking verify that rom does not enter bootstrap when enabled in otp but not requested owner sw cfg rom bootstrap en otp item must be khardenedbooltrue do not apply bootstrap pin strapping reset the chip verify that the chip outputs the expected bfv over uart rom will continously reset the chip and output the same bfv verify that the chip does not respond to read status the data on the cipo line must be ,0

204778,15531592090.0,IssuesEvent,2021-03-14 00:30:02,backend-br/vagas,https://api.github.com/repos/backend-br/vagas,closed,[Remoto/Campinas] Node.js developer @HDN.Digital,Alocado CI Express MongoDB NodeJS Pleno Remoto Stale Testes Unitários,"

## Our company

We are a digital transformation consultancy that has been in the market for 12 years, and we are expanding rapidly.

We have a team specialized in delivering technology solutions that add value, save time, and bring innovation to the way you work. Our mission is to collaborate and to spread knowledge and company culture through new technologies and agile processes.

We have more than 10 years of experience developing software and digital solutions.

It is part of HDN's DNA to understand our clients' pain points, put ourselves in their place, and offer the solutions that best fit their specific needs.

Every change and digital transformation requires a first step, and we are ready to walk side by side with you.

We value employee development at both the technical and the personal level. We have a light, fun environment; we want you to have a voice and be heard, take part in the company's processes, and find a friendly place to grow professionally and build your career with us!

## Job description

You will work with our team of back-end engineers to architect and implement solutions for a range of clients (national and international) in agile, disruptive projects with direct business impact. We are looking for a communicative professional who loves to code and takes responsibility for their deliverables.

## Location

Remote or office, Campinas - Nova Campinas

## Requirements

**Required:**

- Experience with Node and Express

**Desirable:**

- Knowledge of MongoDB and Postgres

- Experience with unit testing with Jest

- Experience with integration testing

- Knowledge of CI/CD

- Knowledge of React and/or Vue

- Experience with microservices

**Nice to have:**

- Advanced English

## Benefits

- 30 days of paid vacation

**Extras:**

- Game room

- Access to internal courses provided by the company

## Hiring

Cooperative, to be agreed

## How to apply

Please send an email to marcelo.pinheiro@hdnit.com.br with your CV attached, using the subject line: Vaga NodeJS

## Average feedback time

We usually send feedback within 2 days after each stage.

Contact email in case you receive no response: contato@hdnit.com.br

## Labels

#### Allocation

- On-site (Alocado)

- Remote (Remoto)

#### Regime

- Cooperative (Cooperativa)

#### Level

- Junior (Júnior)

- Mid-level (Pleno)

- Senior (Sênior)

",1.0,"[Remoto/Campinas] Node.js developer @HDN.Digital -

## Our company

We are a digital transformation consultancy that has been in the market for 12 years, and we are expanding rapidly.

We have a team specialized in delivering technology solutions that add value, save time, and bring innovation to the way you work. Our mission is to collaborate and to spread knowledge and company culture through new technologies and agile processes.

We have more than 10 years of experience developing software and digital solutions.

It is part of HDN's DNA to understand our clients' pain points, put ourselves in their place, and offer the solutions that best fit their specific needs.

Every change and digital transformation requires a first step, and we are ready to walk side by side with you.

We value employee development at both the technical and the personal level. We have a light, fun environment; we want you to have a voice and be heard, take part in the company's processes, and find a friendly place to grow professionally and build your career with us!

## Job description

You will work with our team of back-end engineers to architect and implement solutions for a range of clients (national and international) in agile, disruptive projects with direct business impact. We are looking for a communicative professional who loves to code and takes responsibility for their deliverables.

## Location

Remote or office, Campinas - Nova Campinas

## Requirements

**Required:**

- Experience with Node and Express

**Desirable:**

- Knowledge of MongoDB and Postgres

- Experience with unit testing with Jest

- Experience with integration testing

- Knowledge of CI/CD

- Knowledge of React and/or Vue

- Experience with microservices

**Nice to have:**

- Advanced English

## Benefits

- 30 days of paid vacation

**Extras:**

- Game room

- Access to internal courses provided by the company

## Hiring

Cooperative, to be agreed

## How to apply

Please send an email to marcelo.pinheiro@hdnit.com.br with your CV attached, using the subject line: Vaga NodeJS

## Average feedback time

We usually send feedback within 2 days after each stage.

Contact email in case you receive no response: contato@hdnit.com.br

## Labels

#### Allocation

- On-site (Alocado)

- Remote (Remoto)

#### Regime

- Cooperative (Cooperativa)

#### Level

- Junior (Júnior)

- Mid-level (Pleno)

- Senior (Sênior)

",0, node js developer hdn digital our company we are a digital transformation consultancy that has been in the market for years and we are expanding rapidly we have a team specialized in delivering technology solutions that add value save time and bring innovation to the way you work our mission is to collaborate and to spread knowledge and company culture through new technologies and agile processes we have more than years of experience developing software and digital solutions it is part of hdn s dna to understand our clients pain points put ourselves in their place and offer the solutions that best fit their specific needs every change and digital transformation requires a first step and we are ready to walk side by side with you we value employee development at both the technical and the personal level we have a light fun environment we want you to have a voice and be heard take part in the company s processes and find a friendly place to grow professionally and build your career with us job description you will work with our team of back end engineers to architect and implement solutions for a range of clients national and international in agile disruptive projects with direct business impact we are looking for a communicative professional who loves to code and takes responsibility for their deliverables location remote or office campinas nova campinas requirements required experience with node and express desirable knowledge of mongodb and postgres experience with unit testing with jest experience with integration testing knowledge of ci cd knowledge of react and or vue experience with microservices nice to have advanced english benefits days of paid vacation extras game room access to internal courses provided by the company hiring cooperative to be agreed how to apply please send an email to marcelo pinheiro hdnit com br with your cv attached using the subject line vaga nodejs average feedback time we usually send feedback within days after each stage contact email in case you receive no response contato hdnit com br labels allocation on site alocado remote remoto regime cooperative cooperativa level junior júnior mid level pleno senior sênior ,0

1386,19985127338.0,IssuesEvent,2022-01-30 14:45:53,lkrg-org/lkrg,https://api.github.com/repos/lkrg-org/lkrg,closed,Build fails on OpenSUSE leap's Linux 5.3.18-59.37,portability,"```

/__w/lkrg/lkrg/src/modules/database/JUMP_LABEL/p_arch_jump_label_transform_apply/p_arch_jump_label_transform_apply.c: In function 'p_arch_jump_label_transform_apply_entry':

/__w/lkrg/lkrg/src/modules/database/JUMP_LABEL/p_arch_jump_label_transform_apply/p_arch_jump_label_transform_apply.c:94:19: error: 'p_text_poke_loc {aka struct text_poke_loc}' has no member named 'detour'

&& p_tmp->detour) {

^~

make[3]: *** [/usr/src/linux-5.3.18-59.37/scripts/Makefile.build:288: /__w/lkrg/lkrg/src/modules/database/JUMP_LABEL

```",True,"Build fails on OpenSUSE leap's Linux 5.3.18-59.37 - ```

/__w/lkrg/lkrg/src/modules/database/JUMP_LABEL/p_arch_jump_label_transform_apply/p_arch_jump_label_transform_apply.c: In function 'p_arch_jump_label_transform_apply_entry':

/__w/lkrg/lkrg/src/modules/database/JUMP_LABEL/p_arch_jump_label_transform_apply/p_arch_jump_label_transform_apply.c:94:19: error: 'p_text_poke_loc {aka struct text_poke_loc}' has no member named 'detour'

&& p_tmp->detour) {

^~

make[3]: *** [/usr/src/linux-5.3.18-59.37/scripts/Makefile.build:288: /__w/lkrg/lkrg/src/modules/database/JUMP_LABEL

```",1,build fails on opensuse leap s linux w lkrg lkrg src modules database jump label p arch jump label transform apply p arch jump label transform apply c in function p arch jump label transform apply entry w lkrg lkrg src modules database jump label p arch jump label transform apply p arch jump label transform apply c error p text poke loc aka struct text poke loc has no member named detour p tmp detour make usr src linux scripts makefile build w lkrg lkrg src modules database jump label ,1

1434,21655175962.0,IssuesEvent,2022-05-06 13:28:25,damccorm/test-migration-target,https://api.github.com/repos/damccorm/test-migration-target,opened,Use portable WindowIntoPayload in DataflowRunner,P3 runner-dataflow portability task,"The Java-specific blobs transmitted to Dataflow need more context, in the form of portability framework protos.

Imported from Jira [BEAM-3514](https://issues.apache.org/jira/browse/BEAM-3514). Original Jira may contain additional context.

Reported by: kenn.

This issue has child subcomponents which were not migrated over. See the original Jira for more information.",True,"Use portable WindowIntoPayload in DataflowRunner - The Java-specific blobs transmitted to Dataflow need more context, in the form of portability framework protos.

Imported from Jira [BEAM-3514](https://issues.apache.org/jira/browse/BEAM-3514). Original Jira may contain additional context.

Reported by: kenn.

This issue has child subcomponents which were not migrated over. See the original Jira for more information.",1,use portable windowintopayload in dataflowrunner the java specific blobs transmitted to dataflow need more context in the form of portability framework protos imported from jira original jira may contain additional context reported by kenn this issue has child subcomponents which were not migrated over see the original jira for more information ,1

13,2577767565.0,IssuesEvent,2015-02-12 19:00:53,magnumripper/JohnTheRipper,https://api.github.com/repos/magnumripper/JohnTheRipper,closed,Can't build on Well,portability,"Some change to formats.c between b647486..5d1a14b has introduced this:

```

gcc version 4.6.3 (Ubuntu/Linaro 4.6.3-1ubuntu5)

gcc -DAC_BUILT -march=native -mavx -c -g -O2 -I/usr/local/include -I/opt/AMDAPP/include -I/usr/local/cuda/include -DARCH_LITTLE_ENDIAN=1 -Wall -Wdeclaration-after-statement -fomit-frame-pointer -Wno-deprecated-declarations -Wno-format-extra-args -D_GNU_SOURCE -DHAVE_CUDA -fopenmp -pthread -DHAVE_OPENCL -pthread -funroll-loops formats.c -o formats.o

/tmp/ccXscU4h.s: Assembler messages:

/tmp/ccXscU4h.s:407: Error: no such instruction: `vfmadd312sd .LC5(%rip),%xmm0,%xmm2'

make[1]: *** [formats.o] Error 1

make[1]: Leaving directory `/space/home/magnum/src/john/src'

make: *** [default] Error 2

```",True,"Can't build on Well - Some change to formats.c between b647486..5d1a14b has introduced this:

```

gcc version 4.6.3 (Ubuntu/Linaro 4.6.3-1ubuntu5)

gcc -DAC_BUILT -march=native -mavx -c -g -O2 -I/usr/local/include -I/opt/AMDAPP/include -I/usr/local/cuda/include -DARCH_LITTLE_ENDIAN=1 -Wall -Wdeclaration-after-statement -fomit-frame-pointer -Wno-deprecated-declarations -Wno-format-extra-args -D_GNU_SOURCE -DHAVE_CUDA -fopenmp -pthread -DHAVE_OPENCL -pthread -funroll-loops formats.c -o formats.o

/tmp/ccXscU4h.s: Assembler messages:

/tmp/ccXscU4h.s:407: Error: no such instruction: `vfmadd312sd .LC5(%rip),%xmm0,%xmm2'

make[1]: *** [formats.o] Error 1

make[1]: Leaving directory `/space/home/magnum/src/john/src'

make: *** [default] Error 2

```",1,can t build on well some change to formats c between has introduced this gcc version ubuntu linaro gcc dac built march native mavx c g i usr local include i opt amdapp include i usr local cuda include darch little endian wall wdeclaration after statement fomit frame pointer wno deprecated declarations wno format extra args d gnu source dhave cuda fopenmp pthread dhave opencl pthread funroll loops formats c o formats o tmp s assembler messages tmp s error no such instruction rip make error make leaving directory space home magnum src john src make error ,1

886,11771579257.0,IssuesEvent,2020-03-16 00:37:30,microsoft/vscode,https://api.github.com/repos/microsoft/vscode,closed,Portable Mode: Support auto update on Windows,feature-request portable-mode,"Issue Type: Bug

Having the portable/zip version of vscode doesn't autoupdate, I have to manually update it by copying the zip.

I'd prefer if auto update worked because I'd imagine you could intelligently remove old files and persist my data - right now I don't know if I should delete everything and paste the new stuff (and lose my settings) or if it's OK to layer.

VS Code version: Code 1.40.0 (86405ea23e3937316009fc27c9361deee66ffbf5, 2019-11-06T17:02:13.381Z)

OS version: Windows_NT x64 10.0.18362

",True,"Portable Mode: Support auto update on Windows - Issue Type: Bug

Having the portable/zip version of vscode doesn't autoupdate, I have to manually update it by copying the zip.

I'd prefer if auto update worked because I'd imagine you could intelligently remove old files and persist my data - right now I don't know if I should delete everything and paste the new stuff (and lose my settings) or if it's OK to layer.

VS Code version: Code 1.40.0 (86405ea23e3937316009fc27c9361deee66ffbf5, 2019-11-06T17:02:13.381Z)

OS version: Windows_NT x64 10.0.18362

",1,portable mode support auto update on windows issue type bug having the portable zip version of vscode doesn t autoupdate i have to manually update it by copying the zip i d prefer if auto update worked because i d imagine you could intelligently remove old files and persist my data right now i don t know if i should delete everything and paste the new stuff and lose my settings or if it s ok to layer vs code version code os version windows nt ,1

207895,16096927998.0,IssuesEvent,2021-04-27 02:09:10,padma-g/info478-project,https://api.github.com/repos/padma-g/info478-project,closed,Identify necessary technical skills and major challenges,documentation,"What new technical skills will we need to learn in order to complete the project?

What major challenges do we anticipate?",1.0,"Identify necessary technical skills and major challenges - What new technical skills will we need to learn in order to complete the project?

What major challenges do we anticipate?",0,identify necessary technical skills and major challenges what new technical skills will we need to learn in order to complete the project what major challenges do we anticipate ,0

1767,26029031687.0,IssuesEvent,2022-12-21 19:08:14,AzureAD/microsoft-authentication-library-for-dotnet,https://api.github.com/repos/AzureAD/microsoft-authentication-library-for-dotnet,closed,[Feature Request] Add support for LogCallback in ApplicationOptions ,Supportability,"

```csharp

var options = new ConfidentialClientApplicationOptions

{

ClientId = TestConstants.ClientId,

LogLevel = LogLevel.Verbose,

EnablePiiLogging = true,

IsDefaultPlatformLoggingEnabled = true,

LogCallback = (level, msg, pii) => { log(msg); } // Not Available!

};

var cca = ConfidentialClientApplicationBuilder.CreateWithApplicationOptions(options)

.Build();

```",True,"[Feature Request] Add support for LogCallback in ApplicationOptions -

```csharp

var options = new ConfidentialClientApplicationOptions

{

ClientId = TestConstants.ClientId,

LogLevel = LogLevel.Verbose,

EnablePiiLogging = true,

IsDefaultPlatformLoggingEnabled = true,

LogCallback = (level, msg, pii) => { log(msg); } // Not Available!

};

var cca = ConfidentialClientApplicationBuilder.CreateWithApplicationOptions(options)

.Build();

```",1, add support for logcallback in applicationoptions csharp var options new confidentialclientapplicationoptions clientid testconstants clientid loglevel loglevel verbose enablepiilogging true isdefaultplatformloggingenabled true logcallback level msg pii log msg not available var cca confidentialclientapplicationbuilder createwithapplicationoptions options build ,1

181973,21664471323.0,IssuesEvent,2022-05-07 01:27:40,scottstientjes/snipe-it,https://api.github.com/repos/scottstientjes/snipe-it,closed,WS-2018-0236 (Medium) detected in mem-1.1.0.tgz - autoclosed,security vulnerability,"## WS-2018-0236 - Medium Severity Vulnerability

Vulnerable Library - mem-1.1.0.tgz

Memoize functions - An optimization used to speed up consecutive function calls by caching the result of calls with identical input

Library home page: https://registry.npmjs.org/mem/-/mem-1.1.0.tgz

Path to dependency file: /tmp/ws-scm/snipe-it/package.json

Path to vulnerable library: /tmp/ws-scm/snipe-it/node_modules/mem/package.json

Dependency Hierarchy:

- laravel-mix-2.1.11.tgz (Root Library)

- yargs-8.0.2.tgz

- os-locale-2.1.0.tgz

- :x: **mem-1.1.0.tgz** (Vulnerable Library)

Found in HEAD commit: 35f2b36393de933b01f7dd715958a7a89a2d783b

Vulnerability Details

In nodejs-mem before version 4.0.0 there is a memory leak due to old results not being removed from the cache despite reaching maxAge. Exploitation of this can lead to exhaustion of memory and subsequent denial of service.
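The failure mode described above (expired results staying in the cache) can be illustrated with a small memoizer that evicts stale entries on each call. This is a Python sketch for illustration only, not the `mem` package's code; `memoize_with_max_age`, its `clock` parameter, and the purge-on-call strategy are all assumptions made for the example.

```python
import time
from typing import Any, Callable, Dict, Tuple


def memoize_with_max_age(
    fn: Callable[..., Any],
    max_age: float,
    clock: Callable[[], float] = time.monotonic,
) -> Callable[..., Any]:
    """Memoize fn, dropping cached results once they are older than max_age seconds."""
    # Maps the argument tuple to (expiry time, cached value).
    cache: Dict[Tuple, Tuple[float, Any]] = {}

    def purge_expired(now: float) -> None:
        # Without this step, expired entries would never be removed and the
        # cache would grow for as long as new arguments keep arriving.
        for key in [k for k, (expires, _) in cache.items() if expires <= now]:
            del cache[key]

    def wrapper(*args: Any) -> Any:
        now = clock()
        purge_expired(now)
        if args in cache:
            return cache[args][1]
        value = fn(*args)
        cache[args] = (now + max_age, value)
        return value

    wrapper.cache = cache  # exposed so callers can inspect the cache size
    return wrapper
```

Purging on every call costs time proportional to the cache size; a periodic sweep or per-entry timers would be alternatives with different trade-offs.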

Publish Date: 2018-08-27

URL: WS-2018-0236

CVSS 2 Score Details (5.5)

Base Score Metrics not available

Suggested Fix

Type: Upgrade version

Origin: https://bugzilla.redhat.com/show_bug.cgi?id=1623744

Release Date: 2019-05-30

Fix Resolution: 4.0.0

Vulnerable Library - jquery-1.7.2.min.js

JavaScript library for DOM operations

Library home page: https://cdnjs.cloudflare.com/ajax/libs/jquery/1.7.2/jquery.min.js

Path to dependency file: /tmp/ws-scm/serverless-mfa-api/node_modules/jmespath/index.html

Path to vulnerable library: /serverless-mfa-api/node_modules/jmespath/index.html

Dependency Hierarchy:

- :x: **jquery-1.7.2.min.js** (Vulnerable Library)

Found in HEAD commit: bc7a5cb545c98937d5fc3a8b979879b0177a757a

Vulnerability Details

jQuery before 1.9.0 is vulnerable to Cross-site Scripting (XSS) attacks. The jQuery(strInput) function does not differentiate selectors from HTML in a reliable fashion. In vulnerable versions, jQuery determined whether the input was HTML by looking for the '<' character anywhere in the string, giving attackers more flexibility when attempting to construct a malicious payload. In fixed versions, jQuery only deems the input to be HTML if it explicitly starts with the '<' character, limiting exploitability only to attackers who can control the beginning of a string, which is far less common.
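The difference between the two heuristics described above can be shown with a short sketch. This is Python written for illustration, not jQuery's source; the function names are hypothetical, and the checks model only the behavior as worded in the advisory text.

```python
def looks_like_html_anywhere(s: str) -> bool:
    """Older behavior as described: treat input as HTML if '<' appears anywhere."""
    return "<" in s


def looks_like_html_leading(s: str) -> bool:
    """Fixed behavior as described: treat input as HTML only if it starts with '<'."""
    return s.startswith("<")


# A selector-like string with markup appended by an attacker.
suspicious = "#item<img src=x>"
```

With the first check, `suspicious` is classified as HTML even though it begins like a selector; with the second it is not, so only an attacker who controls the start of the string can force the HTML path.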

Publish Date: 2018-01-18

URL: CVE-2012-6708

CVSS 3 Score Details (6.1)

Base Score Metrics:

- Exploitability Metrics:

- Attack Vector: Network

- Attack Complexity: Low

- Privileges Required: None

- User Interaction: Required

- Scope: Changed

- Impact Metrics:

- Confidentiality Impact: Low

- Integrity Impact: Low

- Availability Impact: None

Suggested Fix

Type: Upgrade version

Origin: https://nvd.nist.gov/vuln/detail/CVE-2012-6708

Release Date: 2018-01-18

Fix Resolution: jQuery - v1.9.0

Vulnerable Library - parse-url-5.0.1.tgz

An advanced url parser supporting git urls too.

Library home page: https://registry.npmjs.org/parse-url/-/parse-url-5.0.1.tgz

Path to dependency file: /package.json

Path to vulnerable library: /node_modules/parse-url

Dependency Hierarchy:

- lerna-3.13.4.tgz (Root Library)

- version-3.13.4.tgz

- github-client-3.13.3.tgz

- git-url-parse-11.1.2.tgz

- git-up-4.0.1.tgz

- :x: **parse-url-5.0.1.tgz** (Vulnerable Library)

Found in HEAD commit: b9888ec61e269386b4fab790d7d16670ad49b548

Found in base branch: fix-babel-core-js

Vulnerability Details

Exposure of Sensitive Information to an Unauthorized Actor in GitHub repository ionicabizau/parse-url prior to 7.0.0.

Publish Date: 2022-06-27

URL: CVE-2022-0722

CVSS 3 Score Details (4.8)

Base Score Metrics:

- Exploitability Metrics:

- Attack Vector: Network

- Attack Complexity: High

- Privileges Required: None

- User Interaction: None

- Scope: Unchanged

- Impact Metrics:

- Confidentiality Impact: Low

- Integrity Impact: Low

- Availability Impact: None

Suggested Fix

Type: Upgrade version

Origin: https://huntr.dev/bounties/2490ef6d-5577-4714-a4dd-9608251b4226

Release Date: 2022-06-27

Fix Resolution: parse-url - 6.0.1

Vulnerable Libraries - jquery-1.7.2.min.js, jquery-1.3.2.min.js, jquery-1.7.1.min.js

jquery-1.7.2.min.js

JavaScript library for DOM operations

Library home page: https://cdnjs.cloudflare.com/ajax/libs/jquery/1.7.2/jquery.min.js

Path to dependency file: freeCodeCamp/api-server/node_modules/jmespath/index.html

Path to vulnerable library: freeCodeCamp/api-server/node_modules/jmespath/index.html

Dependency Hierarchy:

- :x: **jquery-1.7.2.min.js** (Vulnerable Library)

jquery-1.3.2.min.js

JavaScript library for DOM operations

Library home page: https://cdnjs.cloudflare.com/ajax/libs/jquery/1.3.2/jquery.min.js

Path to dependency file: freeCodeCamp/tools/contributor/lib/node_modules/underscore.string/test/test_underscore/temp_tests.html

Path to vulnerable library: freeCodeCamp/tools/contributor/lib/node_modules/underscore.string/test/test_underscore/vendor/jquery.js

Dependency Hierarchy:

- :x: **jquery-1.3.2.min.js** (Vulnerable Library)

jquery-1.7.1.min.js

JavaScript library for DOM operations

Library home page: https://cdnjs.cloudflare.com/ajax/libs/jquery/1.7.1/jquery.min.js

Path to dependency file: freeCodeCamp/tools/contributor/dashboard-app/client/node_modules/sockjs/examples/multiplex/index.html

Path to vulnerable library: freeCodeCamp/tools/contributor/dashboard-app/client/node_modules/sockjs/examples/multiplex/index.html,freeCodeCamp/tools/contributor/dashboard-app/client/node_modules/sockjs/examples/echo/index.html,freeCodeCamp/tools/contributor/dashboard-app/client/node_modules/sockjs/examples/express-3.x/index.html,freeCodeCamp/tools/contributor/dashboard-app/client/node_modules/sockjs/examples/hapi/html/index.html

Dependency Hierarchy:

- :x: **jquery-1.7.1.min.js** (Vulnerable Library)

Found in HEAD commit: 311e89b65c48c9468ebef29fa6a623b9e24a3093

Found in base branch: master

Vulnerability Details

jQuery before 1.9.0 is vulnerable to Cross-site Scripting (XSS) attacks. The jQuery(strInput) function does not differentiate selectors from HTML in a reliable fashion. In vulnerable versions, jQuery determined whether the input was HTML by looking for the '<' character anywhere in the string, giving attackers more flexibility when attempting to construct a malicious payload. In fixed versions, jQuery only deems the input to be HTML if it explicitly starts with the '<' character, limiting exploitability only to attackers who can control the beginning of a string, which is far less common.

Publish Date: 2018-01-18

URL: CVE-2012-6708

CVSS 3 Score Details (6.1)

Base Score Metrics:

- Exploitability Metrics:

- Attack Vector: Network

- Attack Complexity: Low

- Privileges Required: None

- User Interaction: Required

- Scope: Changed

- Impact Metrics:

- Confidentiality Impact: Low

- Integrity Impact: Low

- Availability Impact: None

For more information on CVSS3 Scores, click here.

Suggested Fix

Type: Upgrade version

Origin: https://nvd.nist.gov/vuln/detail/CVE-2012-6708

Release Date: 2018-01-18

Fix Resolution: jQuery - v1.9.0

Vulnerable Libraries - jquery-1.7.2.min.js, jquery-1.3.2.min.js, jquery-1.7.1.min.js

jquery-1.7.2.min.js

JavaScript library for DOM operations

Library home page: https://cdnjs.cloudflare.com/ajax/libs/jquery/1.7.2/jquery.min.js

Path to dependency file: freeCodeCamp/api-server/node_modules/jmespath/index.html

Path to vulnerable library: freeCodeCamp/api-server/node_modules/jmespath/index.html

Dependency Hierarchy:

- :x: **jquery-1.7.2.min.js** (Vulnerable Library)

jquery-1.3.2.min.js

JavaScript library for DOM operations

Library home page: https://cdnjs.cloudflare.com/ajax/libs/jquery/1.3.2/jquery.min.js

Path to dependency file: freeCodeCamp/tools/contributor/lib/node_modules/underscore.string/test/test_underscore/temp_tests.html

Path to vulnerable library: freeCodeCamp/tools/contributor/lib/node_modules/underscore.string/test/test_underscore/vendor/jquery.js

Dependency Hierarchy:

- :x: **jquery-1.3.2.min.js** (Vulnerable Library)

jquery-1.7.1.min.js

JavaScript library for DOM operations

Library home page: https://cdnjs.cloudflare.com/ajax/libs/jquery/1.7.1/jquery.min.js

Path to dependency file: freeCodeCamp/tools/contributor/dashboard-app/client/node_modules/sockjs/examples/multiplex/index.html

Path to vulnerable library: freeCodeCamp/tools/contributor/dashboard-app/client/node_modules/sockjs/examples/multiplex/index.html,freeCodeCamp/tools/contributor/dashboard-app/client/node_modules/sockjs/examples/echo/index.html,freeCodeCamp/tools/contributor/dashboard-app/client/node_modules/sockjs/examples/express-3.x/index.html,freeCodeCamp/tools/contributor/dashboard-app/client/node_modules/sockjs/examples/hapi/html/index.html

Dependency Hierarchy:

- :x: **jquery-1.7.1.min.js** (Vulnerable Library)

Found in HEAD commit: 311e89b65c48c9468ebef29fa6a623b9e24a3093

Found in base branch: master

Vulnerability Details

jQuery before 1.9.0 is vulnerable to Cross-site Scripting (XSS) attacks. The jQuery(strInput) function does not differentiate selectors from HTML in a reliable fashion. In vulnerable versions, jQuery determined whether the input was HTML by looking for the '<' character anywhere in the string, giving attackers more flexibility when attempting to construct a malicious payload. In fixed versions, jQuery only deems the input to be HTML if it explicitly starts with the '<' character, limiting exploitability only to attackers who can control the beginning of a string, which is far less common.

Publish Date: 2018-01-18

URL: CVE-2012-6708

CVSS 3 Score Details (6.1)

Base Score Metrics:

- Exploitability Metrics:

- Attack Vector: Network

- Attack Complexity: Low

- Privileges Required: None

- User Interaction: Required

- Scope: Changed

- Impact Metrics:

- Confidentiality Impact: Low

- Integrity Impact: Low

- Availability Impact: None

For more information on CVSS3 Scores, click here.

Suggested Fix

Type: Upgrade version

Origin: https://nvd.nist.gov/vuln/detail/CVE-2012-6708

Release Date: 2018-01-18

Fix Resolution: jQuery - v1.9.0

The tests should perform at least

- Component testing for the components present and their numbers

- Simulation for events like Button Press

- Props testing

The project tests are based on jest and enzyme. Tests like test1 or test2 could serve as references.

",1.0,"Tests for Weather components - WeatherDetail, WeatherList need tests

WeatherDetail.js

WeatherList.js

The tests should perform at least

- Component testing for the components present and their numbers

- Simulation for events like Button Press

- Props testing

The project tests are based on jest and enzyme. Tests like test1 or test2 could serve as references.

",0,tests for weather components weatherdetail weatherlist need tests the tests should perform at least component testing for the components present and their numbers simulation for events like button press props testing the project tests are based on jest and enzyme tests like or could serve as references ,0

312,5732077001.0,IssuesEvent,2017-04-21 14:02:40,mongoclient/mongoclient,https://api.github.com/repos/mongoclient/mongoclient,closed,Loading libssl.1.0.0 fails,portable-linux potential bug,"I'm trying to run the portable linux-x64 2.0.0 version and it gets stuck on the please wait screen.

I get the following error when running from terminal:

```

[MONGOCLIENT] [MONGOD-STDERR] /home/myuser/linux-portable-x64/resources/app/bin/mongod: error while loading shared libraries: libssl.so.1.0.0: cannot open shared object file: No such file or directory

[MONGOCLIENT] [MONGOD-EXIT] 127

```

I'm using fedora 25 with openssl 1.0.2k installed.

I tried linking libssl.so.1.0.0 to libssl.so with no success.

## Possible Solution

Also, is it possible to make mongoclient use my system's running mongod instead of running its own mongod?

## Your Environment

portable linux-x64 2.0.0 version electron app

Fedora Linux 25 with openssl and openssl-devel 1.0.2k",True,"Loading libssl.1.0.0 fails - I'm trying to run the portable linux-x64 2.0.0 version and it gets stuck on the please wait screen.

I get the following error when running from terminal:

```

[MONGOCLIENT] [MONGOD-STDERR] /home/myuser/linux-portable-x64/resources/app/bin/mongod: error while loading shared libraries: libssl.so.1.0.0: cannot open shared object file: No such file or directory

[MONGOCLIENT] [MONGOD-EXIT] 127

```

I'm using fedora 25 with openssl 1.0.2k installed.

I tried linking libssl.so.1.0.0 to libssl.so with no success.

## Possible Solution

Also, is it possible to make mongoclient use my system's running mongod instead of running its own mongod?

## Your Environment

portable linux-x64 2.0.0 version electron app

Fedora Linux 25 with openssl and openssl-devel 1.0.2k",1,loading libssl fails i m trying to run the portable linux version and it gets stuck on the please wait screen i get the following error when running from terminal home myuser linux portable resources app bin mongod error while loading shared libraries libssl so cannot open shared object file no such file or directory i m using fedora with openssl installed i tried linking libssl so to libssl so with no success possible solution also is it possible to make mongoclient use my systems running mongod instead of running it s own mongod your environment portable linux version electron app fedora linux with openssl and openssl devel ,1

809,10546870836.0,IssuesEvent,2019-10-02 22:48:27,magnumripper/JohnTheRipper,https://api.github.com/repos/magnumripper/JohnTheRipper,closed,OpenCL formats failing on macOS with Intel HD Graphics 630,notes/external issues portability,"New problems in Mojave (or since last time I checked)

See also #3235, #3434

```

Device 1: Intel(R) HD Graphics 630

Testing: ansible-opencl, Ansible Vault [PBKDF2-SHA256 HMAC-SHA256 OpenCL]... FAILED (cmp_all(49))

Testing: axcrypt-opencl [SHA1 AES OpenCL]... FAILED (get_key(6))

Testing: EncFS-opencl [PBKDF2-SHA1 AES OpenCL]... FAILED (cmp_all(1))

Testing: OpenBSD-SoftRAID-opencl [PBKDF2-SHA1 AES OpenCL]... FAILED (cmp_all(1))

Testing: telegram-opencl [PBKDF2-SHA1 AES OpenCL]... FAILED (cmp_all(2))

Testing: wpapsk-opencl, WPA/WPA2/PMF/PMKID PSK [PBKDF2-SHA1 OpenCL]... FAILED (cmp_all(10))

Testing: wpapsk-pmk-opencl, WPA/WPA2/PMF/PMKID master key [MD5/SHA-1/SHA-2 OpenCL]... FAILED (cmp_all(3))

7 out of 83 tests have FAILED

```",True,"OpenCL formats failing on macOS with Intel HD Graphics 630 - New problems in Mojave (or since last time I checked)

See also #3235, #3434

```

Device 1: Intel(R) HD Graphics 630

Testing: ansible-opencl, Ansible Vault [PBKDF2-SHA256 HMAC-SHA256 OpenCL]... FAILED (cmp_all(49))

Testing: axcrypt-opencl [SHA1 AES OpenCL]... FAILED (get_key(6))

Testing: EncFS-opencl [PBKDF2-SHA1 AES OpenCL]... FAILED (cmp_all(1))

Testing: OpenBSD-SoftRAID-opencl [PBKDF2-SHA1 AES OpenCL]... FAILED (cmp_all(1))

Testing: telegram-opencl [PBKDF2-SHA1 AES OpenCL]... FAILED (cmp_all(2))

Testing: wpapsk-opencl, WPA/WPA2/PMF/PMKID PSK [PBKDF2-SHA1 OpenCL]... FAILED (cmp_all(10))

Testing: wpapsk-pmk-opencl, WPA/WPA2/PMF/PMKID master key [MD5/SHA-1/SHA-2 OpenCL]... FAILED (cmp_all(3))

7 out of 83 tests have FAILED

```",1,opencl formats failing on macos with intel hd graphics new problems in mojave or since last time i checked see also device intel r hd graphics testing ansible opencl ansible vault failed cmp all testing axcrypt opencl failed get key testing encfs opencl failed cmp all testing openbsd softraid opencl failed cmp all testing telegram opencl failed cmp all testing wpapsk opencl wpa pmf pmkid psk failed cmp all testing wpapsk pmk opencl wpa pmf pmkid master key failed cmp all out of tests have failed ,1

1274,17018701024.0,IssuesEvent,2021-07-02 15:28:15,TYPO3-Solr/ext-solr,https://api.github.com/repos/TYPO3-Solr/ext-solr,closed,[BUG] Faked TSFE does not set applicationType in request,BACKPORTABLE,"**Describe the bug**

When initializing the TSFE a TYPO3_REQUEST is also initialized.

A request must always have an applicationType attribute.

If no applicationType is given, `ApplicationType::fromRequest($GLOBALS['TYPO3_REQUEST'])`

can't be used and throws an exception.

**To Reproduce**

Call `ApplicationType::fromRequest($GLOBALS['TYPO3_REQUEST'])` on the

`$GLOBALS['TYPO3_REQUEST']` that is initialized in

`Classes/FrontendEnvironment/Tsfe.php`

This happens for example in https://github.com/networkteam/sentry_client/blob/master/Classes/Client.php#L78

**Expected behavior**

A valid TYPO3_REQUEST should be initialized

**Screenshots**

If applicable, add screenshots to help explain your problem.

**Used versions (please complete the following information):**

- TYPO3 Version: 10.4.16

- EXT:solr Version: 11.0.3

",True,"[BUG] Faked TSFE does not set applicationType in request - **Describe the bug**

When initializing the TSFE a TYPO3_REQUEST is also initialized.

A request must always have an applicationType attribute.

If no applicationType is given, `ApplicationType::fromRequest($GLOBALS['TYPO3_REQUEST'])`

can't be used and throws an exception.

**To Reproduce**

Call `ApplicationType::fromRequest($GLOBALS['TYPO3_REQUEST'])` on the

`$GLOBALS['TYPO3_REQUEST']` that is initialized in

`Classes/FrontendEnvironment/Tsfe.php`

This happens for example in https://github.com/networkteam/sentry_client/blob/master/Classes/Client.php#L78

**Expected behavior**

A valid TYPO3_REQUEST should be initialized

**Screenshots**

If applicable, add screenshots to help explain your problem.

**Used versions (please complete the following information):**

- TYPO3 Version: 10.4.16

- EXT:solr Version: 11.0.3

",1, faked tsfe does not set applicationtype in request describe the bug when initializing the tsfe a request is also initialized a request must always have a applicationtype attribute if no applicationtype is given applicationtype fromrequest globals can t bee used and throws an exception to reproduce call applicationtype fromrequest globals on the globals that is initialized in classes frontendenvironment tsfe php this happens for example in expected behavior a valid request should be initialized screenshots if applicable add screenshots to help explain your problem used versions please complete the following information version ext solr version ,1

163508,13920506105.0,IssuesEvent,2020-10-21 10:32:17,dry-python/returns,https://api.github.com/repos/dry-python/returns,closed,Interfaces documentation,documentation,"This issue is to track what interface we have to document yet, and to split the PRs as well into multiples instead of one with all interfaces:

- [x] Mappable

- [x] Bindable

- [ ] Applicative

- [ ] Container",1.0,"Interfaces documentation - This issue is to track what interface we have to document yet, and to split the PRs as well into multiples instead of one with all interfaces:

- [x] Mappable

- [x] Bindable

- [ ] Applicative

- [ ] Container",0,interfaces documentation this issue is to track what interface we have to document yet and to split the prs as well into multiples instead of one with all interfaces mappable bindable applicative container,0

226,4629425776.0,IssuesEvent,2016-09-28 09:13:11,ocaml/opam-repository,https://api.github.com/repos/ocaml/opam-repository,closed,Package zarith 1.4.1 fails to install in Cygwin+MinGW,portability,"This is related to #5588 : because `gmp.h` is in `/usr/include` and not `/usr/local/include`, `zarith` also fails to find the `gmp` package.

Incidentally, the `opam` file already considers some OSes in a special manner (openbsd, freebsd and darwin), so it suffices to consider cygwin as one of these ""special"" OSes. The `CFLAGS` variable will then be set accordingly.

A second issue I had with zarith 1.4.1 was line 242 in the `configure` file:

if test ""$ocamllibdir"" = ""auto""; then ocamllibdir=`ocamlc -where`; fi

Due to Windows (and possibly a MinGW OCaml compiler) shenanigans, `ocamlc` introduces a `\r` at the end of the command, and so the `ocamllibdir` variable contains the `\r` which prevents the rest from working. I hacked a `| tr -d '\r'` after the `-where` and it worked, but it seems there is already a `echo_n()` function for that.

I didn't submit a pull request because I don't know the exact right way to fix these issues (e.g. I don't have time now to fix them the proper way), but after doing both these patches (and patching my `conf-gmp` as mentioned in #5588) I was able to install zarith on my Cygwin.

I didn't test its actual *usage* though.",True,"Package zarith 1.4.1 fails to install in Cygwin+MinGW - This is related to #5588 : because `gmp.h` is in `/usr/include` and not `/usr/local/include`, `zarith` also fails to find the `gmp` package.

Incidentally, the `opam` file already considers some OSes in a special manner (openbsd, freebsd and darwin), so it suffices to consider cygwin as one of these ""special"" OSes. The `CFLAGS` variable will then be set accordingly.

A second issue I had with zarith 1.4.1 was line 242 in the `configure` file:

if test ""$ocamllibdir"" = ""auto""; then ocamllibdir=`ocamlc -where`; fi

Due to Windows (and possibly a MinGW OCaml compiler) shenanigans, `ocamlc` introduces a `\r` at the end of the command, and so the `ocamllibdir` variable contains the `\r` which prevents the rest from working. I hacked a `| tr -d '\r'` after the `-where` and it worked, but it seems there is already a `echo_n()` function for that.

I didn't submit a pull request because I don't know the exact right way to fix these issues (e.g. I don't have time now to fix them the proper way), but after doing both these patches (and patching my `conf-gmp` as mentioned in #5588) I was able to install zarith on my Cygwin.

I didn't test its actual *usage* though.",1,package zarith fails to install in cygwin mingw this is related to because gmp h is in usr include and not usr local include zarith also fails to find the gmp package incidentally the opam file already considers some oses in a special manner openbsd freebsd and darwin so it suffices to consider cygwin as one of these special oses the cflags variable will then be set accordingly a second issue i had with zarith was line in the configure file if test ocamllibdir auto then ocamllibdir ocamlc where fi due to windows and possibly a mingw ocaml compiler shenanigans ocamlc introduces a r at the end of the command and so the ocamllibdir variable contains the r which prevents the rest from working i hacked a tr d r after the where and it worked but it seems there is already a echo n function for that i didn t submit a pull request because i don t know the exact right way to fix these issues e g i don t have time now to fix them the proper way but after doing both these patches and patching my conf gmp as mentioned in i was able to install zarith on my cygwin i didn t test its actual usage though ,1

147307,13205642297.0,IssuesEvent,2020-08-14 18:23:50,DS4PS/cpp-526-sum-2020,https://api.github.com/repos/DS4PS/cpp-526-sum-2020,opened,Chapter 1 - Arithmetic in R and Function sum(),documentation final-dashboard,"I'm having issues with function `sum()` and `NA` values.

**Expectation:** I expected to get the sum of all values in variable `x`.

",1.0,"Chapter 1 - Arithmetic in R and Function sum() - I'm having issues with function `sum()` and `NA` values.

**Expectation:** I expected to get the sum of all values in variable `x`.

",0,chapter arithmetic in r and function sum i m having issues with function sum and na values expectation i expected to get the sum of all values in variable x ,0

88487,10572714114.0,IssuesEvent,2019-10-07 10:11:46,StefanNieuwenhuis/databindr,https://api.github.com/repos/StefanNieuwenhuis/databindr,closed,Add proper readme,documentation,"As user I want to know how to use this library, so I want to be informed through an elaborate readme.",1.0,"Add proper readme - As user I want to know how to use this library, so I want to be informed through an elaborate readme.",0,add proper readme as user i want to know how to use this library so i want to be informed through an elaborate readme ,0

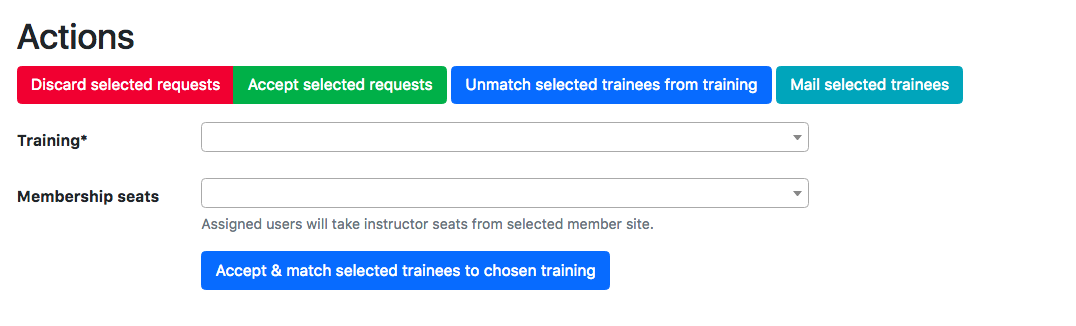

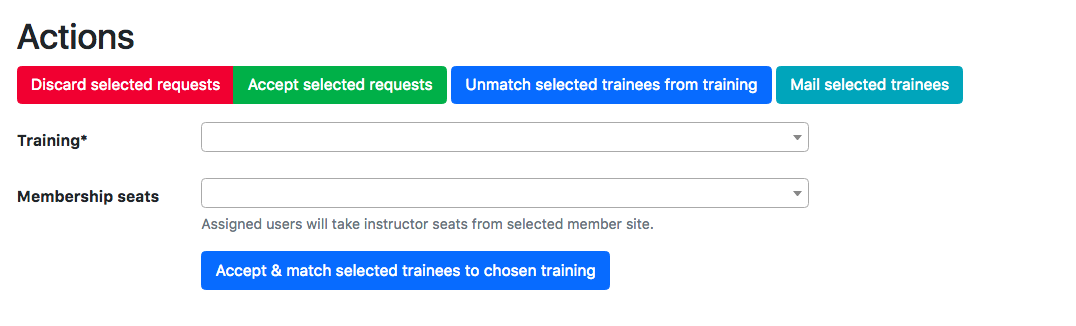

267085,8378961435.0,IssuesEvent,2018-10-06 19:35:33,swcarpentry/amy,https://api.github.com/repos/swcarpentry/amy,closed,open training should be an option for assigning training requests to ttt events,component: user interface (UI) priority: essential type: bug,"Related to https://github.com/swcarpentry/amy/issues/1055

When matching training requests to a ttt event from the training request page, there is no check box to mark them as open training applicants.

This functionality **does** negatively affect usability as @sheraaron's workflow involves going to the request page and assigning people to trainings in bulk.

@karenword @maneesha ",1.0,"open training should be an option for assigning training requests to ttt events - Related to https://github.com/swcarpentry/amy/issues/1055

When matching training requests to a ttt event from the training request page, there is no check box to mark them as open training applicants.

This functionality **does** negatively affect usability as @sheraaron's workflow involves going to the request page and assigning people to trainings in bulk.

@karenword @maneesha ",0,open training should be an option for assigning training requests to ttt events related to when matching training requests to a ttt event from the training request page there is no check box to mark them as open training applicants this functionality does negatively affect usability as sheraaron s workflow involves going to the request page and assigning people to trainings in bulk karenword maneesha ,0

193477,14653875159.0,IssuesEvent,2020-12-28 07:14:06,github-vet/rangeloop-pointer-findings,https://api.github.com/repos/github-vet/rangeloop-pointer-findings,closed,coreos/fleet: fleetctl/destroy_test.go; 25 LoC,fresh small test,"

Found a possible issue in [coreos/fleet](https://www.github.com/coreos/fleet) at [fleetctl/destroy_test.go](https://github.com/coreos/fleet/blob/4522498327e92ffe6fa24eaa087c73e5af4adb53/fleetctl/destroy_test.go#L67-L91)

Below is the message reported by the analyzer for this snippet of code. Beware that the analyzer only reports the first issue it finds, so please do not limit your consideration to the contents of the below message.

> range-loop variable r used in defer or goroutine at line 76

[Click here to see the code in its original context.](https://github.com/coreos/fleet/blob/4522498327e92ffe6fa24eaa087c73e5af4adb53/fleetctl/destroy_test.go#L67-L91)

Click here to show the 25 line(s) of Go which triggered the analyzer.

```go

for _, r := range results {

var wg sync.WaitGroup

errchan := make(chan error)

cAPI = newFakeRegistryForCommands(unitPrefix, len(r.units), false)

wg.Add(2)

go func() {

defer wg.Done()

doDestroyUnits(t, r, errchan)

}()

go func() {

defer wg.Done()

doDestroyUnits(t, r, errchan)

}()

go func() {

wg.Wait()

close(errchan)

}()

for err := range errchan {

t.Errorf(""%v"", err)

}

}

```
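A minimal sketch of how a finding of this kind is commonly addressed, assuming a Go toolchain older than 1.22 (from Go 1.22 on, each loop iteration gets its own copy of the loop variable). The function `collect` and its inputs are hypothetical and are not taken from the fleet code base. In the snippet above, each iteration appears to drain `errchan` before moving on, so the capture may well be harmless there; the sketch only shows the general pattern of copying the loop variable before a goroutine closes over it.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// collect is a hypothetical helper: it starts one goroutine per item and
// returns the values the goroutines observed, sorted so that the result
// does not depend on scheduling order.
func collect(items []string) []string {
	var (
		wg   sync.WaitGroup
		mu   sync.Mutex
		seen []string
	)
	for _, r := range items {
		r := r // per-iteration copy; required before Go 1.22, harmless afterwards
		wg.Add(1)
		go func() {
			defer wg.Done()
			mu.Lock()
			defer mu.Unlock()
			seen = append(seen, r) // reads the copy, not the shared loop variable
		}()
	}
	wg.Wait()
	sort.Strings(seen)
	return seen
}

func main() {
	fmt.Println(collect([]string{"a", "b", "c"}))
}
```

Passing the value as an argument to the goroutine's function literal (`go func(r string) { ... }(r)`) would achieve the same effect; which form reads better is largely a matter of taste.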

Leave a reaction on this issue to contribute to the project by classifying this instance as a **Bug** :-1:, **Mitigated** :+1:, or **Desirable Behavior** :rocket:

See the descriptions of the classifications [here](https://github.com/github-vet/rangeclosure-findings#how-can-i-help) for more information.

commit ID: 4522498327e92ffe6fa24eaa087c73e5af4adb53

",1.0,"coreos/fleet: fleetctl/destroy_test.go; 25 LoC -

Found a possible issue in [coreos/fleet](https://www.github.com/coreos/fleet) at [fleetctl/destroy_test.go](https://github.com/coreos/fleet/blob/4522498327e92ffe6fa24eaa087c73e5af4adb53/fleetctl/destroy_test.go#L67-L91)

Below is the message reported by the analyzer for this snippet of code. Beware that the analyzer only reports the first issue it finds, so please do not limit your consideration to the contents of the below message.

> range-loop variable r used in defer or goroutine at line 76

[Click here to see the code in its original context.](https://github.com/coreos/fleet/blob/4522498327e92ffe6fa24eaa087c73e5af4adb53/fleetctl/destroy_test.go#L67-L91)

Click here to show the 25 line(s) of Go which triggered the analyzer.

```go

for _, r := range results {

var wg sync.WaitGroup

errchan := make(chan error)

cAPI = newFakeRegistryForCommands(unitPrefix, len(r.units), false)

wg.Add(2)

go func() {

defer wg.Done()

doDestroyUnits(t, r, errchan)

}()

go func() {

defer wg.Done()

doDestroyUnits(t, r, errchan)

}()

go func() {

wg.Wait()

close(errchan)

}()

for err := range errchan {

t.Errorf(""%v"", err)

}

}

```

Leave a reaction on this issue to contribute to the project by classifying this instance as a **Bug** :-1:, **Mitigated** :+1:, or **Desirable Behavior** :rocket:

See the descriptions of the classifications [here](https://github.com/github-vet/rangeclosure-findings#how-can-i-help) for more information.

commit ID: 4522498327e92ffe6fa24eaa087c73e5af4adb53

",0,coreos fleet fleetctl destroy test go loc found a possible issue in at below is the message reported by the analyzer for this snippet of code beware that the analyzer only reports the first issue it finds so please do not limit your consideration to the contents of the below message range loop variable r used in defer or goroutine at line click here to show the line s of go which triggered the analyzer go for r range results var wg sync waitgroup errchan make chan error capi newfakeregistryforcommands unitprefix len r units false wg add go func defer wg done dodestroyunits t r errchan go func defer wg done dodestroyunits t r errchan go func wg wait close errchan for err range errchan t errorf v err leave a reaction on this issue to contribute to the project by classifying this instance as a bug mitigated or desirable behavior rocket see the descriptions of the classifications for more information commit id ,0

11354,2649533147.0,IssuesEvent,2015-03-15 00:50:10,Badadroid/badadroid,https://api.github.com/repos/Badadroid/badadroid,closed,insufficient memory! (move ext2system.img),auto-migrated Priority-Medium Type-Defect,"```

Can you move the ext2system.img to the SD card?

I can't change the theme or run the Samsung Apps application because I don't have enough

space!

```

Original issue reported on code.google.com by `granzier...@gmail.com` on 14 Dec 2011 at 2:47",1.0,"insufficient memory! (move ext2system.img) - ```

Can you move the ext2system.img to the SD card?

I can't change the theme or run the Samsung Apps application because I don't have enough

space!

```

Original issue reported on code.google.com by `granzier...@gmail.com` on 14 Dec 2011 at 2:47",0,insufficient memory move img can you move the img to the sd card i can t change theme and run samsung apps application because i haven t enoght space original issue reported on code google com by granzier gmail com on dec at ,0

581,7986105716.0,IssuesEvent,2018-07-19 00:02:45,rust-lang-nursery/stdsimd,https://api.github.com/repos/rust-lang-nursery/stdsimd,closed,Portable vector shuffles,A-portable,"I've just submitted a PR with an API for portable vector shuffles (https://github.com/rust-lang-nursery/stdsimd/pull/387). From the docs:

```rust

// Shuffle allows reordering the elements of a vector:

let x = i32x4::new(1, 2, 3, 4);

let r = shuffle!(x, [2, 1, 3, 0]);

assert_eq!(r, i32x4::new(3, 2, 4, 1));

// The resulting vector can be smaller than the input:

let r = shuffle!(x, [1, 3]);

assert_eq!(r, i32x2::new(2, 4));

// Equal:

let r = shuffle!(x, [1, 3, 2, 0]);

assert_eq!(r, i32x4::new(2, 4, 3, 1));

// Or larger (at most twice as large):

let r = shuffle!(x, [1, 3, 2, 2, 1, 3, 2, 2]);

assert_eq!(r, i32x8::new(2, 4, 3, 3, 2, 4, 3, 3));

// It also allows reordering elements of two vectors:

let y = i32x4::new(5, 6, 7, 8);

let r = shuffle!(x, y, [4, 0, 5, 1]);

assert_eq!(r, i32x4::new(5, 1, 6, 2));

// And this can be used to construct larger or smaller

// vectors as well.

```

It would be nice to gather feed-back on this.",True,"Portable vector shuffles - I've just submitted a PR with an API for portable vector shuffles (https://github.com/rust-lang-nursery/stdsimd/pull/387). From the docs:

```rust

// Shuffle allows reordering the elements of a vector:

let x = i32x4::new(1, 2, 3, 4);

let r = shuffle!(x, [2, 1, 3, 0]);

assert_eq!(r, i32x4::new(3, 2, 4, 1));

// The resulting vector can be smaller than the input:

let r = shuffle!(x, [1, 3]);

assert_eq!(r, i32x2::new(2, 4));

// Equal:

let r = shuffle!(x, [1, 3, 2, 0]);

assert_eq!(r, i32x4::new(2, 4, 3, 1));

// Or larger (at most twice as large):

let r = shuffle!(x, [1, 3, 2, 2, 1, 3, 2, 2]);

assert_eq!(r, i32x8::new(2, 4, 3, 3, 2, 4, 3, 3));

// It also allows reordering elements of two vectors:

let y = i32x4::new(5, 6, 7, 8);

let r = shuffle!(x, y, [4, 0, 5, 1]);

assert_eq!(r, i32x4::new(5, 1, 6, 2));

// And this can be used to construct larger or smaller

// vectors as well.

```

It would be nice to gather feed-back on this.",1,portable vector shuffles i ve just submitted a pr with an api for portable vector shuffles from the docs rust shuffle allows reordering the elements of a vector let x new let r shuffle x assert eq r new the resulting vector can be smaller than the input let r shuffle x assert eq r new equal let r shuffle x assert eq r new or larger at most twice as large et r shuffle x assert eq r new it also allows reordering elements of two vectors let y new let r shuffle x y assert eq r new and this can be used to construct larger or smaller vectors as well it would be nice to gather feed back on this ,1

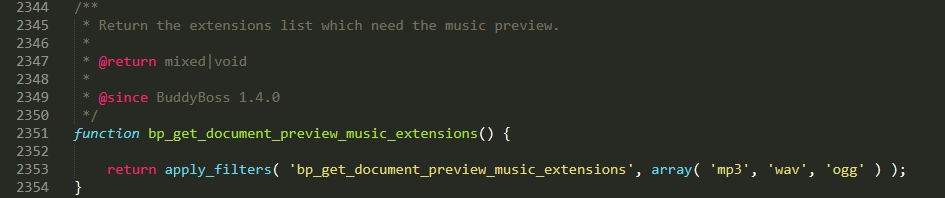

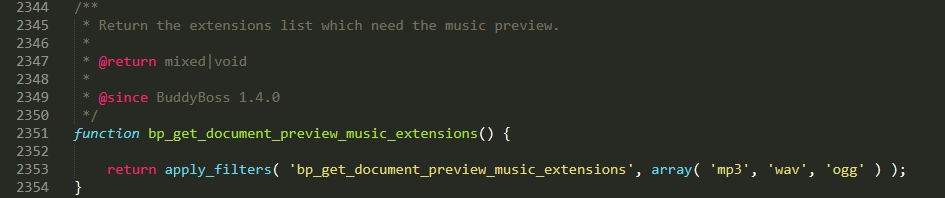

439920,12690494028.0,IssuesEvent,2020-06-21 12:27:06,buddyboss/buddyboss-platform,https://api.github.com/repos/buddyboss/buddyboss-platform,opened,Audio file preview for other formats,feature: enhancement priority: medium,"**Is your feature request related to a problem? Please describe.**

Audio file preview uploaded in documents only supports MP3, WAV, and OGG

**Describe alternatives you've considered**

Audio preview should be based on the MIME type

**Support ticket links**

https://secure.helpscout.net/conversation/1198744310/78453",1.0,"Audio file preview for other formats - **Is your feature request related to a problem? Please describe.**

Audio file preview uploaded in documents only supports MP3, WAV, and OGG

**Describe alternatives you've considered**

Audio preview should be based on the MIME type

**Support ticket links**

https://secure.helpscout.net/conversation/1198744310/78453",0,audio file preview for other formats is your feature request related to a problem please describe audio file preview uploaded in documents only supports wav and ogg describe alternatives you ve considered audio preview should be based on the mime type support ticket links ,0

778507,27318748458.0,IssuesEvent,2023-02-24 17:49:18,GoogleContainerTools/skaffold,https://api.github.com/repos/GoogleContainerTools/skaffold,closed,Not able to reference secrets path to home folder,kind/bug priority/p3,"

### Expected behavior

I want to reference a secret file in `build.artifacts.docker.secret.src` in the home directory. This works well if using the realpath but not ~.

### Actual behavior

Using the real path /home/username/.npmrc works, but ~/.npmrc does not: skaffold appends ~/.npmrc to the current working directory, so it looks for the file in the wrong place.

I also tried to reference the home directory through the $HOME environment variable, but this is not a templated field, so that doesn't work either.
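For illustration only, here is a minimal sketch of the kind of tilde expansion that would make `~/.npmrc` resolve as expected. The helper `expandHome` is hypothetical and is not part of skaffold; the sketch assumes only a leading `~` or `~/` should be expanded and that the home directory comes from the standard library's `os.UserHomeDir`.

```go
package main

import (
	"fmt"
	"os"
	"path/filepath"
	"strings"
)

// expandHome is a hypothetical helper: it replaces a leading "~" or "~/"
// with the given home directory and leaves every other path untouched.
func expandHome(path, home string) string {
	if path == "~" {
		return home
	}
	if strings.HasPrefix(path, "~/") {
		return filepath.Join(home, path[2:])
	}
	return path
}

func main() {
	home, err := os.UserHomeDir()
	if err != nil {
		fmt.Fprintln(os.Stderr, "cannot determine home directory:", err)
		os.Exit(1)
	}
	// Expand the path before it is joined with any working directory.
	fmt.Println(expandHome("~/.npmrc", home))
}
```

The expansion has to happen before the path is treated as relative; once `~/.npmrc` has been joined with the working directory, the tilde is just an ordinary directory name.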

### Information

- Skaffold version: skaffold 2.1.0

- Operating system: MacOS Ventura 13

- Installed via: brew

- Contents of skaffold.yaml:

```yaml

build:

local:

useBuildkit: true

artifacts:

- image: image-name

context: .

docker:

secrets:

- id: npmrc

src: ~/.npmrc

```

",1.0,"Not able to reference secrets path to home folder -

### Expected behavior

I want to reference a secret file in `build.artifacts.docker.secret.src` in the home directory. This works well if using the realpath but not ~.

### Actual behavior

Using the realpath /home/username/.npmrc works, but using ~/.npmrc doesn't work because skaffold appends ~/.npmrc to the current working directory, making it search in the wrong place.

I also tried to reference the home directory using the $HOME env variable, but it's not a templated field, so that doesn't work either.

### Information

- Skaffold version: skaffold 2.1.0

- Operating system: MacOS Ventura 13

- Installed via: brew

- Contents of skaffold.yaml:

```yaml

build:

  local:

    useBuildkit: true

  artifacts:

  - image: image-name

    context: .

    docker:

      secrets:

      - id: npmrc

        src: ~/.npmrc

```

",0,not able to reference secrets path to home folder issues without logs and details are more complicated to fix please help us by filling the template below expected behavior i want to reference a secret file in build artifacts docker secret src in the home directory this works well if using the realpath but not actual behavior using the realpath home username npmrc works but using npmrc doesn t work because skaffold is appending npmrc to the actual working directory making it search to the wrong place i also tried to reference the home directory using home env variable but it s not a templated field so doesn t work information skaffold version skaffold operating system macos ventura installed via brew contents of skaffold yaml yaml build local usebuildkit true artifacts image image name context docker secrets id npmrc src npmrc ,0

639,8578486660.0,IssuesEvent,2018-11-13 05:20:00,chapel-lang/chapel,https://api.github.com/repos/chapel-lang/chapel,opened,Heterogeneous GASNET_DOMAIN_COUNT value causes hangs,area: Third-Party type: Portability,"Under gasnet-aries we set `GASNET_DOMAIN_COUNT` to ``. We've been seeing intermittent hangs on a heterogeneous system, which we've narrowed down to a single job getting different node types. As a specific example, a job on a 28-core BW and 68-core KNL will hang. However, not all combinations seem to hang, as a job on 10-core IV and 20-core IV seems to work.

Here's an awful patch that I've been using to reproduce. It sets `GASNET_DOMAIN_COUNT` to `SLURM_NODEID+1`

```diff

diff --git a/runtime/src/comm/gasnet/comm-gasnet.c b/runtime/src/comm/gasnet/comm-gasnet.c

index 2b1e0c6f25..76971f6014 100644

--- a/runtime/src/comm/gasnet/comm-gasnet.c

+++ b/runtime/src/comm/gasnet/comm-gasnet.c

@@ -766,12 +766,13 @@ static void set_max_segsize() {

}

}

+#include ""chpl-env.h""

static void set_num_comm_domains() {

#if defined(GASNET_CONDUIT_GEMINI) || defined(GASNET_CONDUIT_ARIES)

char num_cpus_val[22]; // big enough for an unsigned 64-bit quantity

int num_cpus;

- num_cpus = chpl_topo_getNumCPUsPhysical(true) + 1;

+ num_cpus = chpl_env_str_to_int(""SLURM_NODEID"", getenv(""SLURM_NODEID""), 0) + 1;

snprintf(num_cpus_val, sizeof(num_cpus_val), ""%d"", num_cpus);

if (setenv(""GASNET_DOMAIN_COUNT"", num_cpus_val, 0) != 0) {

```

This fails for me with 2 or more locales.",True,"Heterogeneous GASNET_DOMAIN_COUNT value causes hangs - Under gasnet-aries we set `GASNET_DOMAIN_COUNT` to ``. We've been seeing intermittent hangs on a heterogeneous system, which we've narrowed down to a single job getting different node types. As a specific example, a job on a 28-core BW and 68-core KNL will hang. However, not all combinations seem to hang, as a job on 10-core IV and 20-core IV seems to work.

Here's an awful patch that I've been using to reproduce. It sets `GASNET_DOMAIN_COUNT` to `SLURM_NODEID+1`

```diff

diff --git a/runtime/src/comm/gasnet/comm-gasnet.c b/runtime/src/comm/gasnet/comm-gasnet.c

index 2b1e0c6f25..76971f6014 100644

--- a/runtime/src/comm/gasnet/comm-gasnet.c

+++ b/runtime/src/comm/gasnet/comm-gasnet.c

@@ -766,12 +766,13 @@ static void set_max_segsize() {

}

}

+#include ""chpl-env.h""

static void set_num_comm_domains() {

#if defined(GASNET_CONDUIT_GEMINI) || defined(GASNET_CONDUIT_ARIES)

char num_cpus_val[22]; // big enough for an unsigned 64-bit quantity

int num_cpus;

- num_cpus = chpl_topo_getNumCPUsPhysical(true) + 1;

+ num_cpus = chpl_env_str_to_int(""SLURM_NODEID"", getenv(""SLURM_NODEID""), 0) + 1;

snprintf(num_cpus_val, sizeof(num_cpus_val), ""%d"", num_cpus);

if (setenv(""GASNET_DOMAIN_COUNT"", num_cpus_val, 0) != 0) {

```

This fails for me with 2 or more locales.",1,heterogeneous gasnet domain count value causes hangs under gasnet aries we set gasnet domain count to we ve been seeing intermittent hangs on a heterogenous system which we ve narrowed down to a single job getting different node types as a specific example a job on a core bw and core knl will hang however not all combinations seem to hang as a job on core iv and core iv seems to work here s an awful patch that i ve been using to reproduce it sets gasnet domain count to slurm nodeid diff diff git a runtime src comm gasnet comm gasnet c b runtime src comm gasnet comm gasnet c index a runtime src comm gasnet comm gasnet c b runtime src comm gasnet comm gasnet c static void set max segsize include chpl env h static void set num comm domains if defined gasnet conduit gemini defined gasnet conduit aries char num cpus val big enough for an unsigned bit quantity int num cpus num cpus chpl topo getnumcpusphysical true num cpus chpl env str to int slurm nodeid getenv slurm nodeid snprintf num cpus val sizeof num cpus val d num cpus if setenv gasnet domain count num cpus val this fails for me with or more locales ,1

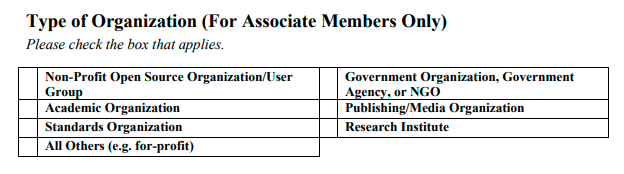

589399,17695950223.0,IssuesEvent,2021-08-24 15:18:18,EclipseFdn/react-eclipsefdn-members,https://api.github.com/repos/EclipseFdn/react-eclipsefdn-members,closed,Add a field to record the type of organization. ,Front End Backend top-priority,"We need to add a field to record the type of organization.

This would be a mandatory drop-down list and would appear before the Member Representative section.

The options would include:

- For Profit Organization

- Non-Profit Open Source Organization / User Group

- Academic Organization

- Standards Organization

- Government Organization, Government Agency, or NGO

- Publishing/Media Organization

- Research Institute

- All others

This information would also be useful to have as part of the summary received by the Membership Coordination team.

For reference, please see the screenshot attached.

Thanks,

Zahra

",1.0,"Add a field to record the type of organization. - We need to add a field to record the type of organization.

This would be a mandatory drop-down list and would appear before the Member Representative section.

The options would include:

- For Profit Organization

- Non-Profit Open Source Organization / User Group

- Academic Organization

- Standards Organization

- Government Organization, Government Agency, or NGO

- Publishing/Media Organization

- Research Institute

- All others

This information would also be useful to have as part of the summary received by the Membership Coordination team.

For reference, please see the screenshot attached.

Thanks,

Zahra

",0,add a field to record the type of organization we need to add a field to record the type of organization this would be a mandatory drop down list and would appear before the member representative section the options would include for profit organization non profit open source organization user group academic organization standards organization government organization government agency or ngo publishing media organization research institute all others this information would also be useful to have as part of the summary received by the membership coordination team for reference please see the screenshot attached thanks zahra ,0

482,6971598878.0,IssuesEvent,2017-12-11 14:33:06,edenhill/librdkafka,https://api.github.com/repos/edenhill/librdkafka,closed,segfault with latest kafkacat/librdkafka and Kafka 0.11.0,bug portability,"I have built the latest `kafkacat`/`librdkafka` using kafkacat's `bootstrap.sh`.

I got:

```

Version 1.3.1-13-ga6b599 (JSON) (librdkafka 0.11.0-RC1 builtin.features=gzip,snappy,ssl,sasl,regex,lz4,sasl_gssapi,sasl_plain,sasl_scram,plugins)

```

I am using it to contact Kafka brokers using Kerberos and SSL, with the -L option.

For brokers running 0.10.2, `kafkacat` works perfectly well, all the time.

Simply changing the broker queried to one running Kafka 0.11.0, I get segfault most of the time (more than 9 times out of 10).",True,"segfault with latest kafkacat/librdkafka and Kafka 0.11.0 - I have built the latest `kafkacat`/`librdkafka` using kafkacat's `bootstrap.sh`.

I got:

```

Version 1.3.1-13-ga6b599 (JSON) (librdkafka 0.11.0-RC1 builtin.features=gzip,snappy,ssl,sasl,regex,lz4,sasl_gssapi,sasl_plain,sasl_scram,plugins)

```

I am using it to contact Kafka brokers using Kerberos and SSL, with the -L option.

For brokers running 0.10.2, `kafkacat` works perfectly well, all the time.

Simply changing the broker queried to one running Kafka 0.11.0, I get segfault most of the time (more than 9 times out of 10).",1,segfault with latest kafkacat librdkafka and kafka i have built the latest kafkacat librdkafka using kafkacat s bootstrap sh i got version json librdkafka builtin features gzip snappy ssl sasl regex sasl gssapi sasl plain sasl scram plugins i am using it contact kafka brokers using kerberos and ssl with the l option for brokers running kafkacat works perfectly well all the time simply changing the broker queried to one running kafka i get segfault most of the time more than times out of ,1

27406,21698978381.0,IssuesEvent,2022-05-10 00:24:38,celeritas-project/celeritas,https://api.github.com/repos/celeritas-project/celeritas,closed,Prototype performance portability,infrastructure,Do an initial port of enough core Celeritas components to at least run some demo applications on non-CUDA hardware.,1.0,Prototype performance portability - Do an initial port of enough core Celeritas components to at least run some demo applications on non-CUDA hardware.,0,prototype performance portability do an initial port of enough core celeritas components to at least run some demo applications on non cuda hardware ,0

1534,22157283056.0,IssuesEvent,2022-06-04 01:52:53,apache/beam,https://api.github.com/repos/apache/beam,opened,Convert external transforms to use StringUtf8Coder instead of ByteArrayCoder,portability P3 improvement sdk-java-core clarified sdk-py-core io-java-kafka,"We currently encode Strings using implicit UTF8 byte arrays. Now, we could use the newly introduced StringUtf8Coder ModelCoder.

Imported from Jira [BEAM-7244](https://issues.apache.org/jira/browse/BEAM-7244). Original Jira may contain additional context.

Reported by: mxm.",True,"Convert external transforms to use StringUtf8Coder instead of ByteArrayCoder - We currently encode Strings using implicit UTF8 byte arrays. Now, we could use the newly introduced StringUtf8Coder ModelCoder.

Imported from Jira [BEAM-7244](https://issues.apache.org/jira/browse/BEAM-7244). Original Jira may contain additional context.

Reported by: mxm.",1,convert external transforms to use instead of bytearraycoder we currently encode strings using implicit byte arrays now we could use the newly introduced modelcoder imported from jira original jira may contain additional context reported by mxm ,1