Commit 546be9b (0 parents)

Sync: Merge pull request #108 from seanpedrick-case/dev

Can now save all output files to a specified S3 bucket and folder. Ad…

This view is limited to 50 files because it contains too many changes.


- .coveragerc +56 -0

- .dockerignore +38 -0

- .gitattributes +8 -0

- .github/scripts/setup_test_data.py +311 -0

- .github/workflow_README.md +183 -0

- .github/workflows/archive_workflows/multi-os-test.yml +109 -0

- .github/workflows/ci.yml +260 -0

- .github/workflows/simple-test.yml +67 -0

- .github/workflows/sync_to_hf.yml +53 -0

- .github/workflows/sync_to_hf_zero_gpu.yml +53 -0

- .gitignore +41 -0

- DocRedactApp.spec +66 -0

- Dockerfile +186 -0

- README.md +1261 -0

- _quarto.yml +28 -0

- app.py +0 -0

- cdk/__init__.py +0 -0

- cdk/app.py +83 -0

- cdk/cdk_config.py +362 -0

- cdk/cdk_functions.py +1482 -0

- cdk/cdk_stack.py +1869 -0

- cdk/check_resources.py +375 -0

- cdk/post_cdk_build_quickstart.py +40 -0

- cdk/requirements.txt +5 -0

- cli_redact.py +1431 -0

- entrypoint.sh +33 -0

- example_config.env +49 -0

- example_data/Bold minimalist professional cover letter.docx +3 -0

- example_data/Difficult handwritten note.jpg +3 -0

- example_data/Example-cv-university-graduaty-hr-role-with-photo-2.pdf +3 -0

- example_data/Lambeth_2030-Our_Future_Our_Lambeth.pdf.csv +0 -0

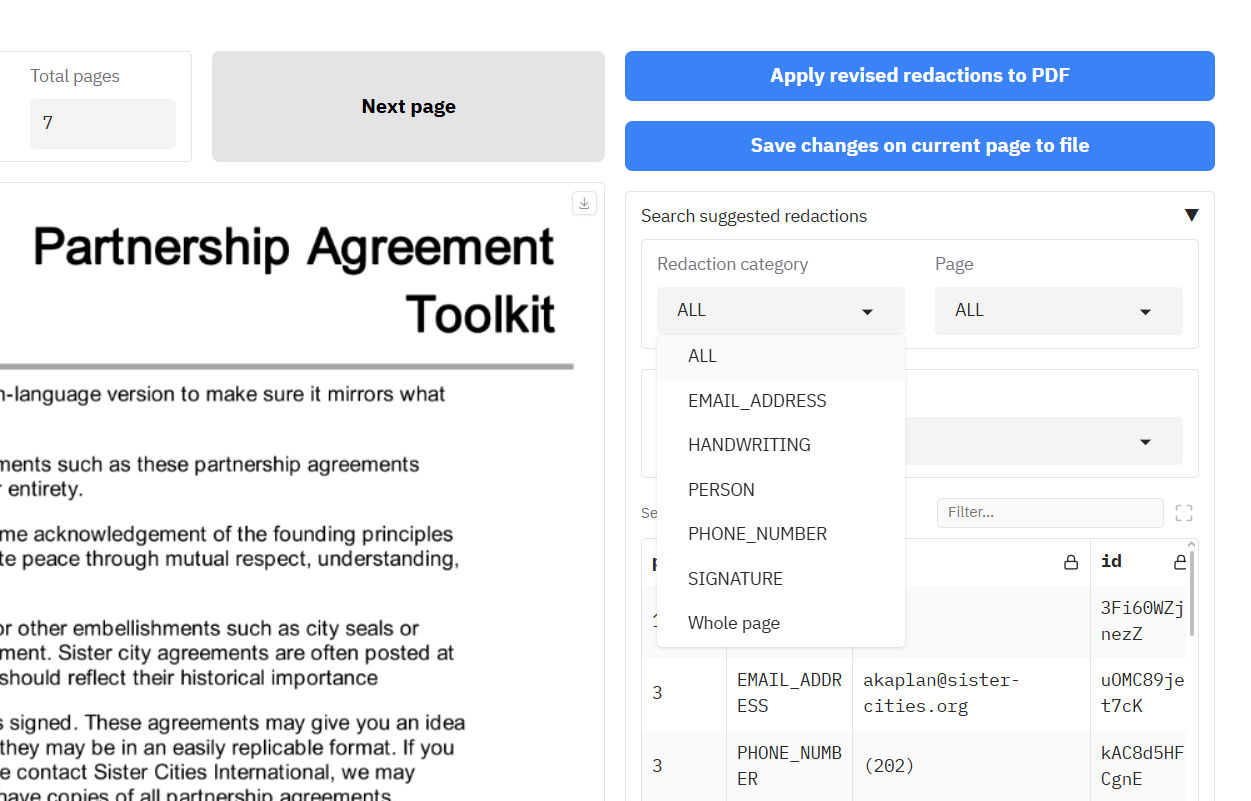

- example_data/Partnership-Agreement-Toolkit_0_0.pdf +3 -0

- example_data/Partnership-Agreement-Toolkit_test_deny_list_para_single_spell.csv +2 -0

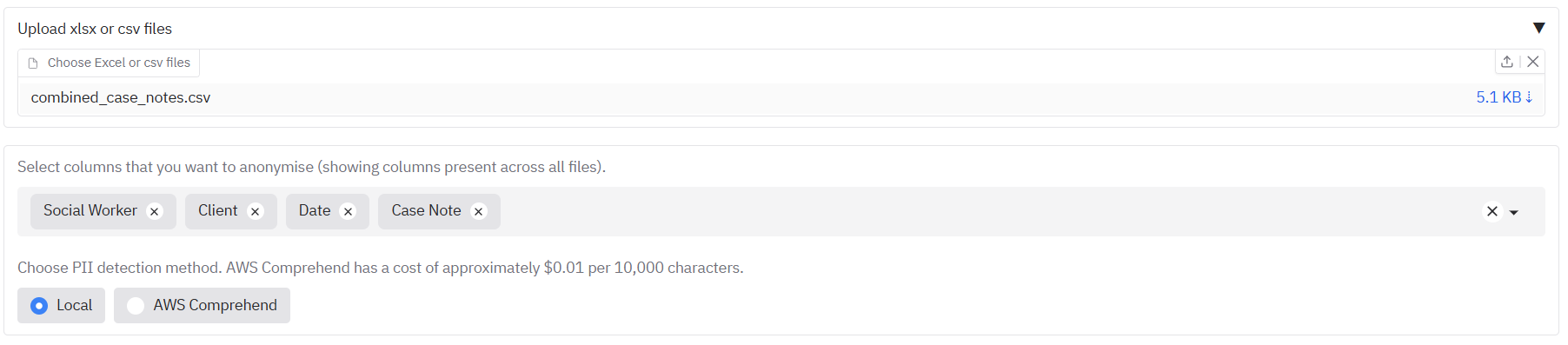

- example_data/combined_case_notes.csv +19 -0

- example_data/combined_case_notes.xlsx +3 -0

- example_data/doubled_output_joined.pdf +3 -0

- example_data/example_complaint_letter.jpg +3 -0

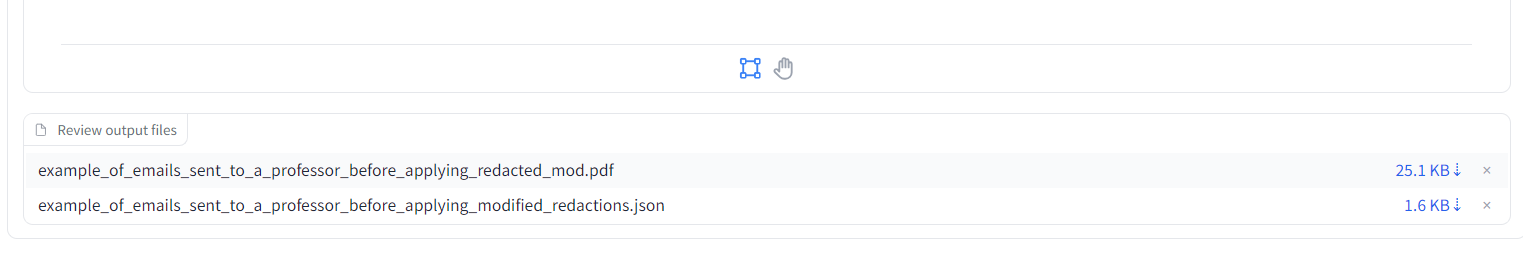

- example_data/example_of_emails_sent_to_a_professor_before_applying.pdf +3 -0

- example_data/example_outputs/Partnership-Agreement-Toolkit_0_0.pdf_ocr_output.csv +277 -0

- example_data/example_outputs/Partnership-Agreement-Toolkit_0_0.pdf_review_file.csv +77 -0

- example_data/example_outputs/Partnership-Agreement-Toolkit_0_0_ocr_results_with_words_textract.csv +0 -0

- example_data/example_outputs/doubled_output_joined.pdf_ocr_output.csv +923 -0

- example_data/example_outputs/example_of_emails_sent_to_a_professor_before_applying_ocr_output_textract.csv +40 -0

- example_data/example_outputs/example_of_emails_sent_to_a_professor_before_applying_ocr_results_with_words_textract.csv +432 -0

- example_data/example_outputs/example_of_emails_sent_to_a_professor_before_applying_review_file.csv +15 -0

- example_data/graduate-job-example-cover-letter.pdf +3 -0

- example_data/partnership_toolkit_redact_custom_deny_list.csv +2 -0

- example_data/partnership_toolkit_redact_some_pages.csv +2 -0

- example_data/test_allow_list_graduate.csv +1 -0

- example_data/test_allow_list_partnership.csv +1 -0

.coveragerc

ADDED

@@ -0,0 +1,56 @@
+[run]
+source = .
+omit =
+    */tests/*
+    */test/*
+    */__pycache__/*
+    */venv/*
+    */env/*
+    */build/*
+    */dist/*
+    */cdk/*
+    */docs/*
+    */example_data/*
+    */examples/*
+    */feedback/*
+    */logs/*
+    */old_code/*
+    */output/*
+    */tmp/*
+    */usage/*
+    */tld/*
+    */tesseract/*
+    */poppler/*
+    config*.py
+    setup.py
+    lambda_entrypoint.py
+    entrypoint.sh
+    cli_redact.py
+    load_dynamo_logs.py
+    load_s3_logs.py
+    *.spec
+    Dockerfile
+    *.qmd
+    *.md
+    *.txt
+    *.yml
+    *.yaml
+    *.json
+    *.csv
+    *.env
+    *.bat
+    *.ps1
+    *.sh
+
+[report]
+exclude_lines =
+    pragma: no cover
+    def __repr__
+    if self.debug:
+    if settings.DEBUG
+    raise AssertionError
+    raise NotImplementedError
+    if 0:
+    if __name__ == .__main__.:
+    class .*\bProtocol\):
+    @(abc\.)?abstractmethod
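The `exclude_lines` entries in `.coveragerc` are regular expressions, which is why `if __name__ == .__main__.:` has a `.` where the quote characters would be: the dot matches either quote style. A minimal sketch of that matching, assuming coverage.py's documented behaviour of excluding any source line in which one of the patterns matches. The helper name `is_excluded` is hypothetical and not part of coverage.py, and only a subset of the patterns is shown:

```python
import re

# A subset of the patterns from the [report] exclude_lines section.
EXCLUDE_PATTERNS = [
    r"pragma: no cover",
    r"def __repr__",
    r"if __name__ == .__main__.:",
    r"class .*\bProtocol\):",
    r"@(abc\.)?abstractmethod",
]


def is_excluded(line: str) -> bool:
    """Return True if any exclusion regex matches somewhere in the line.

    Hypothetical helper for illustration; coverage.py does its own matching.
    """
    return any(re.search(pattern, line) for pattern in EXCLUDE_PATTERNS)
```

For instance, `is_excluded('if __name__ == "__main__":')` and `is_excluded("    @abc.abstractmethod")` both match, while an ordinary statement such as `x = compute()` does not.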

.dockerignore

ADDED

@@ -0,0 +1,38 @@
+*.url
+*.ipynb
+*.pyc
+examples/*
+processing/*
+tools/__pycache__/*
+old_code/*
+tesseract/*
+poppler/*
+build/*
+dist/*
+docs/*
+build_deps/*
+user_guide/*
+cdk/config/*
+tld/*
+cdk/config/*
+cdk/cdk.out/*
+cdk/archive/*
+cdk.json
+cdk.context.json
+.quarto/*
+logs/
+output/
+input/
+feedback/
+config/
+usage/
+test/config/*
+test/feedback/*
+test/input/*
+test/logs/*
+test/output/*
+test/tmp/*
+test/usage/*
+.ruff_cache/*
+model_cache/*
+sanitized_file/*

.gitattributes

ADDED

@@ -0,0 +1,8 @@
+*.pdf filter=lfs diff=lfs merge=lfs -text
+*.jpg filter=lfs diff=lfs merge=lfs -text
+*.xls filter=lfs diff=lfs merge=lfs -text
+*.xlsx filter=lfs diff=lfs merge=lfs -text
+*.docx filter=lfs diff=lfs merge=lfs -text
+*.doc filter=lfs diff=lfs merge=lfs -text
+*.png filter=lfs diff=lfs merge=lfs -text
+*.ico filter=lfs diff=lfs merge=lfs -text
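The `filter=lfs` rules mean that matching files are committed as small text pointers rather than as their binary content. That would explain why the PDF, JPG, DOCX and XLSX entries in the file list each show `+3 -0`: a Git LFS pointer is three lines long. A minimal sketch of the pointer layout, assuming version 1 of the Git LFS pointer format. The function name `lfs_pointer` is hypothetical and is not how Git LFS itself is invoked:

```python
import hashlib


def lfs_pointer(content: bytes) -> str:
    """Build the text of a Git LFS (spec v1) pointer for the given bytes.

    Hypothetical helper for illustration only.
    """
    oid = hashlib.sha256(content).hexdigest()
    return (
        "version https://git-lfs.github.com/spec/v1\n"
        f"oid sha256:{oid}\n"
        f"size {len(content)}\n"
    )
```

Whatever the size of the tracked file, the pointer always has these three lines, so the diff only ever records three added lines for it.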

.github/scripts/setup_test_data.py

ADDED

@@ -0,0 +1,311 @@
+#!/usr/bin/env python3
+"""
+Setup script for GitHub Actions test data.
+Creates dummy test files when example data is not available.
+"""
+
+import os
+import sys
+
+import pandas as pd
+
+
+def create_directories():
+    """Create necessary directories."""
+    dirs = ["example_data", "example_data/example_outputs"]
+
+    for dir_path in dirs:
+        os.makedirs(dir_path, exist_ok=True)
+        print(f"Created directory: {dir_path}")
+
+
+def create_dummy_pdf():
+    """Create dummy PDFs for testing."""
+
+    # Install reportlab if not available
+    try:
+        from reportlab.lib.pagesizes import letter
+        from reportlab.pdfgen import canvas
+    except ImportError:
+        import subprocess
+
+        subprocess.check_call(["pip", "install", "reportlab"])
+        from reportlab.lib.pagesizes import letter
+        from reportlab.pdfgen import canvas
+
+    try:
+        # Create the main test PDF
+        pdf_path = (
+            "example_data/example_of_emails_sent_to_a_professor_before_applying.pdf"
+        )
+        print(f"Creating PDF: {pdf_path}")
+        print(f"Directory exists: {os.path.exists('example_data')}")
+
+        c = canvas.Canvas(pdf_path, pagesize=letter)
+        c.drawString(100, 750, "This is a test document for redaction testing.")
+        c.drawString(100, 700, "Email: test@example.com")
+        c.drawString(100, 650, "Phone: 123-456-7890")
+        c.drawString(100, 600, "Name: John Doe")
+        c.drawString(100, 550, "Address: 123 Test Street, Test City, TC 12345")
+        c.showPage()
+
+        # Add second page
+        c.drawString(100, 750, "Second page content")
+        c.drawString(100, 700, "More test data: jane.doe@example.com")
+        c.drawString(100, 650, "Another phone: 987-654-3210")
+        c.save()
+
+        print(f"Created dummy PDF: {pdf_path}")
+
+        # Create Partnership Agreement Toolkit PDF
+        partnership_pdf_path = "example_data/Partnership-Agreement-Toolkit_0_0.pdf"
+        print(f"Creating PDF: {partnership_pdf_path}")
+        c = canvas.Canvas(partnership_pdf_path, pagesize=letter)
+        c.drawString(100, 750, "Partnership Agreement Toolkit")
+        c.drawString(100, 700, "This is a test partnership agreement document.")
+        c.drawString(100, 650, "Contact: partnership@example.com")
+        c.drawString(100, 600, "Phone: (555) 123-4567")
+        c.drawString(100, 550, "Address: 123 Partnership Street, City, State 12345")
+        c.showPage()
+
+        # Add second page
+        c.drawString(100, 750, "Page 2 - Partnership Details")
+        c.drawString(100, 700, "More partnership information here.")
+        c.drawString(100, 650, "Contact: info@partnership.org")
+        c.showPage()
+
+        # Add third page
+        c.drawString(100, 750, "Page 3 - Terms and Conditions")
+        c.drawString(100, 700, "Terms and conditions content.")
+        c.drawString(100, 650, "Legal contact: legal@partnership.org")
+        c.save()
+
+        print(f"Created dummy PDF: {partnership_pdf_path}")
+
+        # Create Graduate Job Cover Letter PDF
+        cover_letter_pdf_path = "example_data/graduate-job-example-cover-letter.pdf"
+        print(f"Creating PDF: {cover_letter_pdf_path}")
+        c = canvas.Canvas(cover_letter_pdf_path, pagesize=letter)
+        c.drawString(100, 750, "Cover Letter Example")
+        c.drawString(100, 700, "Dear Hiring Manager,")
+        c.drawString(100, 650, "I am writing to apply for the position.")
+        c.drawString(100, 600, "Contact: applicant@example.com")
+        c.drawString(100, 550, "Phone: (555) 987-6543")
+        c.drawString(100, 500, "Address: 456 Job Street, Employment City, EC 54321")
+        c.drawString(100, 450, "Sincerely,")
+        c.drawString(100, 400, "John Applicant")
+        c.save()
+
+        print(f"Created dummy PDF: {cover_letter_pdf_path}")
+
+    except ImportError:
+        print("ReportLab not available, skipping PDF creation")
+        # Create simple text files instead
+        with open(
+            "example_data/example_of_emails_sent_to_a_professor_before_applying.pdf",
+            "w",
+        ) as f:
+            f.write("This is a dummy PDF file for testing")
+
+        with open(
+            "example_data/Partnership-Agreement-Toolkit_0_0.pdf",
+            "w",
+        ) as f:
+            f.write("This is a dummy Partnership Agreement PDF file for testing")
+
+        with open(
+            "example_data/graduate-job-example-cover-letter.pdf",
+            "w",
+        ) as f:
+            f.write("This is a dummy cover letter PDF file for testing")
+
+        print("Created dummy text files instead of PDFs")
+
+
+def create_dummy_csv():
+    """Create dummy CSV files for testing."""
+    # Main CSV
+    csv_data = {
+        "Case Note": [
+            "Client visited for consultation regarding housing issues",
+            "Follow-up appointment scheduled for next week",
+            "Documentation submitted for review",
+        ],
+        "Client": ["John Smith", "Jane Doe", "Bob Johnson"],
+        "Date": ["2024-01-15", "2024-01-16", "2024-01-17"],
+    }
+    df = pd.DataFrame(csv_data)
+    df.to_csv("example_data/combined_case_notes.csv", index=False)
+    print("Created dummy CSV: example_data/combined_case_notes.csv")
+
+    # Lambeth CSV
+    lambeth_data = {
+        "text": [
+            "Lambeth 2030 vision document content",
+            "Our Future Our Lambeth strategic plan",
+            "Community engagement and development",
+        ],
+        "page": [1, 2, 3],
+    }
+    df_lambeth = pd.DataFrame(lambeth_data)
+    df_lambeth.to_csv(
+        "example_data/Lambeth_2030-Our_Future_Our_Lambeth.pdf.csv", index=False
+    )
+    print("Created dummy CSV: example_data/Lambeth_2030-Our_Future_Our_Lambeth.pdf.csv")
+
+
+def create_dummy_word_doc():
+    """Create dummy Word document."""
+    try:
+        from docx import Document
+
+        doc = Document()
+        doc.add_heading("Test Document for Redaction", 0)
+        doc.add_paragraph("This is a test document for redaction testing.")
+        doc.add_paragraph("Contact Information:")
+        doc.add_paragraph("Email: test@example.com")
+        doc.add_paragraph("Phone: 123-456-7890")
+        doc.add_paragraph("Name: John Doe")
+        doc.add_paragraph("Address: 123 Test Street, Test City, TC 12345")
+
+        doc.save("example_data/Bold minimalist professional cover letter.docx")
+        print("Created dummy Word document")
+
+    except ImportError:
+        print("python-docx not available, skipping Word document creation")
+
+
+def create_allow_deny_lists():
+    """Create dummy allow/deny lists."""
+    # Allow lists
+    allow_data = {"word": ["test", "example", "document"]}
+    pd.DataFrame(allow_data).to_csv(
+        "example_data/test_allow_list_graduate.csv", index=False
+    )
+    pd.DataFrame(allow_data).to_csv(
+        "example_data/test_allow_list_partnership.csv", index=False
+    )
+    print("Created allow lists")
+
+    # Deny lists
+    deny_data = {"word": ["sensitive", "confidential", "private"]}
+    pd.DataFrame(deny_data).to_csv(
+        "example_data/partnership_toolkit_redact_custom_deny_list.csv", index=False
+    )
+    pd.DataFrame(deny_data).to_csv(
+        "example_data/Partnership-Agreement-Toolkit_test_deny_list_para_single_spell.csv",
+        index=False,
+    )
+    print("Created deny lists")
+
+    # Whole page redaction list
+    page_data = {"page": [1, 2]}
+    pd.DataFrame(page_data).to_csv(
+        "example_data/partnership_toolkit_redact_some_pages.csv", index=False
+    )
+    print("Created whole page redaction list")
+
+
+def create_ocr_output():
+    """Create dummy OCR output CSV."""
+    ocr_data = {
+        "page": [1, 2, 3],
+        "text": [
+            "This is page 1 content with some text",
+            "This is page 2 content with different text",
+            "This is page 3 content with more text",
+        ],
+        "left": [0.1, 0.3, 0.5],
+        "top": [0.95, 0.92, 0.88],
+        "width": [0.05, 0.02, 0.02],
+        "height": [0.01, 0.02, 0.02],
+        "line": [1, 2, 3],
+    }
+    df = pd.DataFrame(ocr_data)
+    df.to_csv(
+        "example_data/example_outputs/doubled_output_joined.pdf_ocr_output.csv",
+        index=False,
+    )
+    print("Created dummy OCR output CSV")
+
+
+def create_dummy_image():
+    """Create dummy image for testing."""
+    try:
+        from PIL import Image, ImageDraw, ImageFont
+
+        img = Image.new("RGB", (800, 600), color="white")
+        draw = ImageDraw.Draw(img)
+
+        # Try to use a system font
+        try:
+            font = ImageFont.truetype(
+                "/usr/share/fonts/truetype/dejavu/DejaVuSans.ttf", 20
+            )
+        except Exception as e:
+            print(f"Error loading DejaVuSans font: {e}")
+            try:
+                font = ImageFont.truetype("/System/Library/Fonts/Arial.ttf", 20)
+            except Exception as e:
+                print(f"Error loading Arial font: {e}")
+                font = ImageFont.load_default()
+
+        # Add text to image
+        draw.text((50, 50), "Test Document for Redaction", fill="black", font=font)
+        draw.text((50, 100), "Email: test@example.com", fill="black", font=font)
+        draw.text((50, 150), "Phone: 123-456-7890", fill="black", font=font)
+        draw.text((50, 200), "Name: John Doe", fill="black", font=font)
+        draw.text((50, 250), "Address: 123 Test Street", fill="black", font=font)
+
+        img.save("example_data/example_complaint_letter.jpg")
+        print("Created dummy image")
+
+    except ImportError:
+        print("PIL not available, skipping image creation")
+
+
+def main():
+    """Main setup function."""
+    print("Setting up test data for GitHub Actions...")
+    print(f"Current working directory: {os.getcwd()}")
+    print(f"Python version: {sys.version}")
+
+    create_directories()
+    create_dummy_pdf()
+    create_dummy_csv()
+    create_dummy_word_doc()
+    create_allow_deny_lists()
+    create_ocr_output()
+    create_dummy_image()
+
+    print("\nTest data setup complete!")
+    print("Created files:")
+    for root, dirs, files in os.walk("example_data"):
+        for file in files:
+            file_path = os.path.join(root, file)
+            print(f" {file_path}")
+            # Verify the file exists and has content
+            if os.path.exists(file_path):
+                file_size = os.path.getsize(file_path)
+                print(f" Size: {file_size} bytes")
+            else:
+                print(" WARNING: File does not exist!")
+
+    # Verify critical files exist
+    critical_files = [
+        "example_data/Partnership-Agreement-Toolkit_0_0.pdf",
+        "example_data/graduate-job-example-cover-letter.pdf",
+        "example_data/example_of_emails_sent_to_a_professor_before_applying.pdf",
+    ]
+
+    print("\nVerifying critical test files:")
+    for file_path in critical_files:
+        if os.path.exists(file_path):
+            file_size = os.path.getsize(file_path)
+            print(f"✅ {file_path} exists ({file_size} bytes)")
+        else:
+            print(f"❌ {file_path} MISSING!")
+
+
+if __name__ == "__main__":
+    main()
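The final verification in `main()` in `setup_test_data.py` only checks that each critical path exists and reports its size. If the text-file fallback in `create_dummy_pdf()` is ever taken, that check still passes even though the `.pdf` files contain plain text. A minimal sketch of a stricter check, assuming that a real PDF begins with the bytes `%PDF-`. The helper name `looks_like_pdf` is hypothetical and is not part of the script in this commit:

```python
from pathlib import Path


def looks_like_pdf(path: str) -> bool:
    """Return True if the file exists and starts with the PDF magic bytes.

    Hypothetical helper for illustration; not part of setup_test_data.py.
    """
    file_path = Path(path)
    if not file_path.is_file():
        return False
    with file_path.open("rb") as handle:
        return handle.read(5) == b"%PDF-"
```

Used in place of `os.path.exists` in the critical-file loop, this would flag a text placeholder as missing rather than reporting it as a valid test PDF.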

.github/workflow_README.md

ADDED

|

@@ -0,0 +1,183 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# GitHub Actions CI/CD Setup

|

| 2 |

+

|

| 3 |

+

This directory contains GitHub Actions workflows for automated testing of the CLI redaction application.

|

| 4 |

+

|

| 5 |

+

## Workflows Overview

|

| 6 |

+

|

| 7 |

+

### 1. **Simple Test Run** (`.github/workflows/simple-test.yml`)

|

| 8 |

+

- **Purpose**: Basic test execution

|

| 9 |

+

- **Triggers**: Push to main/dev, Pull requests

|

| 10 |

+

- **OS**: Ubuntu Latest

|

| 11 |

+

- **Python**: 3.11

|

| 12 |

+

- **Features**:

|

| 13 |

+

- Installs system dependencies

|

| 14 |

+

- Sets up test data

|

| 15 |

+

- Runs CLI tests

|

| 16 |

+

- Runs pytest

|

| 17 |

+

|

| 18 |

+

### 2. **Comprehensive CI/CD** (`.github/workflows/ci.yml`)

|

| 19 |

+

- **Purpose**: Full CI/CD pipeline

|

| 20 |

+

- **Features**:

|

| 21 |

+

- Linting (Ruff, Black)

|

| 22 |

+

- Unit tests (Python 3.10, 3.11, 3.12)

|

| 23 |

+

- Integration tests

|

| 24 |

+

- Security scanning (Safety, Bandit)

|

| 25 |

+

- Coverage reporting

|

| 26 |

+

- Package building (on main branch)

|

| 27 |

+

|

| 28 |

+

### 3. **Multi-OS Testing** (`.github/workflows/multi-os-test.yml`)

|

| 29 |

+

- **Purpose**: Cross-platform testing

|

| 30 |

+

- **OS**: Ubuntu, macOS (Windows not included currently but may be reintroduced)

|

| 31 |

+

- **Python**: 3.10, 3.11, 3.12

|

| 32 |

+

- **Features**: Tests compatibility across different operating systems

|

| 33 |

+

|

| 34 |

+

### 4. **Basic Test Suite** (`.github/workflows/test.yml`)

|

| 35 |

+

- **Purpose**: Original test workflow

|

| 36 |

+

- **Features**:

|

| 37 |

+

- Multiple Python versions

|

| 38 |

+

- System dependency installation

|

| 39 |

+

- Test data creation

|

| 40 |

+

- Coverage reporting

|

| 41 |

+

|

| 42 |

+

## Setup Scripts

|

| 43 |

+

|

| 44 |

+

### Test Data Setup (`.github/scripts/setup_test_data.py`)

|

| 45 |

+

Creates dummy test files when example data is not available:

|

| 46 |

+

- PDF documents

|

| 47 |

+

- CSV files

|

| 48 |

+

- Word documents

|

| 49 |

+

- Images

|

| 50 |

+

- Allow/deny lists

|

| 51 |

+

- OCR output files

|

| 52 |

+

|

| 53 |

+

## Usage

|

| 54 |

+

|

| 55 |

+

### Running Tests Locally

|

| 56 |

+

|

| 57 |

+

```bash

|

| 58 |

+

# Install dependencies

|

| 59 |

+

pip install -r requirements.txt

|

| 60 |

+

pip install pytest pytest-cov

|

| 61 |

+

|

| 62 |

+

# Setup test data

|

| 63 |

+

python .github/scripts/setup_test_data.py

|

| 64 |

+

|

| 65 |

+

# Run tests

|

| 66 |

+

cd test

|

| 67 |

+

python test.py

|

| 68 |

+

```

|

| 69 |

+

|

| 70 |

+

### GitHub Actions Triggers

|

| 71 |

+

|

| 72 |

+

1. **Push to main/dev**: Runs all tests

|

| 73 |

+

2. **Pull Request**: Runs tests and linting

|

| 74 |

+

3. **Daily Schedule**: Runs tests at 2 AM UTC

|

| 75 |

+

4. **Manual Trigger**: Can be triggered manually from GitHub

|

| 76 |

+

|

| 77 |

+

## Configuration

|

| 78 |

+

|

| 79 |

+

### Environment Variables

|

| 80 |

+

- `PYTHON_VERSION`: Default Python version (3.11)

|

| 81 |

+

- `PYTHONPATH`: Set automatically for test discovery

|

| 82 |

+

|

| 83 |

+

### Caching

|

| 84 |

+

- Pip dependencies are cached for faster builds

|

| 85 |

+

- Cache key based on requirements.txt hash

|

| 86 |

+

|

| 87 |

+

### Artifacts

|

| 88 |

+

- Test results (JUnit XML)

|

| 89 |

+

- Coverage reports (HTML, XML)

|

| 90 |

+

- Security reports

|

| 91 |

+

- Build artifacts (on main branch)

|

| 92 |

+

|

| 93 |

+

## Test Data

|

| 94 |

+

|

| 95 |

+

The workflows automatically create test data when example files are missing:

|

| 96 |

+

|

| 97 |

+

### Required Files Created:

|

| 98 |

+

- `example_data/example_of_emails_sent_to_a_professor_before_applying.pdf`

|

| 99 |

+

- `example_data/combined_case_notes.csv`

|

| 100 |

+

- `example_data/Bold minimalist professional cover letter.docx`

|

| 101 |

+

- `example_data/example_complaint_letter.jpg`

|

| 102 |

+

- `example_data/test_allow_list_*.csv`

|

| 103 |

+

- `example_data/partnership_toolkit_redact_*.csv`

|

| 104 |

+

- `example_data/example_outputs/doubled_output_joined.pdf_ocr_output.csv`

|

| 105 |

+

|

| 106 |

+

### Dependencies Installed:

|

| 107 |

+

- **System**: tesseract-ocr, poppler-utils, OpenGL libraries

|

| 108 |

+

- **Python**: All requirements.txt packages + pytest, reportlab, pillow

|

| 109 |

+

|

| 110 |

+

## Workflow Status

|

| 111 |

+

|

| 112 |

+

### Success Criteria:

|

| 113 |

+

- ✅ All tests pass

|

| 114 |

+

- ✅ No linting errors

|

| 115 |

+

- ✅ Security checks pass

|

| 116 |

+

- ✅ Coverage meets threshold (if configured)

|

| 117 |

+

|

| 118 |

+

### Failure Handling:

|

| 119 |

+

- Tests are designed to skip gracefully if files are missing

|

| 120 |

+

- AWS tests are expected to fail without credentials

|

| 121 |

+

- System dependency failures are handled with fallbacks

|

| 122 |

+

|

| 123 |

+

## Customization

|

| 124 |

+

|

| 125 |

+

### Adding New Tests:

|

| 126 |

+

1. Add test methods to `test/test.py`

|

| 127 |

+

2. Update test data in `setup_test_data.py` if needed

|

| 128 |

+

3. Tests will automatically run in all workflows

|

| 129 |

+

|

| 130 |

+

### Modifying Workflows:

|

| 131 |

+

1. Edit the appropriate `.yml` file

|

| 132 |

+

2. Test locally first

|

| 133 |

+

3. Push to trigger the workflow

|

| 134 |

+

|

| 135 |

+

### Environment-Specific Settings:

|

| 136 |

+

- **Ubuntu**: Full system dependencies

|

| 137 |

+

- **Windows**: Python packages only

|

| 138 |

+

- **macOS**: Homebrew dependencies

|

| 139 |

+

|

| 140 |

+

## Troubleshooting

### Common Issues:

1. **Missing Dependencies**:
   - Check system dependency installation
   - Verify Python package versions

2. **Test Failures**:
   - Check test data creation
   - Verify file paths
   - Review test output logs

3. **AWS Test Failures**:
   - Expected without credentials
   - Tests are designed to handle this gracefully

4. **System Dependency Issues**:
   - Different operating systems have different requirements
   - Check the specific OS section in workflows
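When checking system dependency installation, a small script can report which executables are missing from `PATH`. This is a minimal sketch: the tool names `tesseract` and `pdftoppm` are the ones the workflows verify after installation, while `REQUIRED_TOOLS` and `find_missing_tools` are hypothetical names introduced here.

```python
import shutil

# Executables the workflows install (Tesseract OCR and Poppler's pdftoppm).
REQUIRED_TOOLS = ("tesseract", "pdftoppm")


def find_missing_tools(tools=REQUIRED_TOOLS, which=shutil.which):
    """Return the tools that cannot be found on PATH."""
    return [tool for tool in tools if which(tool) is None]


if __name__ == "__main__":
    missing = find_missing_tools()
    if missing:
        print("Missing system dependencies:", ", ".join(missing))
    else:
        print("All system dependencies found on PATH")
```

The `which` parameter exists only so the lookup can be replaced in tests; in normal use the default `shutil.which` is enough.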

### Debug Mode:

Add `--verbose` or `-v` flags to pytest commands for more detailed output.
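One way to apply the verbose flag consistently is to build the pytest command in a helper. The sketch below assumes the tests live in `test/test.py` and that pytest is installed; `build_pytest_command` and `run_tests` are hypothetical helper names, not part of the repository.

```python
import subprocess
import sys


def build_pytest_command(test_path="test/test.py", verbose=True, short_traceback=True):
    """Build a pytest invocation as an argument list."""
    command = [sys.executable, "-m", "pytest", test_path]
    if verbose:
        command.append("-v")  # one line per test instead of dots
    if short_traceback:
        command.append("--tb=short")  # shorter traceback format
    return command


def run_tests(**kwargs):
    """Run pytest in a subprocess and return its exit code."""
    return subprocess.run(build_pytest_command(**kwargs), check=False).returncode
```

Running through `sys.executable -m pytest` uses the same interpreter as the calling script, which avoids picking up a pytest from a different environment.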

## Security

- Dependencies are scanned with Safety
- Code is scanned with Bandit
- No secrets are exposed in logs
- Test data is temporary and cleaned up

## Performance

- Tests run in parallel where possible
- Dependencies are cached
- Only necessary system packages are installed
- Test data is created efficiently

## Monitoring

- Workflow status is visible in the GitHub Actions tab
- Coverage reports are uploaded to Codecov
- Test results are available as artifacts
- Security reports are generated and stored

.github/workflows/archive_workflows/multi-os-test.yml

ADDED

@@ -0,0 +1,109 @@

name: Multi-OS Test

on:
  push:
    branches: [ main ]
  pull_request:
    branches: [ main ]

permissions:
  contents: read
  actions: read

jobs:
  test:
    runs-on: ${{ matrix.os }}
    strategy:
      matrix:
        os: [ubuntu-latest, macos-latest] # windows-latest, not included as tesseract cannot be installed silently
        python-version: ["3.11", "3.12", "3.13"]
        exclude:
          # Exclude some combinations to reduce CI time
          #- os: windows-latest
          # python-version: ["3.12", "3.13"]
          - os: macos-latest
            python-version: ["3.12", "3.13"]

    steps:
      - uses: actions/checkout@v4

      - name: Set up Python ${{ matrix.python-version }}
        uses: actions/setup-python@v4
        with:
          python-version: ${{ matrix.python-version }}

      - name: Install system dependencies (Ubuntu)
        if: matrix.os == 'ubuntu-latest'
        run: |
          sudo apt-get update
          sudo apt-get install -y \
            tesseract-ocr \
            tesseract-ocr-eng \
            poppler-utils \
            libgl1-mesa-dri \
            libglib2.0-0

      - name: Install system dependencies (macOS)
        if: matrix.os == 'macos-latest'
        run: |
          brew install tesseract poppler

      - name: Install system dependencies (Windows)
        if: matrix.os == 'windows-latest'
        run: |
          # Create tools directory
          if (!(Test-Path "C:\tools")) {
            mkdir C:\tools
          }

          # Download and install Tesseract
          $tesseractUrl = "https://github.com/tesseract-ocr/tesseract/releases/download/5.5.0/tesseract-ocr-w64-setup-5.5.0.20241111.exe"
          $tesseractInstaller = "C:\tools\tesseract-installer.exe"
          Invoke-WebRequest -Uri $tesseractUrl -OutFile $tesseractInstaller

          # Install Tesseract silently
          Start-Process -FilePath $tesseractInstaller -ArgumentList "/S", "/D=C:\tools\tesseract" -Wait

          # Download and extract Poppler
          $popplerUrl = "https://github.com/oschwartz10612/poppler-windows/releases/download/v25.07.0-0/Release-25.07.0-0.zip"
          $popplerZip = "C:\tools\poppler.zip"
          Invoke-WebRequest -Uri $popplerUrl -OutFile $popplerZip

          # Extract Poppler
          Expand-Archive -Path $popplerZip -DestinationPath C:\tools\poppler -Force

          # Add to PATH
          echo "C:\tools\tesseract" >> $env:GITHUB_PATH
          echo "C:\tools\poppler\poppler-25.07.0\Library\bin" >> $env:GITHUB_PATH

          # Set environment variables for your application
          echo "TESSERACT_FOLDER=C:\tools\tesseract" >> $env:GITHUB_ENV
          echo "POPPLER_FOLDER=C:\tools\poppler\poppler-25.07.0\Library\bin" >> $env:GITHUB_ENV
          echo "TESSERACT_DATA_FOLDER=C:\tools\tesseract\tessdata" >> $env:GITHUB_ENV

          # Verify installation using full paths (since PATH won't be updated in current session)
          & "C:\tools\tesseract\tesseract.exe" --version
          & "C:\tools\poppler\poppler-25.07.0\Library\bin\pdftoppm.exe" -v

      - name: Install Python dependencies
        run: |
          python -m pip install --upgrade pip
          pip install -r requirements.txt
          pip install pytest pytest-cov reportlab pillow

      - name: Download spaCy model
        run: |
          python -m spacy download en_core_web_lg

      - name: Setup test data
        run: |
          python .github/scripts/setup_test_data.py

      - name: Run CLI tests
        run: |
          cd test
          python test.py

      - name: Run tests with pytest
        run: |
          pytest test/test.py -v --tb=short
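The `strategy.matrix` block in this workflow combines an `os` axis with a `python-version` axis and then removes combinations listed under `exclude`. The sketch below models that expansion in plain Python to make the resulting job list easier to reason about. It is a simplified model, not GitHub's implementation: `expand_matrix` is a hypothetical helper, and it assumes an exclude entry matches a combination only when every key it names has an equal value in that combination.

```python
from itertools import product


def expand_matrix(axes, exclude=()):
    """Return every axis combination that is not matched by an exclude entry.

    An exclude entry matches a combination when every key it names has an
    equal value in that combination (a partial match is enough).
    """
    keys = list(axes)
    combos = [dict(zip(keys, values)) for values in product(*(axes[k] for k in keys))]
    return [
        combo
        for combo in combos
        if not any(all(combo.get(k) == v for k, v in entry.items()) for entry in exclude)
    ]


axes = {
    "os": ["ubuntu-latest", "macos-latest"],
    "python-version": ["3.11", "3.12", "3.13"],
}

# One exclude entry per combination, each with a scalar python-version.
scalar_exclude = [
    {"os": "macos-latest", "python-version": "3.12"},
    {"os": "macos-latest", "python-version": "3.13"},
]
jobs = expand_matrix(axes, scalar_exclude)

# A single entry whose python-version is a list, as written in the workflow.
list_exclude = [{"os": "macos-latest", "python-version": ["3.12", "3.13"]}]
jobs_with_list_entry = expand_matrix(axes, list_exclude)
```

Under this equality model, the scalar entries leave four jobs (three on Ubuntu plus macOS on 3.11), while the list-valued entry matches nothing and all six combinations remain. Whether GitHub Actions treats a list-valued `exclude` entry the same way is worth checking against its workflow syntax documentation before relying on it.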

.github/workflows/ci.yml

ADDED

@@ -0,0 +1,260 @@

name: CI/CD Pipeline

on:
  push:
    branches: [ main ]
  pull_request:
    branches: [ main ]
  #schedule:
  # Run tests daily at 2 AM UTC
  # - cron: '0 2 * * *'

permissions:
  contents: read
  actions: read
  pull-requests: write
  issues: write

env:
  PYTHON_VERSION: "3.11"

jobs:
  lint:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4

      - name: Set up Python
        uses: actions/setup-python@v4
        with:
          python-version: ${{ env.PYTHON_VERSION }}

      - name: Install dependencies
        run: |
          python -m pip install --upgrade pip
          pip install ruff black

      - name: Run Ruff linter
        run: ruff check .

      - name: Run Black formatter check
        run: black --check .

  test-unit:
    runs-on: ubuntu-latest
    strategy:
      matrix:
        python-version: [3.11, 3.12, 3.13]

    steps:
      - uses: actions/checkout@v4

      - name: Set up Python ${{ matrix.python-version }}
        uses: actions/setup-python@v4
        with:
          python-version: ${{ matrix.python-version }}

      - name: Cache pip dependencies
        uses: actions/cache@v4
        with:
          path: ~/.cache/pip
          key: ${{ runner.os }}-pip-${{ hashFiles('**/requirements.txt') }}
          restore-keys: |
            ${{ runner.os }}-pip-

      - name: Install system dependencies
        run: |
          sudo apt-get update
          sudo apt-get install -y \
            tesseract-ocr \
            tesseract-ocr-eng \
            poppler-utils \
            libgl1-mesa-dri \
            libglib2.0-0 \
            libsm6 \
            libxext6 \
            libxrender-dev \
            libgomp1

      - name: Install Python dependencies
        run: |
          python -m pip install --upgrade pip
          pip install -r requirements_lightweight.txt
          pip install pytest pytest-cov pytest-html pytest-xdist reportlab pillow

      - name: Download spaCy model
        run: |
          python -m spacy download en_core_web_lg

      - name: Setup test data
        run: |
          python .github/scripts/setup_test_data.py
          echo "Setup script completed. Checking results:"
          ls -la example_data/ || echo "example_data directory not found"

      - name: Verify test data files
        run: |
          echo "Checking if critical test files exist:"
          ls -la example_data/
          echo "Checking for specific PDF files:"
          ls -la example_data/*.pdf || echo "No PDF files found"
          echo "Checking file sizes:"
          find example_data -name "*.pdf" -exec ls -lh {} \;

|