Spaces:

Sleeping

Sleeping

Auto-deploy from GitHub Actions

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +121 -0

- .github/workflows/main.yml +60 -0

- .gitignore +54 -0

- README.md +253 -0

- app.py +872 -0

- dataset_preparation.ipynb +0 -0

- inference_outputs/image_000000_annotated.jpg +0 -0

- inference_outputs/image_002126_annotated.jpg +3 -0

- requirements.txt +0 -0

- saved_models/efficientnetb0_stage2_best.weights.h5 +3 -0

- saved_models/mobilenetv2_v2_stage2_best.weights.h5 +3 -0

- saved_models/resnet50_v2_stage2_best.weights.h5 +3 -0

- saved_models/vgg16_v2_stage2_best.h5 +3 -0

- scripts/01_Data Augmentation.ipynb +595 -0

- scripts/01_EDA.ipynb +0 -0

- scripts/02_efficientnetb0.py +385 -0

- scripts/02_mobilenetv2.py +430 -0

- scripts/02_model_comparision.ipynb +19 -0

- scripts/02_resnet50.py +482 -0

- scripts/02_vgg16.py +422 -0

- scripts/03_eval_yolo.py +151 -0

- scripts/03_train_yolo.py +56 -0

- scripts/03_yolo_dataset_creation.py +248 -0

- scripts/04_inference_pipeline.py +436 -0

- scripts/04_validation and cleaning.py +310 -0

- scripts/check.py +239 -0

- scripts/compare_models.py +267 -0

- scripts/convert_efficientnet_weights.py +109 -0

- scripts/convert_mobilenet_weights.py +83 -0

- scripts/convert_vgg16_weights.py +79 -0

- scripts/train_yolo_smartvision.py +428 -0

- scripts/yolov8n.pt +3 -0

- smartvision_metrics/comparison_plots/MobileNetV2_cm.png +3 -0

- smartvision_metrics/comparison_plots/MobileNetV2_v3_cm.png +3 -0

- smartvision_metrics/comparison_plots/ResNet50_cm.png +3 -0

- smartvision_metrics/comparison_plots/ResNet50_v2_Stage_2_FT_cm.png +3 -0

- smartvision_metrics/comparison_plots/VGG16_cm.png +3 -0

- smartvision_metrics/comparison_plots/VGG16_v2_Stage_2_FT_cm.png +3 -0

- smartvision_metrics/comparison_plots/accuracy_comparison.png +0 -0

- smartvision_metrics/comparison_plots/efficientnetb0_cm.png +3 -0

- smartvision_metrics/comparison_plots/f1_comparison.png +0 -0

- smartvision_metrics/comparison_plots/size_comparison.png +0 -0

- smartvision_metrics/comparison_plots/speed_comparison.png +0 -0

- smartvision_metrics/comparison_plots/top5_comparison.png +0 -0

- smartvision_metrics/efficientnetb0/confusion_matrix.npy +0 -0

- smartvision_metrics/efficientnetb0/metrics.json +12 -0

- smartvision_metrics/efficientnetb0_stage2/confusion_matrix.npy +0 -0

- smartvision_metrics/efficientnetb0_stage2/metrics.json +12 -0

- smartvision_metrics/mobilenetv2/confusion_matrix.npy +0 -0

- smartvision_metrics/mobilenetv2/metrics.json +12 -0

.gitattributes

ADDED

|

@@ -0,0 +1,121 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

|

| 2 |

+

saved_models/resnet50_v2_stage2_best.weights.h5 filter=lfs diff=lfs merge=lfs -text

|

| 3 |

+

saved_models/vgg16_v2_stage2_best.h5 filter=lfs diff=lfs merge=lfs -text

|

| 4 |

+

inference_outputs/image_002126_annotated.jpg filter=lfs diff=lfs merge=lfs -text

|

| 5 |

+

saved_models/efficientnetb0_stage2_best.weights.h5 filter=lfs diff=lfs merge=lfs -text

|

| 6 |

+

saved_models/mobilenetv2_v2_stage2_best.weights.h5 filter=lfs diff=lfs merge=lfs -text

|

| 7 |

+

scripts/yolov8n.pt filter=lfs diff=lfs merge=lfs -text

|

| 8 |

+

smartvision_metrics/comparison_plots/MobileNetV2_cm.png filter=lfs diff=lfs merge=lfs -text

|

| 9 |

+

smartvision_metrics/comparison_plots/MobileNetV2_v3_cm.png filter=lfs diff=lfs merge=lfs -text

|

| 10 |

+

smartvision_metrics/comparison_plots/ResNet50_cm.png filter=lfs diff=lfs merge=lfs -text

|

| 11 |

+

smartvision_metrics/comparison_plots/ResNet50_v2_Stage_2_FT_cm.png filter=lfs diff=lfs merge=lfs -text

|

| 12 |

+

smartvision_metrics/comparison_plots/VGG16_cm.png filter=lfs diff=lfs merge=lfs -text

|

| 13 |

+

smartvision_metrics/comparison_plots/VGG16_v2_Stage_2_FT_cm.png filter=lfs diff=lfs merge=lfs -text

|

| 14 |

+

smartvision_metrics/comparison_plots/efficientnetb0_cm.png filter=lfs diff=lfs merge=lfs -text

|

| 15 |

+

smartvision_yolo/yolov8n_25classes/BoxF1_curve.png filter=lfs diff=lfs merge=lfs -text

|

| 16 |

+

smartvision_yolo/yolov8n_25classes/BoxPR_curve.png filter=lfs diff=lfs merge=lfs -text

|

| 17 |

+

smartvision_yolo/yolov8n_25classes/BoxP_curve.png filter=lfs diff=lfs merge=lfs -text

|

| 18 |

+

smartvision_yolo/yolov8n_25classes/BoxR_curve.png filter=lfs diff=lfs merge=lfs -text

|

| 19 |

+

smartvision_yolo/yolov8n_25classes/confusion_matrix.png filter=lfs diff=lfs merge=lfs -text

|

| 20 |

+

smartvision_yolo/yolov8n_25classes/confusion_matrix_normalized.png filter=lfs diff=lfs merge=lfs -text

|

| 21 |

+

smartvision_yolo/yolov8n_25classes/labels.jpg filter=lfs diff=lfs merge=lfs -text

|

| 22 |

+

smartvision_yolo/yolov8n_25classes/results.png filter=lfs diff=lfs merge=lfs -text

|

| 23 |

+

smartvision_yolo/yolov8n_25classes/train_batch0.jpg filter=lfs diff=lfs merge=lfs -text

|

| 24 |

+

smartvision_yolo/yolov8n_25classes/train_batch1.jpg filter=lfs diff=lfs merge=lfs -text

|

| 25 |

+

smartvision_yolo/yolov8n_25classes/train_batch1260.jpg filter=lfs diff=lfs merge=lfs -text

|

| 26 |

+

smartvision_yolo/yolov8n_25classes/train_batch1261.jpg filter=lfs diff=lfs merge=lfs -text

|

| 27 |

+

smartvision_yolo/yolov8n_25classes/train_batch1262.jpg filter=lfs diff=lfs merge=lfs -text

|

| 28 |

+

smartvision_yolo/yolov8n_25classes/train_batch2.jpg filter=lfs diff=lfs merge=lfs -text

|

| 29 |

+

smartvision_yolo/yolov8n_25classes/val_batch0_labels.jpg filter=lfs diff=lfs merge=lfs -text

|

| 30 |

+

smartvision_yolo/yolov8n_25classes/val_batch0_pred.jpg filter=lfs diff=lfs merge=lfs -text

|

| 31 |

+

smartvision_yolo/yolov8n_25classes/val_batch1_labels.jpg filter=lfs diff=lfs merge=lfs -text

|

| 32 |

+

smartvision_yolo/yolov8n_25classes/val_batch1_pred.jpg filter=lfs diff=lfs merge=lfs -text

|

| 33 |

+

smartvision_yolo/yolov8n_25classes/val_batch2_labels.jpg filter=lfs diff=lfs merge=lfs -text

|

| 34 |

+

smartvision_yolo/yolov8n_25classes/val_batch2_pred.jpg filter=lfs diff=lfs merge=lfs -text

|

| 35 |

+

smartvision_yolo/yolov8n_25classes/weights/best.pt filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

smartvision_yolo/yolov8n_25classes/weights/last.pt filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/BoxF1_curve.png filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/BoxPR_curve.png filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/BoxP_curve.png filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/BoxR_curve.png filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/confusion_matrix.png filter=lfs diff=lfs merge=lfs -text

|

| 42 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/confusion_matrix_normalized.png filter=lfs diff=lfs merge=lfs -text

|

| 43 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/labels.jpg filter=lfs diff=lfs merge=lfs -text

|

| 44 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/results.png filter=lfs diff=lfs merge=lfs -text

|

| 45 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/train_batch0.jpg filter=lfs diff=lfs merge=lfs -text

|

| 46 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/train_batch1.jpg filter=lfs diff=lfs merge=lfs -text

|

| 47 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/train_batch2.jpg filter=lfs diff=lfs merge=lfs -text

|

| 48 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/train_batch8400.jpg filter=lfs diff=lfs merge=lfs -text

|

| 49 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/train_batch8401.jpg filter=lfs diff=lfs merge=lfs -text

|

| 50 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/train_batch8402.jpg filter=lfs diff=lfs merge=lfs -text

|

| 51 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/val_batch0_labels.jpg filter=lfs diff=lfs merge=lfs -text

|

| 52 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/val_batch0_pred.jpg filter=lfs diff=lfs merge=lfs -text

|

| 53 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/val_batch1_labels.jpg filter=lfs diff=lfs merge=lfs -text

|

| 54 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/val_batch1_pred.jpg filter=lfs diff=lfs merge=lfs -text

|

| 55 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/val_batch2_labels.jpg filter=lfs diff=lfs merge=lfs -text

|

| 56 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/val_batch2_pred.jpg filter=lfs diff=lfs merge=lfs -text

|

| 57 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/weights/best.pt filter=lfs diff=lfs merge=lfs -text

|

| 58 |

+

yolo_runs/smartvision_yolov8s6[[:space:]]-[[:space:]]Copy/weights/last.pt filter=lfs diff=lfs merge=lfs -text

|

| 59 |

+

yolo_runs/smartvision_yolov8s_alltrain/BoxF1_curve.png filter=lfs diff=lfs merge=lfs -text

|

| 60 |

+

yolo_runs/smartvision_yolov8s_alltrain/BoxPR_curve.png filter=lfs diff=lfs merge=lfs -text

|

| 61 |

+

yolo_runs/smartvision_yolov8s_alltrain/BoxP_curve.png filter=lfs diff=lfs merge=lfs -text

|

| 62 |

+

yolo_runs/smartvision_yolov8s_alltrain/BoxR_curve.png filter=lfs diff=lfs merge=lfs -text

|

| 63 |

+

yolo_runs/smartvision_yolov8s_alltrain/confusion_matrix.png filter=lfs diff=lfs merge=lfs -text

|

| 64 |

+

yolo_runs/smartvision_yolov8s_alltrain/confusion_matrix_normalized.png filter=lfs diff=lfs merge=lfs -text

|

| 65 |

+

yolo_runs/smartvision_yolov8s_alltrain/labels.jpg filter=lfs diff=lfs merge=lfs -text

|

| 66 |

+

yolo_runs/smartvision_yolov8s_alltrain/results.png filter=lfs diff=lfs merge=lfs -text

|

| 67 |

+

yolo_runs/smartvision_yolov8s_alltrain/train_batch0.jpg filter=lfs diff=lfs merge=lfs -text

|

| 68 |

+

yolo_runs/smartvision_yolov8s_alltrain/train_batch1.jpg filter=lfs diff=lfs merge=lfs -text

|

| 69 |

+

yolo_runs/smartvision_yolov8s_alltrain/train_batch2.jpg filter=lfs diff=lfs merge=lfs -text

|

| 70 |

+

yolo_runs/smartvision_yolov8s_alltrain/val_batch0_labels.jpg filter=lfs diff=lfs merge=lfs -text

|

| 71 |

+

yolo_runs/smartvision_yolov8s_alltrain/val_batch0_pred.jpg filter=lfs diff=lfs merge=lfs -text

|

| 72 |

+

yolo_runs/smartvision_yolov8s_alltrain/val_batch1_labels.jpg filter=lfs diff=lfs merge=lfs -text

|

| 73 |

+

yolo_runs/smartvision_yolov8s_alltrain/val_batch1_pred.jpg filter=lfs diff=lfs merge=lfs -text

|

| 74 |

+

yolo_runs/smartvision_yolov8s_alltrain/val_batch2_labels.jpg filter=lfs diff=lfs merge=lfs -text

|

| 75 |

+

yolo_runs/smartvision_yolov8s_alltrain/val_batch2_pred.jpg filter=lfs diff=lfs merge=lfs -text

|

| 76 |

+

yolo_runs/smartvision_yolov8s_alltrain/weights/best.pt filter=lfs diff=lfs merge=lfs -text

|

| 77 |

+

yolo_runs/smartvision_yolov8s_alltrain/weights/last.pt filter=lfs diff=lfs merge=lfs -text

|

| 78 |

+

yolo_runs/smartvision_yolov8s_alltrain2/labels.jpg filter=lfs diff=lfs merge=lfs -text

|

| 79 |

+

yolo_runs/smartvision_yolov8s_alltrain2/train_batch0.jpg filter=lfs diff=lfs merge=lfs -text

|

| 80 |

+

yolo_runs/smartvision_yolov8s_alltrain2/train_batch1.jpg filter=lfs diff=lfs merge=lfs -text

|

| 81 |

+

yolo_runs/smartvision_yolov8s_alltrain2/train_batch2.jpg filter=lfs diff=lfs merge=lfs -text

|

| 82 |

+

yolo_runs/smartvision_yolov8s_alltrain3/labels.jpg filter=lfs diff=lfs merge=lfs -text

|

| 83 |

+

yolo_runs/smartvision_yolov8s_alltrain3/train_batch0.jpg filter=lfs diff=lfs merge=lfs -text

|

| 84 |

+

yolo_runs/smartvision_yolov8s_alltrain3/train_batch1.jpg filter=lfs diff=lfs merge=lfs -text

|

| 85 |

+

yolo_runs/smartvision_yolov8s_alltrain3/train_batch2.jpg filter=lfs diff=lfs merge=lfs -text

|

| 86 |

+

yolo_runs/smartvision_yolov8s_alltrain3/weights/best.pt filter=lfs diff=lfs merge=lfs -text

|

| 87 |

+

yolo_runs/smartvision_yolov8s_alltrain3/weights/last.pt filter=lfs diff=lfs merge=lfs -text

|

| 88 |

+

yolo_vis/samples/image_000001.jpg filter=lfs diff=lfs merge=lfs -text

|

| 89 |

+

yolo_vis/samples/image_000003.jpg filter=lfs diff=lfs merge=lfs -text

|

| 90 |

+

yolo_vis/samples/image_000004.jpg filter=lfs diff=lfs merge=lfs -text

|

| 91 |

+

yolo_vis/samples/image_000005.jpg filter=lfs diff=lfs merge=lfs -text

|

| 92 |

+

yolo_vis/samples/image_000006.jpg filter=lfs diff=lfs merge=lfs -text

|

| 93 |

+

yolo_vis/samples/image_000007.jpg filter=lfs diff=lfs merge=lfs -text

|

| 94 |

+

yolo_vis/samples2/image_000001.jpg filter=lfs diff=lfs merge=lfs -text

|

| 95 |

+

yolo_vis/samples2/image_000002.jpg filter=lfs diff=lfs merge=lfs -text

|

| 96 |

+

yolo_vis/samples2/image_000003.jpg filter=lfs diff=lfs merge=lfs -text

|

| 97 |

+

yolo_vis/samples2/image_000004.jpg filter=lfs diff=lfs merge=lfs -text

|

| 98 |

+

yolo_vis/samples2/image_000005.jpg filter=lfs diff=lfs merge=lfs -text

|

| 99 |

+

yolo_vis/samples2/image_000007.jpg filter=lfs diff=lfs merge=lfs -text

|

| 100 |

+

yolo_vis/samples3/image_001750.jpg filter=lfs diff=lfs merge=lfs -text

|

| 101 |

+

yolo_vis/samples3/image_001752.jpg filter=lfs diff=lfs merge=lfs -text

|

| 102 |

+

yolo_vis/samples3/image_001753.jpg filter=lfs diff=lfs merge=lfs -text

|

| 103 |

+

yolo_vis/samples3/image_001755.jpg filter=lfs diff=lfs merge=lfs -text

|

| 104 |

+

yolo_vis/samples3/image_001756.jpg filter=lfs diff=lfs merge=lfs -text

|

| 105 |

+

yolo_vis/samples3/image_001757.jpg filter=lfs diff=lfs merge=lfs -text

|

| 106 |

+

yolo_vis/samples4/image_001750.jpg filter=lfs diff=lfs merge=lfs -text

|

| 107 |

+

yolo_vis/samples4/image_001751.jpg filter=lfs diff=lfs merge=lfs -text

|

| 108 |

+

yolo_vis/samples4/image_001752.jpg filter=lfs diff=lfs merge=lfs -text

|

| 109 |

+

yolo_vis/samples4/image_001753.jpg filter=lfs diff=lfs merge=lfs -text

|

| 110 |

+

yolo_vis/samples4/image_001754.jpg filter=lfs diff=lfs merge=lfs -text

|

| 111 |

+

yolo_vis/samples4/image_001755.jpg filter=lfs diff=lfs merge=lfs -text

|

| 112 |

+

yolo_vis/samples4/image_001757.jpg filter=lfs diff=lfs merge=lfs -text

|

| 113 |

+

yolo_vis/samples_debug/image_001750.jpg filter=lfs diff=lfs merge=lfs -text

|

| 114 |

+

yolo_vis/samples_debug/image_001752.jpg filter=lfs diff=lfs merge=lfs -text

|

| 115 |

+

yolo_vis/samples_debug/image_001753.jpg filter=lfs diff=lfs merge=lfs -text

|

| 116 |

+

yolo_vis/samples_debug2/image_001750.jpg filter=lfs diff=lfs merge=lfs -text

|

| 117 |

+

yolo_vis/samples_debug2/image_001751.jpg filter=lfs diff=lfs merge=lfs -text

|

| 118 |

+

yolo_vis/samples_debug2/image_001752.jpg filter=lfs diff=lfs merge=lfs -text

|

| 119 |

+

yolo_vis/samples_debug2/image_001753.jpg filter=lfs diff=lfs merge=lfs -text

|

| 120 |

+

yolov8n.pt filter=lfs diff=lfs merge=lfs -text

|

| 121 |

+

yolov8s.pt filter=lfs diff=lfs merge=lfs -text

|

.github/workflows/main.yml

ADDED

|

@@ -0,0 +1,60 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

name: Deploy to Hugging Face Space

|

| 2 |

+

|

| 3 |

+

on:

|

| 4 |

+

push:

|

| 5 |

+

branches:

|

| 6 |

+

- main

|

| 7 |

+

workflow_dispatch:

|

| 8 |

+

|

| 9 |

+

jobs:

|

| 10 |

+

deploy:

|

| 11 |

+

runs-on: ubuntu-latest

|

| 12 |

+

|

| 13 |

+

steps:

|

| 14 |

+

# Step 1 — Checkout repo with LFS

|

| 15 |

+

- name: Checkout repository

|

| 16 |

+

uses: actions/checkout@v4

|

| 17 |

+

with:

|

| 18 |

+

fetch-depth: 0

|

| 19 |

+

lfs: true

|

| 20 |

+

|

| 21 |

+

# (Optional) Verify that LFS files are real binaries, not pointers

|

| 22 |

+

- name: Verify model files

|

| 23 |

+

run: |

|

| 24 |

+

ls -lh saved_models || echo "saved_models folder not found"

|

| 25 |

+

file saved_models/resnet50_v2_stage2_best.weights.h5 || echo "resnet file missing"

|

| 26 |

+

file saved_models/vgg16_v2_stage2_best.h5 || echo "vgg16 file missing"

|

| 27 |

+

|

| 28 |

+

# Step 2 — Set up Python

|

| 29 |

+

- name: Set up Python

|

| 30 |

+

uses: actions/setup-python@v4

|

| 31 |

+

with:

|

| 32 |

+

python-version: "3.10"

|

| 33 |

+

|

| 34 |

+

# Step 3 — Install Hugging Face Hub client

|

| 35 |

+

- name: Install Hugging Face Hub

|

| 36 |

+

run: pip install --upgrade huggingface_hub

|

| 37 |

+

|

| 38 |

+

# Step 4 — Upload entire repo to the Space

|

| 39 |

+

- name: Deploy to Hugging Face Space

|

| 40 |

+

env:

|

| 41 |

+

HF_TOKEN_01: ${{ secrets.HF_TOKEN_01 }}

|

| 42 |

+

HF_SPACE_ID: "yogesh-venkat/SmartVision_AI"

|

| 43 |

+

run: |

|

| 44 |

+

python - << 'EOF'

|

| 45 |

+

from huggingface_hub import HfApi

|

| 46 |

+

import os

|

| 47 |

+

|

| 48 |

+

space_id = os.getenv("HF_SPACE_ID")

|

| 49 |

+

token = os.getenv("HF_TOKEN_01")

|

| 50 |

+

api = HfApi()

|

| 51 |

+

|

| 52 |

+

print(f"🚀 Deploying to Hugging Face Space: {space_id}")

|

| 53 |

+

api.upload_folder(

|

| 54 |

+

repo_id=space_id,

|

| 55 |

+

repo_type="space",

|

| 56 |

+

folder_path=".",

|

| 57 |

+

token=token,

|

| 58 |

+

commit_message="Auto-deploy from GitHub Actions",

|

| 59 |

+

)

|

| 60 |

+

EOF

|

.gitignore

ADDED

|

@@ -0,0 +1,54 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# --------------------------------------------------

|

| 2 |

+

# Python general

|

| 3 |

+

# --------------------------------------------------

|

| 4 |

+

__pycache__/

|

| 5 |

+

*.py[cod]

|

| 6 |

+

*.pyo

|

| 7 |

+

*.pyd

|

| 8 |

+

*.so

|

| 9 |

+

*.egg-info/

|

| 10 |

+

.env

|

| 11 |

+

.venv

|

| 12 |

+

env/

|

| 13 |

+

venv/

|

| 14 |

+

ENV/

|

| 15 |

+

.ipynb_checkpoints/

|

| 16 |

+

|

| 17 |

+

# --------------------------------------------------

|

| 18 |

+

# OS / Editor junk

|

| 19 |

+

# --------------------------------------------------

|

| 20 |

+

.DS_Store

|

| 21 |

+

Thumbs.db

|

| 22 |

+

.idea/

|

| 23 |

+

.vscode/

|

| 24 |

+

*.swp

|

| 25 |

+

|

| 26 |

+

# --------------------------------------------------

|

| 27 |

+

# Streamlit

|

| 28 |

+

# --------------------------------------------------

|

| 29 |

+

.streamlit/cache/

|

| 30 |

+

.streamlit/static/

|

| 31 |

+

|

| 32 |

+

# --------------------------------------------------

|

| 33 |

+

# Logs

|

| 34 |

+

# --------------------------------------------------

|

| 35 |

+

logs/

|

| 36 |

+

*.log

|

| 37 |

+

|

| 38 |

+

# --------------------------------------------------

|

| 39 |

+

# Datasets (local only)

|

| 40 |

+

# --------------------------------------------------

|

| 41 |

+

smartvision_dataset/

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

|

| 45 |

+

# --------------------------------------------------

|

| 46 |

+

# Misc

|

| 47 |

+

# --------------------------------------------------

|

| 48 |

+

*.tmp

|

| 49 |

+

*.bak

|

| 50 |

+

*.old

|

| 51 |

+

|

| 52 |

+

|

| 53 |

+

untitled*

|

| 54 |

+

draft*

|

README.md

ADDED

|

@@ -0,0 +1,253 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

license: mit

|

| 3 |

+

title: SmartVision AI

|

| 4 |

+

sdk: streamlit

|

| 5 |

+

emoji: 🚀

|

| 6 |

+

colorFrom: red

|

| 7 |

+

colorTo: red

|

| 8 |

+

short_description: Multi-domain smart object detection and classification syste

|

| 9 |

+

---

|

| 10 |

+

|

| 11 |

+

# SmartVision AI – Complete Vision Pipeline (YOLOv8 + CNN Classifiers + Streamlit Dashboard)

|

| 12 |

+

|

| 13 |

+

SmartVision AI is a fully integrated **Computer Vision system** that combines:

|

| 14 |

+

|

| 15 |

+

- **Object Detection** using YOLOv8

|

| 16 |

+

- **Image Classification** using 4 deep-learning models:

|

| 17 |

+

**VGG16**, **ResNet50**, **MobileNetV2**, **EfficientNetB0**

|

| 18 |

+

- A complete **Streamlit-based Dashboard** for inference, comparison, metrics visualization, and webcam snapshots

|

| 19 |

+

- A modified dataset built on a **25‑class COCO subset**

|

| 20 |

+

|

| 21 |

+

This README explains setup, architecture, training, deployment, and usage.

|

| 22 |

+

|

| 23 |

+

---

|

| 24 |

+

|

| 25 |

+

## 🚀 Features

|

| 26 |

+

|

| 27 |

+

### ✅ 1. Image Classification (4 Models)

|

| 28 |

+

Each model is fine‑tuned on your custom 25‑class dataset:

|

| 29 |

+

- **VGG16**

|

| 30 |

+

- **ResNet50**

|

| 31 |

+

- **MobileNetV2**

|

| 32 |

+

- **EfficientNetB0**

|

| 33 |

+

|

| 34 |

+

Outputs:

|

| 35 |

+

- Top‑1 class prediction

|

| 36 |

+

- Top‑5 predictions

|

| 37 |

+

- Class probabilities

|

| 38 |

+

|

| 39 |

+

---

|

| 40 |

+

|

| 41 |

+

### 🎯 2. Object Detection – YOLOv8s

|

| 42 |

+

YOLO detects multiple objects in images or webcam snapshots.

|

| 43 |

+

|

| 44 |

+

Features:

|

| 45 |

+

- Bounding boxes

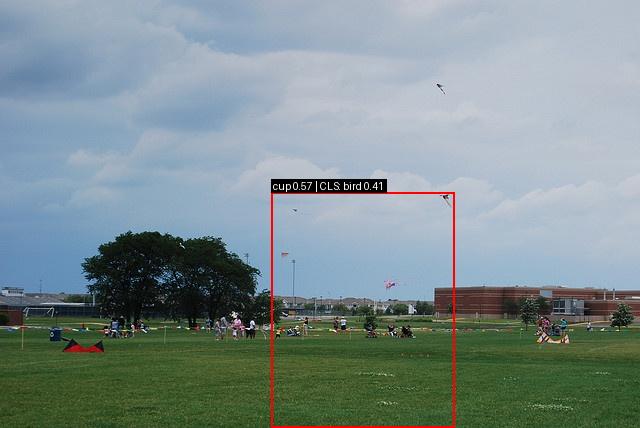

|

| 46 |

+

- Confidence scores

|

| 47 |

+

- Optional classification verification using ResNet50

|

| 48 |

+

- Annotated images saved automatically

|

| 49 |

+

|

| 50 |

+

---

|

| 51 |

+

|

| 52 |

+

### 🔗 3. Integrated Classification + Detection Pipeline

|

| 53 |

+

For each YOLO‑detected box:

|

| 54 |

+

1. Crop region

|

| 55 |

+

2. Classify using chosen CNN model

|

| 56 |

+

3. Display YOLO label + classifier label

|

| 57 |

+

4. Draw combined annotated results

|

| 58 |

+

|

| 59 |

+

---

|

| 60 |

+

|

| 61 |

+

### 📊 4. Metrics Dashboard

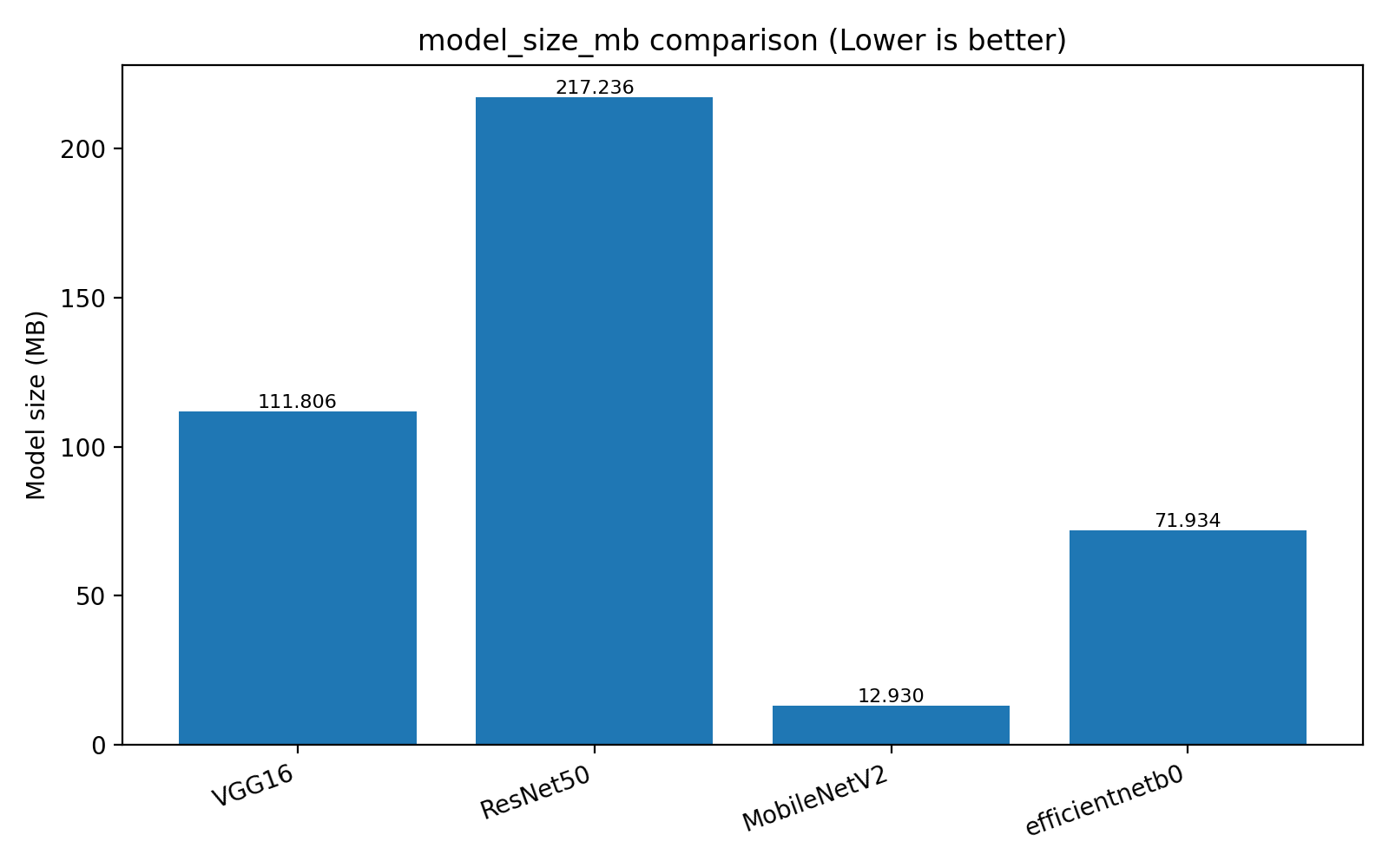

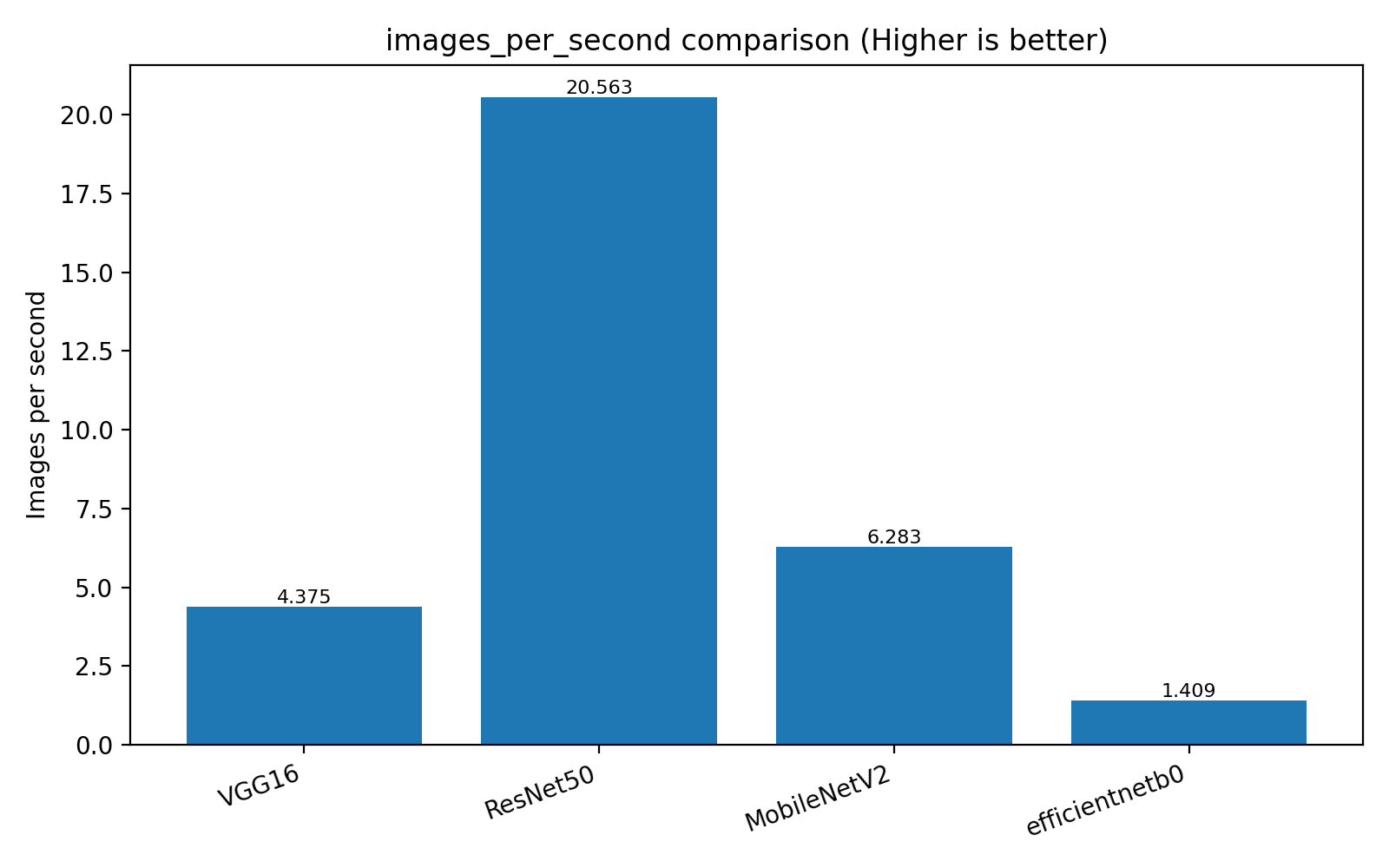

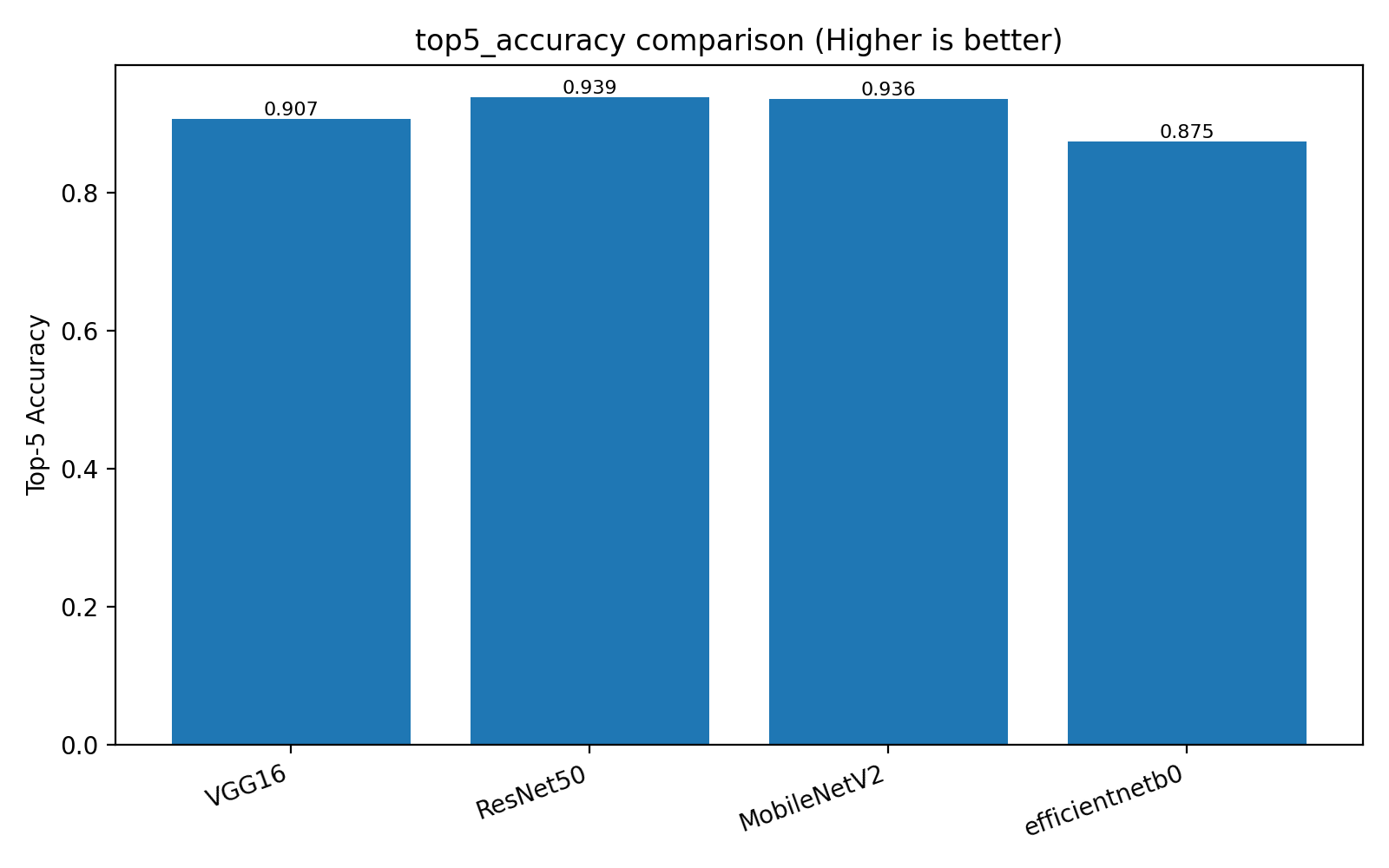

|

| 62 |

+

Displays:

|

| 63 |

+

- Accuracy

|

| 64 |

+

- Weighted F1 score

|

| 65 |

+

- Top‑5 accuracy

|

| 66 |

+

- Images per second

|

| 67 |

+

- Model size

|

| 68 |

+

- YOLOv8 mAP scores

|

| 69 |

+

- Confusion matrices

|

| 70 |

+

- Comparison bar charts

|

| 71 |

+

|

| 72 |

+

---

|

| 73 |

+

|

| 74 |

+

### 📷 5. Webcam Snapshot Detection

|

| 75 |

+

Take a photo via webcam → YOLO detection → annotated results.

|

| 76 |

+

|

| 77 |

+

---

|

| 78 |

+

|

| 79 |

+

## 📁 Project Structure

|

| 80 |

+

|

| 81 |

+

```

|

| 82 |

+

SmartVision_AI/

|

| 83 |

+

│

|

| 84 |

+

├── app.py # Main Streamlit App

|

| 85 |

+

├── saved_models/ # Trained weights (VGG16, ResNet, MobileNetV2, EfficientNet)

|

| 86 |

+

├── yolo_runs/ # YOLOv8 training folder

|

| 87 |

+

├── smartvision_dataset/ # 25-class dataset

|

| 88 |

+

│ ├── classification/

|

| 89 |

+

│ │ ├── train/

|

| 90 |

+

│ │ ├── val/

|

| 91 |

+

│ │ └── test/

|

| 92 |

+

│ └── detection/ # Labels + images for YOLOv8

|

| 93 |

+

│

|

| 94 |

+

├── smartvision_metrics/ # Accuracy, F1, confusion matrices

|

| 95 |

+

├── scripts/ # Weight converters, training scripts

|

| 96 |

+

├── inference_outputs/ # Annotated results

|

| 97 |

+

├── requirements.txt

|

| 98 |

+

└── README.md

|

| 99 |

+

```

|

| 100 |

+

|

| 101 |

+

---

|

| 102 |

+

|

| 103 |

+

## ⚙️ Installation

|

| 104 |

+

|

| 105 |

+

### 1️⃣ Clone Repository

|

| 106 |

+

|

| 107 |

+

```

|

| 108 |

+

git clone https://github.com/<your-username>/SmartVision_AI.git

|

| 109 |

+

cd SmartVision_AI

|

| 110 |

+

```

|

| 111 |

+

|

| 112 |

+

### 2️⃣ Install Dependencies

|

| 113 |

+

|

| 114 |

+

```

|

| 115 |

+

pip install -r requirements.txt

|

| 116 |

+

```

|

| 117 |

+

|

| 118 |

+

### 3️⃣ Install YOLOv8 (Ultralytics)

|

| 119 |

+

|

| 120 |

+

```

|

| 121 |

+

pip install ultralytics

|

| 122 |

+

```

|

| 123 |

+

|

| 124 |

+

---

|

| 125 |

+

|

| 126 |

+

## ▶️ Run Streamlit App

|

| 127 |

+

|

| 128 |

+

```

|

| 129 |

+

streamlit run app.py

|

| 130 |

+

```

|

| 131 |

+

|

| 132 |

+

App will open at:

|

| 133 |

+

|

| 134 |

+

```

|

| 135 |

+

http://localhost:8501

|

| 136 |

+

```

|

| 137 |

+

|

| 138 |

+

---

|

| 139 |

+

|

| 140 |

+

## 🏋️ Training Workflow

|

| 141 |

+

|

| 142 |

+

### 1️⃣ Classification Models

|

| 143 |

+

Each model has:

|

| 144 |

+

- Stage 1 → Train head with frozen backbone

|

| 145 |

+

- Stage 2 → Unfreeze top layers + fine‑tune

|

| 146 |

+

|

| 147 |

+

Scripts:

|

| 148 |

+

```

|

| 149 |

+

scripts/train_mobilenetv2.py

|

| 150 |

+

scripts/train_efficientnetb0.py

|

| 151 |

+

scripts/train_resnet50.py

|

| 152 |

+

scripts/train_vgg16.py

|

| 153 |

+

```

|

| 154 |

+

|

| 155 |

+

### 2️⃣ YOLO Training

|

| 156 |

+

|

| 157 |

+

```

|

| 158 |

+

yolo task=detect mode=train model=yolov8s.pt data=data.yaml epochs=50 imgsz=640

|

| 159 |

+

```

|

| 160 |

+

|

| 161 |

+

Outputs saved to:

|

| 162 |

+

```

|

| 163 |

+

yolo_runs/smartvision_yolov8s/

|

| 164 |

+

```

|

| 165 |

+

|

| 166 |

+

---

|

| 167 |

+

|

| 168 |

+

## 🧪 Supported Classes (25 COCO Classes)

|

| 169 |

+

|

| 170 |

+

```

|

| 171 |

+

airplane, bed, bench, bicycle, bird, bottle, bowl,

|

| 172 |

+

bus, cake, car, cat, chair, couch, cow, cup, dog,

|

| 173 |

+

elephant, horse, motorcycle, person, pizza, potted plant,

|

| 174 |

+

stop sign, traffic light, truck

|

| 175 |

+

```

|

| 176 |

+

|

| 177 |

+

---

|

| 178 |

+

|

| 179 |

+

## 🧰 Deployment on Hugging Face Spaces

|

| 180 |

+

|

| 181 |

+

You can deploy using **Streamlit SDK**.

|

| 182 |

+

|

| 183 |

+

### Steps:

|

| 184 |

+

1. Create public repository on GitHub

|

| 185 |

+

2. Push project files

|

| 186 |

+

3. Create new Hugging Face Space → select **Streamlit**

|

| 187 |

+

4. Connect GitHub repo

|

| 188 |

+

5. Add `requirements.txt`

|

| 189 |

+

6. Enable **GPU** for YOLO (optional)

|

| 190 |

+

7. Deploy 🚀

|

| 191 |

+

|

| 192 |

+

---

|

| 193 |

+

|

| 194 |

+

## 🧾 requirements.txt Example

|

| 195 |

+

|

| 196 |

+

```

|

| 197 |

+

streamlit

|

| 198 |

+

tensorflow==2.13.0

|

| 199 |

+

ultralytics

|

| 200 |

+

numpy

|

| 201 |

+

pandas

|

| 202 |

+

Pillow

|

| 203 |

+

matplotlib

|

| 204 |

+

scikit-learn

|

| 205 |

+

opencv-python-headless

|

| 206 |

+

```

|

| 207 |

+

|

| 208 |

+

---

|

| 209 |

+

|

| 210 |

+

## 📄 .gitignore Example

|

| 211 |

+

|

| 212 |

+

```

|

| 213 |

+

saved_models/

|

| 214 |

+

*.h5

|

| 215 |

+

*.pt

|

| 216 |

+

*.weights.h5

|

| 217 |

+

yolo_runs/

|

| 218 |

+

smartvision_metrics/

|

| 219 |

+

inference_outputs/

|

| 220 |

+

__pycache__/

|

| 221 |

+

*.pyc

|

| 222 |

+

.DS_Store

|

| 223 |

+

env/

|

| 224 |

+

```

|

| 225 |

+

|

| 226 |

+

---

|

| 227 |

+

|

| 228 |

+

## 🙋 Developer

|

| 229 |

+

|

| 230 |

+

**SmartVision AI Project**

|

| 231 |

+

Yogesh Kumar V

|

| 232 |

+

M.Sc. Seed Science & Technology (TNAU)

|

| 233 |

+

Passion: AI, Computer Vision, Agribusiness Technology

|

| 234 |

+

|

| 235 |

+

---

|

| 236 |

+

|

| 237 |

+

## 🏁 Conclusion

|

| 238 |

+

|

| 239 |

+

SmartVision AI integrates:

|

| 240 |

+

- Multi‑model classification

|

| 241 |

+

- YOLO detection

|

| 242 |

+

- Streamlit visualization

|

| 243 |

+

- Full evaluation suite

|

| 244 |

+

|

| 245 |

+

Perfect for:

|

| 246 |

+

- Research

|

| 247 |

+

- Demonstrations

|

| 248 |

+

- CV/AI portfolio

|

| 249 |

+

- Real‑world image understanding

|

| 250 |

+

|

| 251 |

+

---

|

| 252 |

+

|

| 253 |

+

Enjoy using SmartVision AI! 🚀🧠

|

app.py

ADDED

|

@@ -0,0 +1,872 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

import time

|

| 3 |

+

import json

|

| 4 |

+

from typing import Dict, Any, List

|

| 5 |

+

|

| 6 |

+

import numpy as np

|

| 7 |

+

from PIL import Image, ImageDraw, ImageFont

|

| 8 |

+

|

| 9 |

+

import streamlit as st

|

| 10 |

+

import pandas as pd

|

| 11 |

+

|

| 12 |

+

import tensorflow as tf

|

| 13 |

+

from tensorflow import keras

|

| 14 |

+

from tensorflow.keras import layers, regularizers

|

| 15 |

+

from ultralytics import YOLO

|

| 16 |

+

|

| 17 |

+

# Keras application imports

|

| 18 |

+

from tensorflow.keras.applications.vgg16 import VGG16, preprocess_input as vgg16_preprocess

|

| 19 |

+

from tensorflow.keras.applications.efficientnet import EfficientNetB0, preprocess_input as effnet_preprocess

|

| 20 |

+

|

| 21 |

+

# ------------------------------------------------------------

|

| 22 |

+

# GLOBAL CONFIG

|

| 23 |

+

# ------------------------------------------------------------

|

| 24 |

+

st.set_page_config(

|

| 25 |

+

page_title="SmartVision AI",

|

| 26 |

+

page_icon="🧠",

|

| 27 |

+

layout="wide",

|

| 28 |

+

)

|

| 29 |

+

|

| 30 |

+

st.markdown(

|

| 31 |

+

"""

|

| 32 |

+

<h1 style='text-align:center;'>

|

| 33 |

+

🤖⚡ <b>SmartVision AI</b> ⚡🤖

|

| 34 |

+

</h1>

|

| 35 |

+

<h3 style='text-align:center; margin-top:-10px;'>

|

| 36 |

+

🔎🎯 Intelligent Multi-Class Object Recognition System 🎯🔎

|

| 37 |

+

</h3>

|

| 38 |

+

""",

|

| 39 |

+

unsafe_allow_html=True

|

| 40 |

+

)

|

| 41 |

+

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

st.markdown(

|

| 45 |

+

"<p style='text-align:center; color: gray;'>End-to-end computer vision pipeline on a COCO subset of 25 everyday object classes</p>",

|

| 46 |

+

unsafe_allow_html=True

|

| 47 |

+

)

|

| 48 |

+

|

| 49 |

+

st.divider()

|

| 50 |

+

|

| 51 |

+

from pathlib import Path

|

| 52 |

+

|

| 53 |

+

# Resolve repository root relative to this file (streamlit_app/app.py)

|

| 54 |

+

THIS_FILE = Path(__file__).resolve()

|

| 55 |

+

REPO_ROOT = THIS_FILE.parent # repo/

|

| 56 |

+

SAVED_MODELS_DIR = REPO_ROOT / "saved_models"

|

| 57 |

+

YOLO_RUNS_DIR = REPO_ROOT / "yolo_runs"

|

| 58 |

+

SMARTVISION_METRICS_DIR = REPO_ROOT / "smartvision_metrics"

|

| 59 |

+

SMARTVISION_DATASET_DIR = REPO_ROOT / "smartvision_dataset"

|

| 60 |

+

|

| 61 |

+

# Then turn constants into Path objects / strings

|

| 62 |

+

YOLO_WEIGHTS_PATH = str(YOLO_RUNS_DIR / "smartvision_yolov8s6 - Copy" / "weights" / "best.pt")

|

| 63 |

+

|

| 64 |

+

CLASSIFIER_MODEL_CONFIGS = {

|

| 65 |

+

"VGG16": {

|

| 66 |

+

"type": "vgg16",

|

| 67 |

+

"path": str(SAVED_MODELS_DIR / "vgg16_v2_stage2_best.h5"),

|

| 68 |

+

},

|

| 69 |

+

"ResNet50": {

|

| 70 |

+

"type": "resnet50",

|

| 71 |

+

"path": str(SAVED_MODELS_DIR / "resnet50_v2_stage2_best.weights.h5"),

|

| 72 |

+

},

|

| 73 |

+

"MobileNetV2": {

|

| 74 |

+

"type": "mobilenetv2",

|

| 75 |

+

"path": str(SAVED_MODELS_DIR / "mobilenetv2_v2_stage2_best.weights.h5"),

|

| 76 |

+

},

|

| 77 |

+

"EfficientNetB0": {

|

| 78 |

+