text

stringlengths 454

608k

| url

stringlengths 17

896

| dump

stringclasses 91

values | source

stringclasses 1

value | word_count

int64 101

114k

| flesch_reading_ease

float64 50

104

|

|---|---|---|---|---|---|

I’m having trouble doing a few things and would appreciate some help if possible.

- Optionally adding a context menu item if another package is installed

- Figuring out how to add the function call to do this (which file do I create? where does it go? what are required functions to get started?)

- Figuring out how to add a context menu item and getting the textual contents of what was right clicked?

Now I think #2 is adding a ‘main’ entry to the package.json file. I think that I need to call atom.commands.add() in the module.exports.activate function of the file pointed to in main in the package.json file. I don’t know how to remove the item on deactivate and I don’t know how to test for the installation or availability of another package before continuing. A lot of functions have something like namespace:function-name. How do I declare that namespace and function name specifically?

Finally, there seems to be little to no documentation on how to get the data of the clicked item in the context menu hook. How to add the hook also seems a little … unclear.

Gripe #1: All of the documentation seems to assume contextual knowledge of where to put things and what to put in them. Really annoying for someone coming in and learning

Gripe #2: Dear God, why CoffeeScript?! In a day where traceur and other ES6 options exist, why CoffeeScript

If possible please respond using JavaScript code snippets. I can work with CoffeeScript but it does happen to invoke the gag reflex a bit. Thanks.

|

https://discuss.atom.io/t/sigh-docs-are-not-clear-working-with-contextmenus/14906/3

|

CC-MAIN-2018-13

|

refinedweb

| 270

| 65.32

|

Home › Forums › WPF controls › Xceed Toolkit Plus for WPF › WPF Propertygrid expand/collapse Category

I use the WPF property grid from the toolkit plus. Now i want to bind specific Categories to expand or collapse.

How can I implement this in with current version of toolkit plus?

I have tryed to set a style setter on xaml, which bind a type of toogle button (is a part of category), but this do not synchronize IsChecked with IsExpanded.

Does anyone have a advice or tip?

Thank you very much

Hi,

You can load your app with expanded/collpased categories by setting the ExpandedCategory attribute on your class object (no binding):

[CategoryOrder(“Information”, 0)]

[CategoryOrder( “Conections”, 1 )]

[ExpandedCategory( “Conections”, false )]

public class MyClass

{

[Category( “Information” )]

public bool IsMale { get; set; }

[Category( “Conections” )]

public string FirstName { get; set; }

}

But binding on Categories ? We would need more details of what you are trying to do.

You can have a look at our LiveExplorer to see some examples of PropertyGrid uses. It is available here :.

|

https://forums.xceed.com/forums/topic/wpf-propertygrid-expandcollapse-category/

|

CC-MAIN-2021-49

|

refinedweb

| 170

| 52.6

|

Query lock status of instance¶

Currently we only support locking/unlocking an instance but we are not able to query whether the instance is locked or not. This proposal is to add the lock status to the detailed view of an instance.

Problem description¶

We are able to lock/unlock an instance through nova API now. But we don’t return the lock status of the servers.

Proposed change¶

Display the lock status as part of the detailed view of an instance (that is, ‘nova show’)

Alternatives¶

The lock status can be identified by attempting to lock the instance, but if the instance is not already locked this has the side-effect of locking it. If another process simultaneously tries to query the lock status in the same fashion, it may get a false positive. Equally if another process tries to delete the instance while it is locked due to a query, it will fail when it shouldn’t.

REST API impact¶

Add following output to the response body of GET /v2/45210fba73d24dd681dc5c292c6b1e7f/ servers/a9dd1fd6-27fb-4128-92e6-93bcab085a98

Following lock info will be added in addition to existing output info.

If the locked is True, following info will be added into output:

If the locked is false, this will return following info:

Both v2 and v3 API will be affected.

In v2 API, extension os-server-locked-status will be added to advertise the extra information. alias: os-server-locked-status name: ServerLockStatus namespace: When the new extension “os-server-locked-status” is loaded, 2 new fields ‘locked’, ‘locked_by’ will be added to the os-hypervisor API.

In v3 API, locked information will be directly added to extended_status.py since locked_by is already there.

Other end user impact¶

This will allow user to query the lock status of an instance.

python-novaclient will be updated in order to show the lock status in the ‘nova show’ commands.

If there is no lock status info in the output from older v2 API, the new python-novaclient will exclude the lock status, locked_by fields.

Implementation¶

Testing¶

Tempest cases will be added, especially the lock/unlock related cases will check through the APIs to be added, e.g. the new lock status fields will be mandatory required fields.

|

http://specs.openstack.org/openstack/nova-specs/specs/kilo/approved/get-lock-status-of-instance.html

|

CC-MAIN-2019-35

|

refinedweb

| 376

| 60.24

|

The method named in the

$Run$ tag must have at least one parameter, called

options, which provides access to the script options.

... $Run$ mainMethod ...

def mainMethod(options): print "Running the main method"

It is possible to define an entry point to the use case that requires more than

one parameter. The definition of the

$Run$ tag only

defines the function name. The definition of the function within the script defines

how many parameters are needed.

... $Run$ main ...

# main method with positional arguments def main(options, filename, value): print "Running the main method" # Using the positional arguments supplied to the script. print "Using file: " + filename + " and value: " + value

In this example

main requires two parameters,

filename and

value which must be supplied on the command-line when the use case

script is run.

|

http://infocenter.arm.com/help/topic/com.arm.doc.dui0446z/vvi1443623165598.html

|

CC-MAIN-2020-10

|

refinedweb

| 132

| 58.11

|

Observe point changes/movements?

- StephenNixon last edited by gferreira

I'm hoping to record the changes made to points in glyphs. My current plan is to make a dictionary of point locations when a glyph is opened (or possibly just accessing the glif data), then comparing point locations when points are moved, and making a dictionary of point movements.

Is there an observer that can send a notification when points are moved?

I'm hoping for something along the lines of

pointMovedInCurrentGlyph, so I could add something like this to my code...

addObserver(self, 'recordPointMovement', "pointMovedInCurrentGlyph") # fake code

...but I'm not finding anything obvious.

Am I missing something? How might I best approach this?

There is a

Contour.PointsChangednotification for a contour object.

There are no notifications for each point object.

a tiny script that shows all font level notifications:

import vanilla class AllNotifications(object): def __init__(self): self.w = vanilla.Window((400, 400), minSize=(200, 200)) self.w.e = vanilla.TextEditor((0, 0, 0, 0)) # keep a ref otherwise weak ref will not work self.w._ref = self self.w.bind("close", self.windowClose) self.w.open() self.font = CurrentFont().naked() self.font.dispatcher.addObserver(self, "callback", notification=None, observable=None) def windowClose(self, sender): # remove the observer self.font.dispatcher.removeObserver(self, notification=None, observable=None) # remove the references del self.w._ref del self.font def callback(self, notification): txt = "\n%s --> %s" % (notification.name, notification.object) self.w.e.set(self.w.e.get() + txt) AllNotifications()

@frederik said in Observe point changes/movements?:

Contour.PointsChanged

Amazing, thank you! I'll give this a shot tonight and see where it gets me.

- StephenNixon last edited by gferreira

It's working nicely to show that a point moved!

Is there a simple way to know which point moved, once I get the notification? The notification I receive is:

Contour.PointsChanged --> <lib.fontObjects.doodleContour.DoodleContour object at 0x112f24320>

Ideally, I'd want to get to data which was the point index with its parent contour index.

If I print

notification.data, it only gives

None.

Does the

object at 0x112f24320mean anything?

Would I have to do something like looping through all points to compare them to a "before" state (possibly in another layer), then assign changed points a unique identifier with generateIdentifierForPoint(point)?

the

Contour.PointsChangedcalls your callback with a notification object:

notification.object # in this case the contour object notification.data # optional data related to the event, in this case it will be None

this notification is sent on different events: inserting, removing, changing start point...

in most cases the moved points are the selected ones:

glyph.selectedPoints

Ohh of course, that is simpler than I was thinking!

I suppose that the only time points would move without being selected is if they were moved by a script, right?

a script or something else like fe a transormation from the inspector

Makes sense. Thanks so much for your help!

|

https://forum.robofont.com/topic/515/observe-point-changes-movements

|

CC-MAIN-2020-40

|

refinedweb

| 492

| 51.95

|

Board index » scheme

All times are UTC

In any case, the need to exclude one or the other for this particular kind of identifier seems not of a piece with the rest of Scheme.

--d

--

GTE Internetworking, Powered by BBN, Burlington, MA *** DON'T SEND TECHNICAL QUESTIONS DIRECTLY TO ME, post them to newsgroups. Please DON'T copy followups to me -- I'll assume it wasn't posted to the group.

>If it were the latter, how would you ever get unquote-splicing? After all,

>pretty clear that the specification "If a comma appears followed

I guess my question should have been: Why allow legal

of them won't unquote them the way it does for other identifiers? The "Identifiers" section doesn't

to some considerable effort toward making illegal what would have been rather harmless identifiers (e.g., 401k, 9-to-5, -!-, .iota).

-Steve

>>seem to prefer the former.)

>former would be the correct parsing.

It *will*, if only you will put some whitespace in to disambiguate

> (define foo '(bar baz))

(gorp bar baz)

(gorp bletch) >

-Rob

-----

Applied Networking Silicon Graphics, Inc. Phone: 650-933-1673 1600 Amphitheatre Pkwy. FAX: 650-933-0511 Mountain View, CA 94043 PP-ASEL-IA

1. identifier names

2. Identifier name length in g77

3. String representation of an identifier's name

4. identifiers vs. IDENTIFIERS

5. CALL, identifier-2, and address-identifiers

6. Legal proc name and namespace

7. Time for a Fresh Scheme Standard: Say Goodbye to the RnRS Relic

8. Derived Syntax in RnRS and beyond

9. Time for a Fresh Scheme Standard: Say Goodbye to the RnRS Relic

10. Old RnRS available?

11. Time for a Fresh Scheme Standard: Say Goodbye to the RnRS Relic

12. Time for a Fresh Scheme Standard: Say Goodbye to the RnRS Relic

|

http://computer-programming-forum.com/40-scheme/011850e021c92f57.htm

|

CC-MAIN-2019-13

|

refinedweb

| 298

| 75.5

|

Hello and welcome back to the channel. In this video you will learn one of the important React hooks and it's useMemo. will be much easier to understand how useMemo works by real example so let's create a list of users, an input and a search button.

const users = [ { id: "1", name: "Foo" }, { id: "2", name: "Bar" }, ]; function App() { const [text, setText] = React.useState(""); const [search, setSearch] = useState(""); const handleText = (event) => { setText(event.target.value); }; const handleSearch = () => { setSearch(text); }; return ( <div> <h1>React hooks for beginners</h1> <input type="text" value={text} onChange={handleText} /> <button type="button" onClick={handleSearch}> Search </button> </div> ); }

So we created an array of initial users on the top. Also we have 2 states, one for changed text in the input and other we are setting only when we click on the button. This is the crucial difference.

Now we want to search for users so we will render only filtered by search users.

function App() { ... const filteredUsers = users.filter((user) => { console.log("filtering users"); return user.name.toLowerCase().includes(search.toLowerCase()); }), return ( <div> <h1>React hooks for beginners</h1> <input type="text" value={text} onChange={handleText} /> <button type="button" onClick={handleSearch}> Search </button> <ul> {filteredUsers.map((filteredUser) => ( <div key={filteredUser.id}>{filteredUser.name}</div> ))} </ul> </div> ); }

As you can see in browser now our users are rendered and when we click search button they are filtering correctly and rendering on the screen. But what problem do we have here? As you can see each letter that we are typing leads to rerendering of the component. Which is fine. But rerendering means that every line inside component will be called from beginning to the end. And this is exactly what happens. This is why every time when we are typing we see our console log inside filtering of users. Because we are calling this filtering again and again.

The problem here is obvious. If we have lots of data in array (like 1000 or users) then it is not good for performance because we are doing filter with exactly same data because actually our search value didn't change.

This is exactly where useMemo comes into play. It brings the idea of memoization. This means that if value didn't change then we just store the result of some slow computed function in the variable and use it until our variables don't change. As you see our users filter depends only on search.

const filteredUsers = useMemo( () => users.filter((user) => { console.log("filtering users"); return user.name.toLowerCase().includes(search.toLowerCase()); }), [search] );

So we just put our filter as a return of useMemo. Also we set a dependencies array. It works the same way as in useEffect. Our useMemo will call the function only when search changes.

As you can see in browser now our filtering is not triggered when we are typing but only when we click on submit button.

It's also super important to remember that if you forget to write a dependency array or it is empty your useMemo function is doing nothing. So it is crucial to provide a dependency array.

Also you might ask why we don't write the every line and constuction with useMemo if it's so effective. Because every optimisation comes at a cost and calling useMemo and storing value additionally all consumes performance. So it is recommended to use memo only when you have performance problem or you know that exactly here it will be the bottleneck.

So this is how you can use useMemo.

|

https://monsterlessons-academy.com/posts/how-to-use-use-memo-hook-in-react

|

CC-MAIN-2022-40

|

refinedweb

| 592

| 57.77

|

# Indexes in PostgreSQL — 6 (SP-GiST)

We've already discussed PostgreSQL [indexing engine](https://habr.com/ru/company/postgrespro/blog/441962/), [the interface of access methods](https://habr.com/ru/company/postgrespro/blog/442546/), and three methods: [hash index](https://habr.com/post/442776/), [B-tree](https://habr.com/ru/company/postgrespro/blog/443284/), and [GiST](https://habr.com/ru/company/postgrespro/blog/444742/). In this article, we will describe SP-GiST.

SP-GiST

=======

First, a few words about this name. The «GiST» part alludes to some similarity with the same-name access method. The similarity does exist: both are generalized search trees that provide a framework for building various access methods.

«SP» stands for space partitioning. The space here is often just what we are used to call a space, for example, a two-dimensional plane. But we will see that any search space is meant, that is, actually any value domain.

SP-GiST is suitable for structures where the space can be recursively split into *non-intersecting* areas. This class comprises quadtrees, k-dimensional trees (k-D trees), and radix trees.

Structure

---------

So, the idea of SP-GiST access method is to split the value domain into *non-overlapping* subdomains each of which, in turn, can also be split. Partitioning like this induces *non-balanced* trees (unlike B-trees and regular GiST).

The trait of being non-intersecting simplifies decision-making during insertion and search. On the other hand, as a rule, the trees induced are of low branching. For example, a node of a quadtree usually has four child nodes (unlike B-trees, where the nodes amount to hundreds) and larger depth. Trees like these well suit the work in RAM, but the index is stored on a disk and therefore, to reduce the number of I/O operations, nodes have to be packed into pages, and it is not easy to do this efficiently. Besides, the time it takes to find different values in the index, may vary because of differences in branch depths.

This access method, same way as GiST, takes care of low-level tasks (simultaneous access and locks, logging, and a pure search algorithm) and provides a specialized simplified interface to enable adding support for new data types and for new partitioning algorithms.

An internal node of SP-GiST tree stores references to child nodes; a *label* can be defined for each reference. Besides, an internal node can store a value called a *prefix*. Actually this value is not obligatory a prefix; it can be regarded as an arbitrary predicate that is met for all child nodes.

Leaf nodes of SP-GiST contain a value of the indexed type and a reference to a table row (TID). The indexed data itself (search key) can be used as the value, but not obligatory: a shortened value can be stored.

In addition, leaf nodes can be grouped into lists. So, an internal node can reference not only one value, but a whole list.

Note that prefixes, labels, and values in leaf nodes have their own data types, independent of one another.

Same way as in GiST, the main function to define for search is *the consistency function*. This function is called for a tree node and returns a set of child nodes whose values «are consistent» with the search predicate (as usual, in the form "*indexed-field operator expression*"). For a leaf node, the consistency function determines whether the indexed value in this node meets the search predicate.

The search starts with the root node. The consistency function permits to find out which child nodes it makes sense to visit. The algorithm repeats for each of the nodes found. The search is depth-first.

At the physical level, index nodes are packed into pages to make work with the nodes efficient from the point of view of I/O operations. Note that one page can contain either internal or leaf nodes, but not both.

Example: quadtree

-----------------

A quadtree is used to index points in a plane. An idea is to recursively split areas into four parts (quadrants) with respect to *the central point*. The depth of branches in such a tree can vary and depends on the density of points in appropriate quadrants.

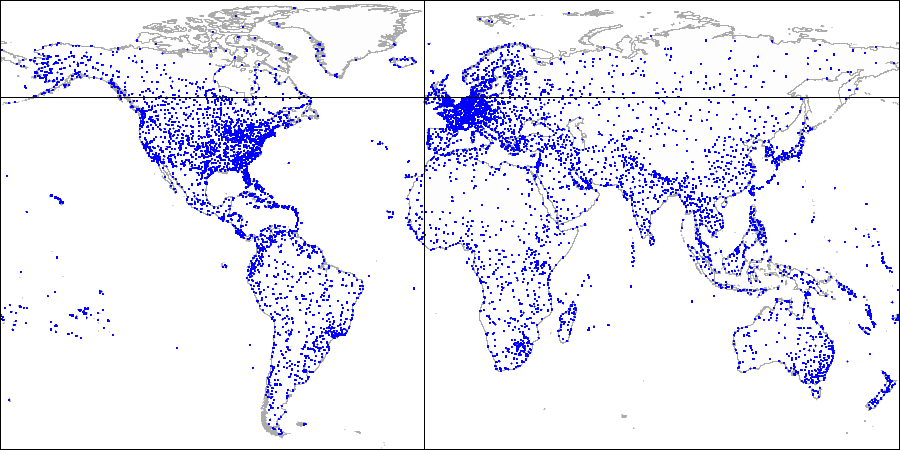

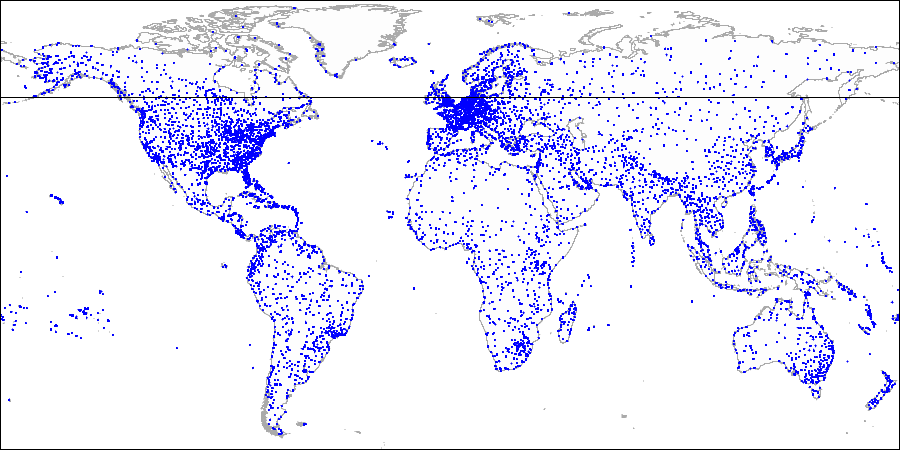

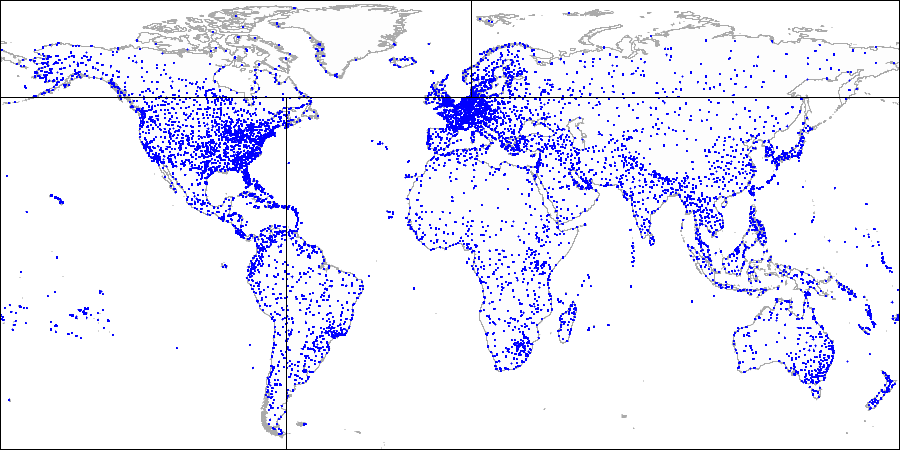

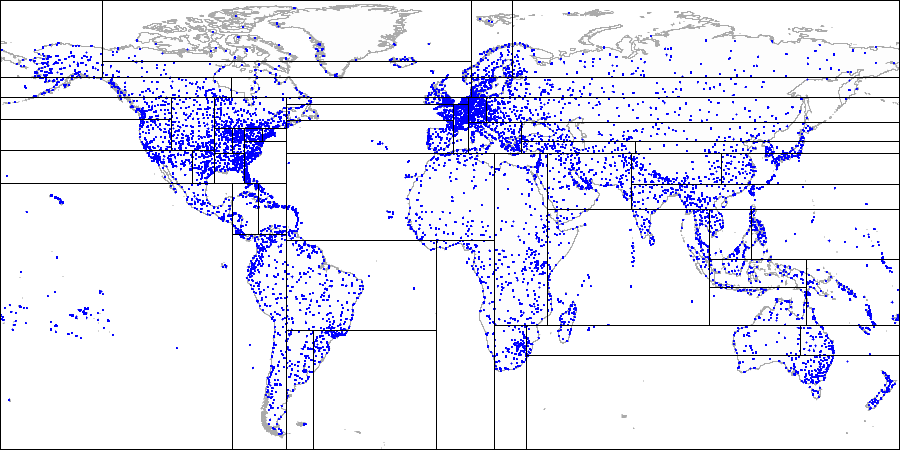

This is what it looks like in figures, by example of the [demo database](https://postgrespro.com/docs/postgrespro/11/demodb-bookings) augmented by airports from the site [openflights.org](https://openflights.org/data.html). By the way, recently we released a new version of the database in which, among the rest, we replaced longitude and latitude with one field of type «point».

*First, we split the plane into four quadrants...*

*Then we split each of the quadrants...*

*And so on until we get the final partitioning.*

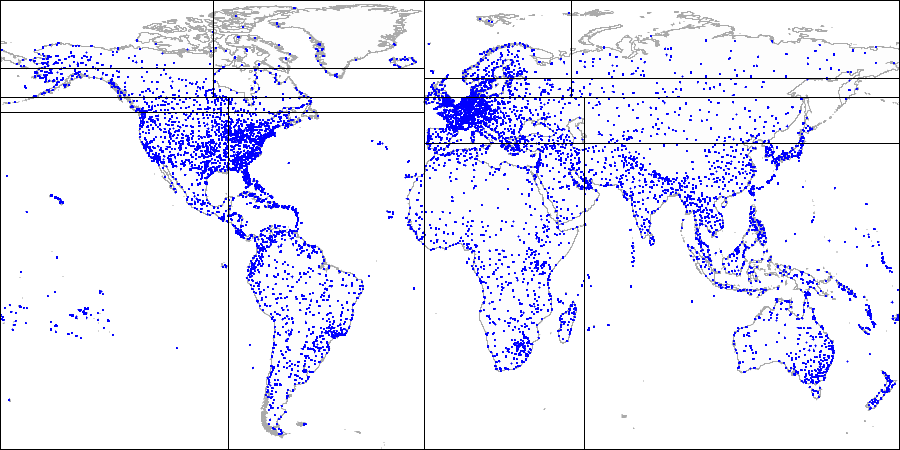

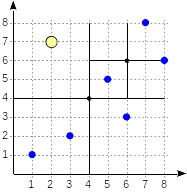

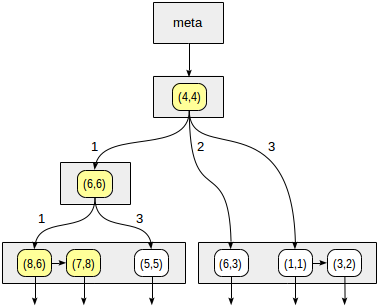

Let's provide more details of a simple example that we already considered in the [GiST-related article](https://habr.com/ru/company/postgrespro/blog/444742/). See what the partitioning may look like in this case:

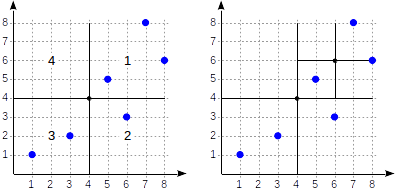

The quadrants are numbered as shown in the first figure. For definiteness sake, let's place child nodes from left to right exactly in the same sequence. A possible index structure in this case is shown in the figure below. Each internal node references a maximum of four child nodes. Each reference can be labeled with the quadrant number, as in the figure. But there is no label in the implementation since it is more convenient to store a fixed array of four references some of which can be empty.

Points that lie on the boundaries relate to the quadrant with the smaller number.

```

postgres=# create table points(p point);

postgres=# insert into points(p) values

(point '(1,1)'), (point '(3,2)'), (point '(6,3)'),

(point '(5,5)'), (point '(7,8)'), (point '(8,6)');

postgres=# create index points_quad_idx on points using spgist(p);

```

In this case, «quad\_point\_ops» operator class is used by default, which contains the following operators:

```

postgres=# select amop.amopopr::regoperator, amop.amopstrategy

from pg_opclass opc, pg_opfamily opf, pg_am am, pg_amop amop

where opc.opcname = 'quad_point_ops'

and opf.oid = opc.opcfamily

and am.oid = opf.opfmethod

and amop.amopfamily = opc.opcfamily

and am.amname = 'spgist'

and amop.amoplefttype = opc.opcintype;

```

```

amopopr | amopstrategy

-----------------+--------------

<<(point,point) | 1 strictly left

>>(point,point) | 5 strictly right

~=(point,point) | 6 coincides

<^(point,point) | 10 strictly below

>^(point,point) | 11 strictly above

<@(point,box) | 8 contained in rectangle

(6 rows)

```

For example, let's look how the query `select * from points where p >^ point '(2,7)'` will be performed (find all points that lie above the given one).

We start with the root node and use the consistency function to select to which child nodes to descend. For the operator `>^`, this function compares the point (2,7) with the central point of the node (4,4) and selects the quadrants that may contain the points sought, in this case, the first and fourth quadrants.

In the node corresponding to the first quadrant, we again determine the child nodes using the consistency function. The central point is (6,6), and we again need to look through the first and fourth quadrants.

The list of leaf nodes (8,6) and (7,8) corresponds to the first quadrant, of which only the point (7,8) meets the query condition. The reference to the fourth quadrant is empty.

In the internal node (4,4), the reference to the fourth quadrant is empty as well, which completes the search.

```

postgres=# set enable_seqscan = off;

postgres=# explain (costs off) select * from points where p >^ point '(2,7)';

```

```

QUERY PLAN

------------------------------------------------

Index Only Scan using points_quad_idx on points

Index Cond: (p >^ '(2,7)'::point)

(2 rows)

```

### Internals

We can explore the internal structure of SP-GiST indexes using "[gevel](http://www.sai.msu.su/~megera/wiki/Gevel)" extension, which was mentioned earlier. Bad news is that due to a bug, this extension works incorrectly with modern versions of PostgreSQL. Good news is that we plan to augment «pageinspect» with the functionality of «gevel» ([discussion](https://www.postgresql.org/message-id/flat/accae316-5e4d-8963-0c3d-277ef13c396c@postgrespro.ru#accae316-5e4d-8963-0c3d-277ef13c396c@postgrespro.ru)). And the bug has already been fixed in «pageinspect».

> Again, bad news is that the patch has stuck with no progress.

>

>

For example, let's take the extended demo database, which was used to draw pictures with the world map.

```

demo=# create index airports_coordinates_quad_idx on airports_ml using spgist(coordinates);

```

First, we can get some statistics for the index:

```

demo=# select * from spgist_stats('airports_coordinates_quad_idx');

```

```

spgist_stats

----------------------------------

totalPages: 33 +

deletedPages: 0 +

innerPages: 3 +

leafPages: 30 +

emptyPages: 2 +

usedSpace: 201.53 kbytes+

usedInnerSpace: 2.17 kbytes +

usedLeafSpace: 199.36 kbytes+

freeSpace: 61.44 kbytes +

fillRatio: 76.64% +

leafTuples: 5993 +

innerTuples: 37 +

innerAllTheSame: 0 +

leafPlaceholders: 725 +

innerPlaceholders: 0 +

leafRedirects: 0 +

innerRedirects: 0

(1 row)

```

And second, we can output the index tree itself:

```

demo=# select tid, n, level, tid_ptr, prefix, leaf_value

from spgist_print('airports_coordinates_quad_idx') as t(

tid tid,

allthesame bool,

n int,

level int,

tid_ptr tid,

prefix point, -- prefix type

node_label int, -- label type (unused here)

leaf_value point -- list value type

)

order by tid, n;

```

```

tid | n | level | tid_ptr | prefix | leaf_value

---------+---+-------+---------+------------------+------------------

(1,1) | 0 | 1 | (5,3) | (-10.220,53.588) |

(1,1) | 1 | 1 | (5,2) | (-10.220,53.588) |

(1,1) | 2 | 1 | (5,1) | (-10.220,53.588) |

(1,1) | 3 | 1 | (5,14) | (-10.220,53.588) |

(3,68) | | 3 | | | (86.107,55.270)

(3,70) | | 3 | | | (129.771,62.093)

(3,85) | | 4 | | | (57.684,-20.430)

(3,122) | | 4 | | | (107.438,51.808)

(3,154) | | 3 | | | (-51.678,64.191)

(5,1) | 0 | 2 | (24,27) | (-88.680,48.638) |

(5,1) | 1 | 2 | (5,7) | (-88.680,48.638) |

...

```

But keep in mind that «spgist\_print» outputs not all leaf values, but only the first one from the list, and therefore shows the structure of the index rather than its full contents.

Example: k-dimensional trees

----------------------------

For the same points in the plane, we can also suggest another way to partition the space.

Let's draw *a horizontal line* through the first point being indexed. It splits the plane into two parts: upper and lower. The second point to be indexed falls into one of these parts. Through this point, let's draw *a vertical line*, which splits this part into two ones: right and left. We again draw a horizontal line through the next point and a vertical line through yet the next point, and so on.

All internal nodes of the tree built this way will have only two child nodes. Each of the two references can lead either to the internal node that is next in the hierarchy or to the list of leaf nodes.

This method can be easily generalized for k-dimensional spaces, and therefore, the trees are also called k-dimensional (k-D trees) in the literature.

Explaining the method by example of airports:

*First we split the plane into upper and lower parts...*

*Then we split each part into left and right parts...*

*And so on until we get the final partitioning.*

To use a partitioning just like this, we need to explicitly specify the operator class **«kd\_point\_ops»** when creating an index.

```

postgres=# create index points_kd_idx on points using spgist(p kd_point_ops);

```

This class includes exactly the same operators as the «default» class «quad\_point\_ops».

### Internals

When looking through the tree structure, we need to take into account that the prefix in this case is only one coordinate rather than a point:

```

demo=# select tid, n, level, tid_ptr, prefix, leaf_value

from spgist_print('airports_coordinates_kd_idx') as t(

tid tid,

allthesame bool,

n int,

level int,

tid_ptr tid,

prefix float, -- prefix type

node_label int, -- label type (unused here)

leaf_value point -- list node type

)

order by tid, n;

```

```

tid | n | level | tid_ptr | prefix | leaf_value

---------+---+-------+---------+------------+------------------

(1,1) | 0 | 1 | (5,1) | 53.740 |

(1,1) | 1 | 1 | (5,4) | 53.740 |

(3,113) | | 6 | | | (-7.277,62.064)

(3,114) | | 6 | | | (-85.033,73.006)

(5,1) | 0 | 2 | (5,12) | -65.449 |

(5,1) | 1 | 2 | (5,2) | -65.449 |

(5,2) | 0 | 3 | (5,6) | 35.624 |

(5,2) | 1 | 3 | (5,3) | 35.624 |

...

```

Example: radix tree

-------------------

We can also use SP-GiST to implement a radix tree for strings. The idea of a radix tree is that a string to be indexed is not fully stored in a leaf node, but is obtained by concatenating the values stored in the nodes above this one up to the root.

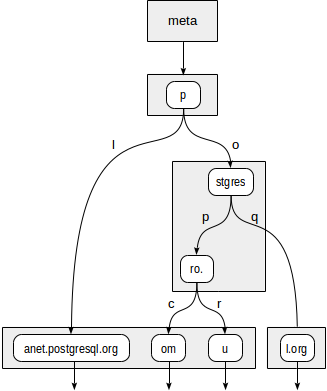

Assume, we need to index site URLs: «postgrespro.ru», «postgrespro.com», «postgresql.org», and «planet.postgresql.org».

```

postgres=# create table sites(url text);

postgres=# insert into sites values ('postgrespro.ru'),('postgrespro.com'),('postgresql.org'),('planet.postgresql.org');

postgres=# create index on sites using spgist(url);

```

The tree will look as follows:

The internal nodes of the tree store prefixes common to all child nodes. For example, in child nodes of «stgres», the values start with «p» + «o» + «stgres».

Unlike in quadtrees, each pointer to a child node is additionally labeled with one character (more exactly, with two bytes, but this is not so important).

«text\_ops» operator class supports B-tree-like operators: «equal», «greater», and «less»:

```

postgres=# select amop.amopopr::regoperator, amop.amopstrategy

from pg_opclass opc, pg_opfamily opf, pg_am am, pg_amop amop

where opc.opcname = 'text_ops'

and opf.oid = opc.opcfamily

and am.oid = opf.opfmethod

and amop.amopfamily = opc.opcfamily

and am.amname = 'spgist'

and amop.amoplefttype = opc.opcintype;

```

```

amopopr | amopstrategy

-----------------+--------------

~<~(text,text) | 1

~<=~(text,text) | 2

=(text,text) | 3

~>=~(text,text) | 4

~>~(text,text) | 5

<(text,text) | 11

<=(text,text) | 12

>=(text,text) | 14

>(text,text) | 15

(9 rows)

```

The distinction of operators with tildes is that they manipulate *bytes* rather than *characters*.

Sometimes, a representation in the form of a radix tree may turn out to be much more compact than B-tree since the values are not fully stored, but reconstructed as the need arises while descending through the tree.

Consider a query: `select * from sites where url like 'postgresp%ru'`. It can be performed using the index:

```

postgres=# explain (costs off) select * from sites where url like 'postgresp%ru';

```

```

QUERY PLAN

------------------------------------------------------------------------------

Index Only Scan using sites_url_idx on sites

Index Cond: ((url ~>=~ 'postgresp'::text) AND (url ~<~ 'postgresq'::text))

Filter: (url ~~ 'postgresp%ru'::text)

(3 rows)

```

Actually, the index is used to find values that are greater or equal to «postgresp», but less than «postgresq» (Index Cond), and then matching values are chosen from the result (Filter).

First, the consistency function must decide to which child nodes of «p» root we need to descend. Two options are available: «p» + «l» (no need to descend, which is clear even without diving deeper) and «p» + «o» + «stgres» (continue the descent).

For «stgres» node, a call to the consistency function is needed again to check «postgres» + «p» + «ro.» (continue the descent) and «postgres» + «q» (no need to descend).

For «ro.» node and all its child leaf nodes, the consistency function will respond «yes», so the index method will return two values: «postgrespro.com» and «postgrespro.ru». One matching value will be selected of them at the filtering stage.

### Internals

When looking through the tree structure, we need to take data types into account:

```

postgres=# select * from spgist_print('sites_url_idx') as t(

tid tid,

allthesame bool,

n int,

level int,

tid_ptr tid,

prefix text, -- prefix type

node_label smallint, -- label type

leaf_value text -- leaf node type

)

order by tid, n;

```

Properties

----------

Let's look at the properties of SP-GiST access method (queries [were provided earlier](https://habr.com/ru/company/postgrespro/blog/442546/)):

```

amname | name | pg_indexam_has_property

--------+---------------+-------------------------

spgist | can_order | f

spgist | can_unique | f

spgist | can_multi_col | f

spgist | can_exclude | t

```

SP-GiST indexes cannot be used for sorting and for support of the unique constraint. Additionally, indexes like this cannot be created on several columns (unlike GiST). But it is permitted to use such indexes to support exclusion constraints.

The following index-layer properties are available:

```

name | pg_index_has_property

---------------+-----------------------

clusterable | f

index_scan | t

bitmap_scan | t

backward_scan | f

```

The difference from GiST here is that clustering is impossible.

And eventually the following are column-layer properties:

```

name | pg_index_column_has_property

--------------------+------------------------------

asc | f

desc | f

nulls_first | f

nulls_last | f

orderable | f

distance_orderable | f

returnable | t

search_array | f

search_nulls | t

```

Sorting is not supported, which is predictable. Distance operators for search of nearest neighbors are not available in SP-GiST so far. Most likely, this feature will be supported in future.

> It is supported in upcoming PostgreSQL 12, the patch by Nikita Glukhov.

>

>

SP-GiST can be used for index-only scan, at least for the discussed operator classes. As we have seen, in some instances, indexed values are explicitly stored in leaf nodes, while in the other ones, the values are reconstructed part by part during the tree descent.

### NULLs

Not to complicate the picture, we haven't mentioned NULLs so far. It is clear from the index properties that NULLs are supported. Really:

```

postgres=# explain (costs off)

select * from sites where url is null;

```

```

QUERY PLAN

----------------------------------------------

Index Only Scan using sites_url_idx on sites

Index Cond: (url IS NULL)

(2 rows)

```

However, NULL is something foreign for SP-GiST. All operators from «spgist» operator class must be strict: an operator must return NULL whenever any of its parameters is NULL. The method itself ensures this: NULLs are just not passed to operators.

But to use the access method for index-only scan, NULLs must be stored in the index anyway. And they are stored, but in a separate tree with its own root.

Other data types

----------------

In addition to points and radix trees for strings, other methods based on SP-GiST are also implemented PostgreSQL:

* «box\_ops» operator class provides a quadtree for rectangles.

Each *rectangle* is represented by *a point in a four-dimensional space*, so the number of quadrants equals 16. An index like this can beat GiST in performance when there are a lot of intersections of the rectangles: in GiST it is impossible to draw boundaries so as to separate intersecting objects from one another, while there are no such issues with points (even four-dimensional).

* «range\_ops» operator class provides a quadtree for intervals.

*An interval* is represented by *a two-dimensional point*: the lower boundary becomes the abscissa, and the upper boundary becomes the ordinate.

[Read on](https://habr.com/ru/company/postgrespro/blog/448746/).

|

https://habr.com/ru/post/446624/

| null | null | 3,189

| 55.03

|

Answered by:

XML Schema Editor (Visual Studio 2008 RTM)?

How do I open / find the XML Schema editor in VS2008? I have an XSD file but it only lets me edit the raw XML in the text editor mode. In VS2003, we had the ability to switch the editor between visual / text modes. Having trouble finding the corresponding functionality in VS2008. Note, this is not a schema for a dataset.

thanks

scottm

Question

Answers

According to the XML team's blog the schema designer will be released later:

Hi Ian,

As I've mentioned in another thread (), that schema contains a few errors (empty choice and referencing schematron namespace which wasn't imported). When you fix them, the Editor will not complain about included files..

All replies

According to the XML team's blog the schema designer will be released later:

Future version = VS2020?

Please get a working version (CTP or whatever) out as soon as possible. I relied a lot on this tool in VS2005 (crash-buggy as it was) for contract-first web service development. Now, I am forced to the ludicrous alternative of opening VS2005 just for the xsd design view...

Oh and while you are at it, please forward a complain-o-gram to whichever program team owns the web form designer and lets get get multiple-selection of web controls back too. Us enterprise forms guys are just cavemen developers, your modern ways frighten and confuse us.

While the XML Schema Editor may not be ready for use, I'm more concerned that the XML Editor reports errors that are easily resolved in code with the .NET 2.0 Framework (at least in the VS2008 Beta2 release).

With a schemaset that is file-based, linked in to a project, one XSD file of an ISO standard (ISO 19139 - 60+ files, 7 folders) has includes that have no relative path - because the included files are in the same folder as that file (coverage.xsd). All other imports / includes in the complex schemaset are resolved correctly.Code Snippet

<include schemaLocation="feature.xsd"/>

<include schemaLocation="valueObjects.xsd"/>

<include schemaLocation="grids.xsd"/>

<include schemaLocation="geometryAggregates.xsd"/>

The wavy line under the word include cannot be shown here, in the snippet that I have pasted. My project's code to load all schemas from a top or starting file is unexceptional - just schemaSet.Add - and it's automatically resolved.

Having said all that, I'm not sure if VS2008 RTM's XML Editor is misbehaved.

And I certainly would love to see a new release of the XML Schema Editor.

Hi Ian,

As I've mentioned in another thread (), that schema contains a few errors (empty choice and referencing schematron namespace which wasn't imported). When you fix them, the Editor will not complain about included files.

Hello Stan

You're right - I have been changing this troublesome schema file, by either deleting line 423 or using Jeni's suggestion/simplification, but the 'wavy line' problem was indeed when the file coverage.xsd was as-is.

That's nice to know - I wondered how on earth the IDE and the .NET assemblies could be out of synch.

Thanks for pointing that out!

But it's never been a problem with any Schematron crud - I am using the original, untainted release from ISO (URL for download from EDEN was given in another post of mine, on the other thread). That doesn't have assertions stuffed into it.

Interestingly, 3 posts (possibly 2 of mine) have disappeared from that thread. Has someone reported me for "abuse", I wonder! Or, once the question has been answered (marked as green), are further posts disallowed / deleted?.

My code (validation handler) belatedly recognized that Warnings are serious when coming out of the System.XML(etc) assemblies - and I had to "dig deeper" into the exception-tracking classes - but eventually agreed with the IDE.

As a side note, it would be helpful if the warnings included some easily-identifiable text. The filenotfoundexception is one that i could pick up, but it's hard to error-trap and code sensibly for some of the warnings, like the coverage.xsd "line 423" problem with the <choice/>.

My posts miraculously re-appeared today.

My I remind you that this thread is about the MISSING XML schema editor in VS2008?

I cannot understand that MS promotes VS2008 with a lot of bells and whistles, while stuff you have learned to appreciate simply disappears without notice.

Can anyone from MS give some insight in the time schedule for this vital module?

Sorry, but I'm more interested in some of my minor problems.

You have a couple of options -

- Use VS2005 (does the Express version even have the XML Schema Editor - or not?). It works side-by-side with VS2008

- Install VS2008 Beta 2, and download the editor/designer (XML Schema Designer) August 07 CTP. I haven't used it much, but I haven't found anything amiss, yet.

I know nothing, but it's a possibility that the XML Schema Designer that was released in 08/2007 might be enhanced some time before VS vNext to work with VS2008.

Martin, another option (not integrated into Visual Studio, so it is a fall-back only):

There is a free XSD Editor from Liquid Technologies, called Liquid XML Studio. It looks very capable.

Actually, I haven't used it. I have Stylus Studio 2008 XML Enterprise Suite, which is nice (just a pity it is java-based).

But I am trialling their commercial product, called Liquid XML Data Binding 2008, and it is the ONLY tool that I have discovered that can generate code classes from the 54 schema files in the ISO 19139 standard, without a hiccup. It has a $1000+ pricetag, though.

Or you have to rely on some commerical (most if them are not free) XML designer products available on the web.

Example. XMLSpy () or graphical XML Schema editor () from Liquid Technologies.

Thanks

- No there is still no news from MS, here you can find a similar bug report which was simply ignored.

Currently I'm using Liquid Studio designer as 30 day trail which is not free btw.

MS has also stopped the project Linq to XSD where I was also looking forward too, has MS abandoned XSD schemes? ... complete silence :(

Hopefully waiting will pay off, a bit disappointed in MS.

- Edited by Freek Van Mensel Tuesday, May 26, 2009 7:32 AM

|

https://social.msdn.microsoft.com/Forums/en-US/84212ff1-ca41-4a86-bc0a-fe9d59b902f9/xml-schema-editor-visual-studio-2008-rtm?forum=xmlandnetfx

|

CC-MAIN-2016-44

|

refinedweb

| 1,074

| 64.3

|

You are not logged in.

Pages: 1

hi all

whats the problem:

a test application written in c segfaults on printf() call's

the application is used to test a library i've written for distributed computing purpose

in current state, not that special, just sending a few udp broadcasts and doing tcp connects

more details on the segfault behaviour:

the prog is compiled like this

gcc -g -ggdb -g3 -ggdb3 -Wall -Wextra -Wpadded -O0 -I../../external -I../../src -o main ./main.c

running with gdb shows the following backtrace after the segfault:

Program terminated with signal 11, Segmentation fault. #0 _IO_vfprintf_internal (s=0xbf9cff4c, format=0x2 <Address 0x2 out of bounds>, ap=0xbf9cff7a "A\037w") at vfprintf.c:1288 1288 vfprintf.c: No such file or directory. in vfprintf.c (gdb) bt #0 _IO_vfprintf_internal (s=0xbf9cff4c, format=0x2 <Address 0x2 out of bounds>, ap=0xbf9cff7a "A\037w") at vfprintf.c:1288 #1 0x006818a0 in __printf (format=0x8058d4d "\ncommand: ") at printf.c:35 #2 0x08058009 in main (argc=5, argv=0xbf9d04f4) at ./main.c:197

let's have a look at main.c:196 - main.c:198

while(buffer[0]!='q' && run){ printf("\ncommand: "); if(scanf("%s", buffer)<=0) continue;

what else can be said:

if i remove every printf in the whole app AND library it doesnt segfault but the while loop above ends after a few loops cause run is switched to 0

the weird thing - i removed the SIGTERM handler which set's run to 0, so it shouldnt break until i enter q, which in fact i didnt

that's why i assume some application space corruption im not expirienced with

after adding some printf in the library it segfaults again after a few loops on printf, nowhere else

the printf can also be whatever you want

i also tried valgrind and it doesnt detect any memory leaks or wrong assignments or such

it really doesnt print anything remarkable until the segfault

what i think:

my opinion is that i maybe do something wrong with a few pointers and cause something to break

im using a few function pointers for library callbacks and a double linked list which does a few complicated pointer arithmetic operations

it seems that the problem is also tightly coupled to the test application and library im working on

i tried to break it down to a test case but i dont the the error to occur on smaller pieces

questions:

since i don't think this is a problem where someone can answer "its caused by ...."

my question is

"given a correctly working libc, ubuntu installation (natty) and no hardware failure, which i just assume, what needs to be done to cause printf to segfault like this altough it is called with a static string like printf("test");"?

i think this goes into something like corrupting heap or stack which im not that much familiar with - maybe there are some resources on how stack/heap corruption is done?

thanks for any help on this

[EDIT]

from … -the-heap/

i got a simple example for a stack overflow

int main() { char nStack[100000000]; printf("test"); return 0; }

which causes printf to segfault

on my ubuntu installation i found that a size of 8384000

results in a segfault pretty much the same way as i described above

so it seems that i corrupt my function stack and after reading a few articles on this topic i think i was using too much network buffer variables in combination

with a bunch of function calls using local struct's

altough corrupting nearly 8MB of stack seems hard to believe, anyway ill try to reduce the memory footprint as a first step

[/EDIT]

Last edited by thinking (2011-08-31 03:28 PM)

I doubt it's a pure stack overflow of that sort (ie: just using too much stack space)... Much more likely is dynamic heap corruption... Though, I'm not sure why valgrind wouldn't spot it for you... It's always been great at catching such things for me... Perhaps it is stack corruption of some sort, such as overflowing a stack buffer and trashing other important stuff also on the stack (like function return addresses)... It's hard to say without seeing more code...

However, just from the 3 lines you posted, I don't like the look of the third one... A scanf("%s") is unbounded, so could easily overflow your buffer there... You'd be far better off using something like fgets() or getline() for input...

From the lines posted, I would guess that 'buffer' isn't always NUL terminated.

(or line terminated, for that matter.) Make sure the receiver always terminates

the buffer with a NUL before processing the data.

Stack size you can see in /proc/$PID/smaps, for instance. So you could check

that when it's hanging in gdb after a crash.

Took me forever to find out that X crashed with no error messages because

it used more stack than I allowed with ulimit -s.

I got confused by that scanf, thougth 'buffer' was the input instead of stdin.

That's indeed a strange way of using scanf(), I'd do what Rob suggests and

use something better fitting that's also not unbound.

What I meant is the buffer where the UDP packet is received into. But if the

UDP data aren't strings then never mind about NUL termination.

it seems i have solved the problem, although i have no idea what exactly went wrong

in general i did two things:

1) adding code to monitor the stack size during runtime

2) rearranging my source

1)

as it seemd that stack corruption plays a role on this i added a local variable in main like this

char *stackstart = NULL; int main(){ int someotherlocalvar; char bottom_of_stack; stackstart = (char*)&bottom_of_stack; ...

in whatever function call i do i get the size of the current stack using this

void somefunction(int someparam){ char current_stack_position; printf("the current stack size is: %d bytes\n", (int)(stackstart-(¤t_stack_position)) ); ...

there are a bunch of things to note on this code:

o) its not very portable, for example the stack size on my linux box grows from top to bottom

thats why i do stackstart-current_stack_position

o) this method is not very accurate

o) this is just a rough example of what i did and it actually worked for me - i would not recommend using this in production code

have a look at … -size-in-c for another example

using this method i noticed that my global variables are garbaged after a few function calls after the first loop

since i couldn't find any further reason for this i got to step 2 which solved the problem

2)

on project start for fast development i made the decision that i dont split the code in .c and .h files but only use .h files

with functions directly in them

i also had #ifndef/#define/#endif constructs on top/end of every file

in case of a small lib this method is nice to see code changes pretty fast, so not having to do make clean; make and such

as the code grows it gets clumsy

to be honest i made some bad expirience with g++ compiliation with templates and mid sized projects, but this also just reflects my lack of knowledge on compilation units

i dont know what was the problem but i think gcc caused it during linking in combination with some global debugging variables i was using, could also be a gcc bug - hard to track at this point

so i rearranged everything to autotools and a minimal makefile for my own testing purpose

that's it - the code is working now without any change of function internals

just cleanly separating in .c,.h files did it

@robseace and i3839

the reason i used scanf, was that its 'just' for testing and i'm the only user currently

but you're right, the scanf isnt very nice for obvious reason and also shouldn't be used for testing - there are better functions for this

thx for your thoughts on this

Last edited by thinking (2011-08-31 03:26 PM)

Plus, there's stack used in libc's pre-main() code, and main()'s argc and argv (and envp) will be on the stack... Like i3839 said, "/proc/self/*maps" is a much more accurate method of figuring out stack usage on Linux...

Pages: 1

|

http://developerweb.net/viewtopic.php?id=7262

|

CC-MAIN-2019-13

|

refinedweb

| 1,416

| 54.8

|

Something like fifty years ago, an argument raged among computer engineers over the order in which numbers should be stored in computer memory.

During the arguments, some (mostly among the least-significant-first camp, IIRC) pointed out the Lilliputian argument between the "Little-Endians" and "Big-Endians" in Gulliver's Travels. The least-significant-first camp claimed the position of Little-Endian-ness, which left the most-significant-first camp associated with High Church once the allegory was commonly accepted.

In Gulliver's Travels, the arguments between Lilliput and Blefuscu, including the endianness argument, are depicted by Swift as mere matters of fashion.

Most of the least-significant-first camp took the same approach: In memory, endianness doesn't matter.

This was a bit of duplicitous implicit poisoning of the well, similar to the habit Intel salescrew had a decade or two later of claiming that Turing complete CPUs were all equivalent, therefore we should all buy their self-proclaimed most popular CPU -- false analogy and a lot of logical steps being skipped among other things.

To summarize the question of byte order, we need to take a general look at data in computer memory. Computer memory is organized as an array of sequentially addressable elements, which implies an ordering to the contents of memory:

Let's put the number 123456 (one hundred twenty three thousand four hundred fifty-six) encoded as text after the description:

Note that text is naturally recorded most significant digit first in English.

(Thousands group separators just get in the way in computers, so I just left the comma out.)

If we wrote 123456 textually least significant digit first, it would look like this:

You may be wondering why someone would write numbers backwards, but there are actually language/numeric contexts in which least significant digit first is the common order. (They may be useful to refer to later.) Even in English, we have contexts like dates where the order is not most significant first:

- September 17, 1787 (mixed order) and

- 17 September 1787 (least significant first)

So we know that it is not completely unthinkable to do such a thing.

Now, text is actually encoded in computer memory as strings of numeric codes. Let's look at the data in the second example, reading it as hexadecimal numbers that represent the characters of the text instead of interpreting it as text:

That's interesting, isn't it?

No?

Okay, let's leave everything but the number interpreted as text:

Now, we haven't actually been changing what is in memory in Example 2. We're just changing how we look at it. We are trying to get a sense of what is actually stored in memory. (If you have a decent OS, you have command line tools like hexdump that allow you to look at files this way. You should try it some time.)

So, now let's try changing the form of the number. Instead of putting it in as text, let's put it in as a number -- an integer. (It's convenient that the address where it will go is 16, for something we call alignment, but we won't really talk about that just yet.)

First, we need to rewrite 123456 (one hundred twenty-three thousand four hundred fifty-six) as a hexadecimal number:

123456 ÷ 164 = 1 rem 57920So,

57920 ÷ 163 = 14 (E16) rem 576

576 ÷ 162 = 2 rem 64

64 ÷ 161 = 4 rem 0

123456 == 1E24016Two hexadecimal digits take one byte in memory on a computer with an 8 bit byte.

(Numbers up to 4294967295 (FFFFFFFF16) can be stored in four bytes on computers with an 8 bit byte.)

Let's look at 123456 (1E24016) stored at location 16, most significant byte first:

Now let's look at the same number, stored least significant byte first:

For a CPU that is MSB first, it will always store and read MSB first (as in example 3), so there's no problem.

And an LSB first CPU will always store and read LSB first, so, again, no problem.

The CPU is built to do it one way or the other, and it will always do it the way it's built, so there's no problem here.

That's the essence of the argument.

It's no longer true, and it really was never true. All bus masters have to agree on how they store numbers in a particular chunk of data or the numbers get turned upside down. (Or in the case of mixed mode integers, inside out and upside down, etc.)

Back then, however, CPUs did not usually have the ability to switch byte order without a bit of work. And alternate bus masters were not as common as now, and usually tended to be built specifically for the CPU.

These days, with intelligent I/O devices, alternate bus masters are rather common. (Graphics co-processors, network interfaces, disk drive interfaces, etc.) If one is going to be a bad boy and do byte order backwards from the rest, unless you isolate the bad boy somehow, things tend to fall apart.

But even the ability to switch byte order does not materially change the arguments.

On a CPU that can switch byte order natively, byte order becomes just another property of the integer stored in memory, which the programmer must keep track of, along with the address, size, presence of sign, etc. As long as the software and hardware respect the properties of the integer in memory, there is no problem.

Well, no problem in isolation.

But there is one virtual bus master that tends, in most of the world, to be most significant first when looking at numbers -- the human who might debug the program by looking at the raw contents of memory without access to the detail of the compiled program.

No number exists in isolation.

There it is, the fatal assumption of the argument:

... in isolation ...Nothing in this world exists in isolation.

Why am I going around in circles on this subject?

In modern hardware, we have multiple CPUs and other devices on the computer bus.

Even in the past, the programmer often had to look at what was in memory in order to tell what the program was doing. He was, in effect, another CPU on the bus, as I said above.

Before we take a look at the reasons not to use least significant first byte order, let's look at the primary argument in favor: It theoretically speeds up some hardware processes and made the 8080 and 6502 (among other CPUs) cheaper to produce.

To figure out why, when you perform math on numbers, you start at the least significant end. Let's do a subtraction of two moderately large numbers:

123456You started with the column on the right,

- 98765

-------

24691

6 - 5 = 1right?

CPUs have to point at what they work on, and the idea is that, if they are pointing at the number already, it's handy to be pointing at the first byte to do the math on.

It sounds reasonable, now that you think of it, right?

There are some other issues, like aligning the number before you start, which also appear to have some advantage when the small end is what you point at.

Sounds like maybe the Little-Endian engineers know what they are onto?.

Oh, dear. Maybe the Big-Endians should just shut up.

Well, let's put those arguments aside for a moment and talk about what the human who is trying to debug a program is going to see when he or she looks at a number stored least significant byte first. I'm pretty sure I can show you some problems with the Little-Endian attitude here.

Simple tools are the ones that are usually available. We'll make use of hexdump. If you are working on a Microsoft Windows workstation, you can install Cygwin to get Unix tools, and Cygwin can give you access to hexdump and the gnu C compiler, gcc, and gforth (and lots of other good stuff like bc).

We'll also make use of a little programming in C:

/* Program to demonstrate the effect of LSB1st vs. MSB1st integers

// by Joel Matthew Rees, Amagasaki, Japan

// Permission granted to use for personal purposes.

// See

// Can be downloaded here:

//

*/

#include <stdio.h>

#include <stdlib.h>

/* #define NO__DEPENDENCY_ON_LIMITS_H */

#if !defined NO__DEPENDENCY_ON_LIMITS_H

#include <limits.h>

# define byteWidth ( (size_t) CHAR_BIT )

# define byteMask ( (unsigned long) (unsigned char) ( (unsigned long) -1 ) )

# define ulBytes ( sizeof (unsigned long) ) /* a run-time constant */

#else

unsigned byteWidth = 8; /* Not depending on limits.h . */

unsigned long byteMask = 0xFF;

unsigned ulBytes = 4; /* Sane throw-away initial values. */

void setULbytes( void )

{ int i = 0;

unsigned char chroller = 1;

unsigned char chMask = 1;

unsigned long ulroller = 1;

while ( chroller != 0 )

{ chroller <<= 1;

chMask = ( chMask << 1 ) | 1;

++i;

}

byteMask = chMask;

byteWidth = i;

i = 0;

while ( ulroller != 0 )

{ ulroller <<= 1;

++i;

}

ulBytes = i / byteWidth;

}

#endif

int putLSB( unsigned long ivalue, int early )

{ int i = 0;

do

{ putchar( ivalue & byteMask );

++i;

ivalue >>= 8;

} while ( ( i < ulBytes ) && !( early && ( ivalue == 0 ) ) );

return i;

}

int putMSB( unsigned long ivalue, int early )

{ int i = 0;

do

{ putchar( ( ivalue >> ( ( ulBytes - 1 ) * byteWidth ) ) & byteMask );

++i;

ivalue <<= byteWidth;

} while ( ( i < ulBytes ) && !( early && ( ivalue == 0 ) ) );

return i;

}

void fillch( int count, char ch )

{ while ( count-- > 0 )

{ putchar( ch );

}

}

int printInteger( unsigned long ivalue, unsigned base )

{ char buffer[ 65 ];

char * cpt = buffer + 65;

* --cpt = '\0';

if ( base > 36 )

{ base = 10;

}

do

{ int ch = ivalue % base;

ivalue /= base;

ch += '0';

if ( ch > '9' )

{ ch += 'A' - '9' - 1;

}

* --cpt = ch;

} while ( ivalue > 0 );

fputs( cpt, stdout );

return 64 - ( cpt - buffer );

}

int main( int argc, char *argv[] )

{

unsigned long my_integer = 123456;

int index = 1;

int length;

#if defined NO__DEPENDENCY_ON_LIMITS_H

setULbytes();

#endif

if ( argc > 1 )

{ char * endpt = argv[ 1 ];

my_integer = strtoul( argv[ 1 ], &endpt, 0 );

if ( endpt > argv[ 1 ] )

{ ++index;

}

else

{ my_integer = 123456;

}

}

printf( "Data in memory: " );

length = printInteger( my_integer, 10 );

fillch( 32 - length, '\0' );

length = printInteger( my_integer, 16 );

fillch( 32 - length, '\0' );

printf( "LSB1st early: " );

length = putLSB( my_integer, 1 );

fillch( 16 - length, '-' );

printf( "LSB1st full: " );

length = putLSB( my_integer, 0 );

fillch( 16 - length, '-' );

printf( "MSB1st early: " );

length = putMSB( my_integer, 1 );

fillch( 16 - length, '-' );

printf( "MSB1st full: " );

length = putMSB( my_integer, 0 );

fillch( 16 - length, '-' );

putchar( '\n' );

return EXIT_SUCCESS;

}

[JMR201704281355:

This can be downloaded at previous version at

will eventually be taken off line.will eventually be taken off line.

]

Compile it with the usual

cc -Wall -o lsbmsb lsbmsb.cand run it with something like

- ./lsbmsb | hexdump -C

- ./lsbmsb 1234567890 | hexdump -C

- ./lsbmsb 0x12345 | hexdump -C

- ./lsbmsb 0x12345 | hexdump # look at it two-byte.

- ./lsbmsb $(( 123456 * 256 )) | hexdump -C

- # etc.

Hmm.

me@mycomputer:~/work/mathgames/eco101$ ./lsbmsb | hexdump -C

00000000 44 61 74 61 20 69 6e 20 6d 65 6d 6f 72 79 3a 20 |Data in memory: |

00000010 31 32 33 34 35 36 00 00 00 00 00 00 00 00 00 00 |123456..........|

00000020 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

00000030 31 45 32 34 30 00 00 00 00 00 00 00 00 00 00 00 |1E240...........|

00000040 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

00000050 4c 53 42 31 73 74 20 65 61 72 6c 79 3a 20 20 20 |LSB1st early: |

00000060 40 e2 01 2d 40 e2 01 00 00 01 e2 40 00 01 e2 40 2d 2d 2d 2d 2d 2d 2d 2d |.......@--------|

000000d0 0a |.|

000000d1

me@mycomputer:~/work/mathgames/eco101$ ./lsbmsb | hexdump

0000000 6144 6174 6920 206e 656d 6f6d 7972 203a

0000010 3231 3433 3635 0000 0000 0000 0000 0000

0000020 0000 0000 0000 0000 0000 0000 0000 0000

0000030 4531 3432 0030 0000 0000 0000 0000 0000

0000040 0000 0000 0000 0000 0000 0000 0000 0000

0000050 534c 3142 7473 6520 7261 796c 203a 2020

0000060 e240 2d01 2d2d 2d2d 2d2d 2d2d 2d2d 2d2d

0000070 534c 3142 7473 6620 6c75 3a6c 2020 2020

0000080 e240 0001 0000 0000 2d2d 2d2d 2d2d 2d2d

0000090 534d 3142 7473 6520 7261 796c 203a 2020

00000a0 0000 0000 0100 40e2 2d2d 2d2d 2d2d 2d2d

00000b0 534d 3142 7473 6620 6c75 3a6c 2020 2020

00000c0 0000 0000 0100 40e2 2d2d 2d2d 2d2d 2d2d

00000d0 000a

00000d1

me@mycomputer:~/work/mathgames/eco101$ ./lsbmsb 0x1E24000 | hexdump -C

00000000 44 61 74 61 20 69 6e 20 6d 65 6d 6f 72 79 3a 20 |Data in memory: |

00000010 33 31 36 30 34 37 33 36 00 00 00 00 00 00 00 00 |31604736........|

00000020 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

00000030 31 45 32 34 30 30 30 00 00 00 00 00 00 00 00 00 |1E24000.........|

00000040 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

00000050 4c 53 42 31 73 74 20 65 61 72 6c 79 3a 20 20 20 |LSB1st early: |

00000060 00 40 e2 01 00 40 e2 01 01 e2 40 2d 01 e2 40 00 2d 2d 2d 2d 2d 2d 2d 2d |......@.--------|

000000d0 0a |.|

000000d1

me@mycomputer:~/work/mathgames/eco101$ ./lsbmsb 0x1E24000 | hexdump

0000000 6144 6174 6920 206e 656d 6f6d 7972 203a

0000010 3133 3036 3734 3633 0000 0000 0000 0000

0000020 0000 0000 0000 0000 0000 0000 0000 0000

0000030 4531 3432 3030 0030 0000 0000 0000 0000

0000040 0000 0000 0000 0000 0000 0000 0000 0000

0000050 534c 3142 7473 6520 7261 796c 203a 2020

0000060 4000 01e2 2d2d 2d2d 2d2d 2d2d 2d2d 2d2d

0000070 534c 3142 7473 6620 6c75 3a6c 2020 2020

0000080 4000 01e2 0000 0000 2d2d 2d2d 2d2d 2d2d

0000090 534d 3142 7473 6520 7261 796c 203a 2020

00000a0 0000 0000 e201 2d40 2d2d 2d2d 2d2d 2d2d

00000b0 534d 3142 7473 6620 6c75 3a6c 2020 2020

00000c0 0000 0000 e201 0040 2d2d 2d2d 2d2d 2d2d

00000d0 000a

00000d1

Now you may be saying you'd rather not be looking at any of that, but if you really had to, if you had no choice but to look at one or the other, which would you rather look at? LSB1st or MSB1st? Remember, the numbers you are looking for will usually be mixed with text, and the text will likely help you find what you are looking for. If the text gets byte-swapped on you, it's going to be just that much harder.

The salesman says he has tools to let you look at the data, so you don't have to worry. That's all well and good, but it makes you dependent on the vendor, even when the vendor has time and budget to help you.

When the vendor doesn't have time or budget, wouldn't rather be able to use simple tools, at any rate? -- as a start before you set to making your own tools?

Somebody usually pipes up with, "Well, if you guys would all join us Little-Endians, if everybody did it all the same, there'd be no problems!"

So. From now on, everyone does Little-Endian. Blogs? News aggregators? Textbooks? Novels? Are we going to go back and reprint all the classics with Little-Endian numbers?

- 71 September 7871?

Somebody pipes up about now saying everything I'm talking about is human stuff, not technical at all.

The Unicode Consortium determined that they did not want to be caught up in the argument. So they decided that Unicode characters could be encoded either direction. They even figured out how to put a flag called the Byte Order Mark at the beginning of a stream of Unicode text, to warn the consumer of the stream what order to expect the characters in.

Characters, you see, are not integers after all, contrary to the opinions of many a respected computer scientist. Little-Endian byte order enforces this factoid.

Well, the folks who defined the Internet decided they did not want to be reading data streams and crossing their eyes to read the IP addresses and other numeric data buried in the stream. So network byte order is the one that is easy to read, most significant first. If one hundred twenty-three thousand four hundred fifty-six is in the data stream, it shows up as 123456, not 654321.

In setting up the demonstrations of byte order differences, I went to some pain to demonstrate one big difference between the byte orders. If you are looking carefully at the dashes, you may see how least significant first allows you to optimize math. If you can track the presence of carries, you can stop adding small numbers to big ones as soon as the carries disappear.

Looks interesting, right?

Tracking the carries takes more processor time and resources than just simply finish the addition out. This is one of those false early optimizations that has historically killed a lot of software projects.

Worse, the programmer can look at one of these and think a particular case will never generate carries. This is almost always self-deception. The assumptions required to keep the carries from happening are almost always not valid in the end-user's context just often enough to cause hidden bugs of the integer overflow variety.

Isn't that strongly enough stated?

When we humans look at numbers, we perceive them as text. That allows us to do many things without consciously thinking of them, like move to the end or align them. CPUs have to do the same things with numberical text, as we can intuit by looking back at example 2.

When CPUs work with numbers, they have to figure out all sorts of things about the number which we subconsciously read from the text --

Is there a sign?If there is no text, they have no clue ... unless the programmer has already told them.

Is there a decimal point?

How big is the number?

Here is perhaps the strongest argument against least significant first: It induces bugs into software.

Some people think it's a good thing to induce bugs into software. They think it guarantees their after-market revenue stream.

I think there will always be enough bugs without practicing dodgey false optimizations, but what do I know? I've wasted two days I didn't have tilting at this, erm, rainbow. (Or chasing this windmill, maybe?)

One of these days I'll get someone to pay me to design a language that combines the best of Forth and C. Then I'll be able to leap wide instruction sets with a single #ifdef, run faster than a speeding infinite loop with a #define, and stop all integer size bugs with a bare cast. And the first processor it will target will be a 32/64 bit version of the 6809 which will not be least significant bit first.

|

http://defining-computers.blogspot.com/2017/04/

|

CC-MAIN-2018-26

|

refinedweb

| 3,142

| 66.47

|

TypeError: "x" is not a function

The JavaScript exception "is not a function" occurs when there was an attempt to call a value from a function, but the value is not actually a function.

TypeError: Object doesn't support property or method {x} (Edge) TypeError: "x" is not a function

Error type

What went wrong?

It attempted to call a value from a function, but the value is not actually a function. Some code expects you to provide a function, but that didn't happen.

Maybe there is a typo in the function name? Maybe the object you are calling the method

on does not have this function? For example, JavaScript

Objects have no

map function, but the JavaScript

Array object does.

There are many built-in functions in need of a (callback) function. You will have to provide a function in order to have these methods working properly:

- When working with

Arrayor

TypedArrayobjects:

- When working with

Mapand

Setobjects:

Examples

A typo in the function name

In this case, which happens way too often, there is a typo in the method name:

let x = document.getElementByID('foo'); // TypeError: document.getElementByID is not a function

The correct function name is

getElementById:

let x = document.getElementById('foo');

Function called on the wrong object

For certain methods, you have to provide a (callback) function and it will work on

specific objects only. In this example,

Array.prototype.map() is used,

which will work with

Array objects only.

let obj = {a: 13, b: 37, c: 42}; obj.map(function(num) { return num * 2; }); // TypeError: obj.map is not a function

Use an array instead:

let numbers = [1, 4, 9]; numbers.map(function(num) { return num * 2; }); // Array [2, 8, 18]

Function shares a name with a pre-existing property

Sometimes when making a class, you may have a property and a function with the same name. Upon calling the function, the compiler thinks that the function ceases to exist.

var Dog = function () { this.age = 11; this.color = "black"; this.name = "Ralph"; return this; } Dog.prototype.name = function(name) { this.name = name; return this; } var myNewDog = new Dog(); myNewDog.name("Cassidy"); //Uncaught TypeError: myNewDog.name is not a function

Use a different property name instead:

var Dog = function () { this.age = 11; this.color = "black"; this.dogName = "Ralph"; //Using this.dogName instead of .name return this; } Dog.prototype.name = function(name) { this.dogName = name; return this; } var myNewDog = new Dog(); myNewDog.name("Cassidy"); //Dog { age: 11, color: 'black', dogName: 'Cassidy' }

Using brackets for multiplication

In math, you can write 2 × (3 + 5) as 2*(3 + 5) or just 2(3 + 5).

Using the latter will throw an error:

const sixteen = 2(3 + 5); alert('2 x (3 + 5) is ' + String(sixteen)); //Uncaught TypeError: 2 is not a function

You can correct the code by adding a

* operator:

const sixteen = 2 * (3 + 5); alert('2 x (3 + 5) is ' + String(sixteen)); //2 x (3 + 5) is 16

Import the exported module correctly

Ensure you are importing the module correctly.

An example helpers library (

helpers.js)

let helpers = function () { }; helpers.groupBy = function (objectArray, property) { return objectArray.reduce(function (acc, obj) { var key = obj[property]; if (!acc[key]) { acc[key] = []; } acc[key].push(obj); return acc; }, {}); } export default helpers;

The correct import usage (

App.js):

import helpers from './helpers'

|

https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Errors/Not_a_function

|

CC-MAIN-2021-17

|

refinedweb

| 550

| 59.8

|

otherstuf 0.902 other'

Given recent versions (e.g. 0.9.10) of stuf, one could simply use from stuf import chainstuf. This portion of the otherstuf sidecar is now superflous.

For counterstuf:

from otherstuf import counterstuf c = counterstuf() c.update("this and this is this but that isn't this".split()) c.total = sum(c.values()) print "everything:", c.total print "'this' mentioned", c.this, "times" print "'bozo' mentioned", c.bozo, "times" print c

Notes

- Commenced automated multi-version testing with pytest and tox.

- Now successfully packaged and tested for Python 2.6, 2.7, 3.2, 3.3 and PyPy 1.9 (aka Python 2.7.2). Previous exceptions for Python 2.6 have been eliminated.

- Now packaged as a package rather than modules. This changes the import from from chainstuf import chainstuf and from counterstuf import counterstuf to from otherstuf import chainstuf and from otherstuf import counterstuf, respectively.

-.)

- Downloads (All Versions):

- 42 downloads in the last day

- 445 downloads in the last week

- 1423 downloads in the last month

- Author: Jonathan Eunice

- Keywords: Counter ChainMap stuf attribute mapping nested

- Categories

- Development Status :: 4 - Beta

- Intended Audience :: Developers

- License :: OSI Approved :: BSD License

- Operating System :: OS Independent

- Programming Language :: Python

-: otherstuf-0.902.xml

|

https://pypi.python.org/pypi/otherstuf/0.902

|

CC-MAIN-2014-15

|

refinedweb

| 206

| 52.26

|

Example code of jQuery animation

Example code of jQuery animation

Example code of jQuery animation

The jQuery is very powerful framework. It provides a many animation

effects that can

Animating Images in Java Application

Animating Images in Java Application

This section shows you how to create an animation with

multiple images. You can see how animation has been implemented in the following

J

Java Sleep Thread

Java Thread sleep() is a static method.

It sleeps the thread for the given time in milliseconds.

It is used to delay the thread.

It is used in Applet or GUI programming for animation

Java Sleep Thread Example

public class

Animate Div in Jquery

within one animation.

$( "#btn" ).click(function() {

$( "

Java Shapes Bouncing App

Java Shapes Bouncing App hi guys,

im trying to implement the following into my java app code:

Here's my code: first ill post the main class, then the animation panel class, then moving shape and moving rectangle class

Sliding window protocol

Sliding window protocol Hello Everyone.

Will anybody send me the code to make sliding window protocol animation using java swings.

I have to do... only animation to show how packets ar moving from one end to other...

Thank u

Free Java Applets

Free Java Applets

There are many free java applets available on the web that you can use

in your website. These applets includes menu, clock, slide show, image animation