url

stringlengths 58

61

| repository_url

stringclasses 1

value | labels_url

stringlengths 72

75

| comments_url

stringlengths 67

70

| events_url

stringlengths 65

68

| html_url

stringlengths 46

51

| id

int64 599M

2.22B

| node_id

stringlengths 18

32

| number

int64 1

6.77k

| title

stringlengths 1

290

| user

dict | labels

listlengths 0

4

| state

stringclasses 2

values | locked

bool 1

class | assignee

dict | assignees

listlengths 0

4

| milestone

dict | comments

int64 0

70

| created_at

timestamp[ns, tz=UTC] | updated_at

timestamp[ns, tz=UTC] | closed_at

timestamp[ns, tz=UTC] | author_association

stringclasses 3

values | active_lock_reason

float64 | body

stringlengths 0

228k

⌀ | reactions

dict | timeline_url

stringlengths 67

70

| performed_via_github_app

float64 | state_reason

stringclasses 3

values | draft

float64 0

1

⌀ | pull_request

dict | is_pull_request

bool 2

classes |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/datasets/issues/238

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/238/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/238/comments

|

https://api.github.com/repos/huggingface/datasets/issues/238/events

|

https://github.com/huggingface/datasets/issues/238

| 631,260,143

|

MDU6SXNzdWU2MzEyNjAxNDM=

| 238

|

[Metric] Bertscore : Warning : Empty candidate sentence; Setting recall to be 0.

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/43774355?v=4",

"events_url": "https://api.github.com/users/astariul/events{/privacy}",

"followers_url": "https://api.github.com/users/astariul/followers",

"following_url": "https://api.github.com/users/astariul/following{/other_user}",

"gists_url": "https://api.github.com/users/astariul/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/astariul",

"id": 43774355,

"login": "astariul",

"node_id": "MDQ6VXNlcjQzNzc0MzU1",

"organizations_url": "https://api.github.com/users/astariul/orgs",

"received_events_url": "https://api.github.com/users/astariul/received_events",

"repos_url": "https://api.github.com/users/astariul/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/astariul/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/astariul/subscriptions",

"type": "User",

"url": "https://api.github.com/users/astariul"

}

|

[

{

"color": "25b21e",

"default": false,

"description": "A bug in a metric script",

"id": 2067393914,

"name": "metric bug",

"node_id": "MDU6TGFiZWwyMDY3MzkzOTE0",

"url": "https://api.github.com/repos/huggingface/datasets/labels/metric%20bug"

}

] |

closed

| false

| null |

[] | null | 1

| 2020-06-05T02:14:47Z

| 2020-06-29T17:10:19Z

| 2020-06-29T17:10:19Z

|

NONE

| null |

When running BERTScore, I get this warning:

> Warning: Empty candidate sentence; Setting recall to be 0.

Code:

```

import nlp

metric = nlp.load_metric("bertscore")

scores = metric.compute(["swag", "swags"], ["swags", "totally something different"], lang="en", device=0)

```

---

**What am I doing wrong, and how can I hide this warning?**

|

{

"+1": 1,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/238/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/238/timeline

| null |

completed

| null | null | false

|

https://api.github.com/repos/huggingface/datasets/issues/237

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/237/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/237/comments

|

https://api.github.com/repos/huggingface/datasets/issues/237/events

|

https://github.com/huggingface/datasets/issues/237

| 631,199,940

|

MDU6SXNzdWU2MzExOTk5NDA=

| 237

|

Can't download MultiNLI

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/15801338?v=4",

"events_url": "https://api.github.com/users/patpizio/events{/privacy}",

"followers_url": "https://api.github.com/users/patpizio/followers",

"following_url": "https://api.github.com/users/patpizio/following{/other_user}",

"gists_url": "https://api.github.com/users/patpizio/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/patpizio",

"id": 15801338,

"login": "patpizio",

"node_id": "MDQ6VXNlcjE1ODAxMzM4",

"organizations_url": "https://api.github.com/users/patpizio/orgs",

"received_events_url": "https://api.github.com/users/patpizio/received_events",

"repos_url": "https://api.github.com/users/patpizio/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/patpizio/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/patpizio/subscriptions",

"type": "User",

"url": "https://api.github.com/users/patpizio"

}

|

[] |

closed

| false

| null |

[] | null | 3

| 2020-06-04T23:05:21Z

| 2020-06-06T10:51:34Z

| 2020-06-06T10:51:34Z

|

CONTRIBUTOR

| null |

When I try to download MultiNLI with

```python

dataset = load_dataset('multi_nli')

```

I get this long error:

```

---------------------------------------------------------------------------

OSError Traceback (most recent call last)

<ipython-input-13-3b11f6be4cb9> in <module>

1 # Load a dataset and print the first examples in the training set

2 # nli_dataset = nlp.load_dataset('multi_nli')

----> 3 dataset = load_dataset('multi_nli')

4 # nli_dataset = nlp.load_dataset('multi_nli', split='validation_matched[:10%]')

5 # print(nli_dataset['train'][0])

~\Miniconda3\envs\nlp\lib\site-packages\nlp\load.py in load_dataset(path, name, version, data_dir, data_files, split, cache_dir, download_config, download_mode, ignore_verifications, save_infos, **config_kwargs)

514

515 # Download and prepare data

--> 516 builder_instance.download_and_prepare(

517 download_config=download_config,

518 download_mode=download_mode,

~\Miniconda3\envs\nlp\lib\site-packages\nlp\builder.py in download_and_prepare(self, download_config, download_mode, ignore_verifications, save_infos, try_from_hf_gcs, dl_manager, **download_and_prepare_kwargs)

417 with utils.temporary_assignment(self, "_cache_dir", tmp_data_dir):

418 verify_infos = not save_infos and not ignore_verifications

--> 419 self._download_and_prepare(

420 dl_manager=dl_manager, verify_infos=verify_infos, **download_and_prepare_kwargs

421 )

~\Miniconda3\envs\nlp\lib\site-packages\nlp\builder.py in _download_and_prepare(self, dl_manager, verify_infos, **prepare_split_kwargs)

455 split_dict = SplitDict(dataset_name=self.name)

456 split_generators_kwargs = self._make_split_generators_kwargs(prepare_split_kwargs)

--> 457 split_generators = self._split_generators(dl_manager, **split_generators_kwargs)

458 # Checksums verification

459 if verify_infos:

~\Miniconda3\envs\nlp\lib\site-packages\nlp\datasets\multi_nli\60774175381b9f3f1e6ae1028229e3cdb270d50379f45b9f2c01008f50f09e6b\multi_nli.py in _split_generators(self, dl_manager)

99 def _split_generators(self, dl_manager):

100

--> 101 downloaded_dir = dl_manager.download_and_extract(

102 "http://storage.googleapis.com/tfds-data/downloads/multi_nli/multinli_1.0.zip"

103 )

~\Miniconda3\envs\nlp\lib\site-packages\nlp\utils\download_manager.py in download_and_extract(self, url_or_urls)

214 extracted_path(s): `str`, extracted paths of given URL(s).

215 """

--> 216 return self.extract(self.download(url_or_urls))

217

218 def get_recorded_sizes_checksums(self):

~\Miniconda3\envs\nlp\lib\site-packages\nlp\utils\download_manager.py in extract(self, path_or_paths)

194 path_or_paths.

195 """

--> 196 return map_nested(

197 lambda path: cached_path(path, extract_compressed_file=True, force_extract=False), path_or_paths,

198 )

~\Miniconda3\envs\nlp\lib\site-packages\nlp\utils\py_utils.py in map_nested(function, data_struct, dict_only, map_tuple)

168 return tuple(mapped)

169 # Singleton

--> 170 return function(data_struct)

171

172

~\Miniconda3\envs\nlp\lib\site-packages\nlp\utils\download_manager.py in <lambda>(path)

195 """

196 return map_nested(

--> 197 lambda path: cached_path(path, extract_compressed_file=True, force_extract=False), path_or_paths,

198 )

199

~\Miniconda3\envs\nlp\lib\site-packages\nlp\utils\file_utils.py in cached_path(url_or_filename, download_config, **download_kwargs)

231 if is_zipfile(output_path):

232 with ZipFile(output_path, "r") as zip_file:

--> 233 zip_file.extractall(output_path_extracted)

234 zip_file.close()

235 elif tarfile.is_tarfile(output_path):

~\Miniconda3\envs\nlp\lib\zipfile.py in extractall(self, path, members, pwd)

1644

1645 for zipinfo in members:

-> 1646 self._extract_member(zipinfo, path, pwd)

1647

1648 @classmethod

~\Miniconda3\envs\nlp\lib\zipfile.py in _extract_member(self, member, targetpath, pwd)

1698

1699 with self.open(member, pwd=pwd) as source, \

-> 1700 open(targetpath, "wb") as target:

1701 shutil.copyfileobj(source, target)

1702

OSError: [Errno 22] Invalid argument: 'C:\\Users\\Python\\.cache\\huggingface\\datasets\\3e12413b8ec69f22dfcfd54a79d1ba9e7aac2e18e334bbb6b81cca64fd16bffc\\multinli_1.0\\Icon\r'

```
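The failing member in the traceback above is named `Icon\r`, and a carriage return is not a legal character in a Windows file name. As a rough sketch of one possible workaround (not the library's actual fix), the archive could be extracted member by member while skipping names that Windows cannot create. The helper names `is_extractable` and `extract_skipping_invalid` are hypothetical and use only the standard `zipfile` module.

```python
import zipfile

# Characters Windows rejects in file names, plus ASCII control characters
# (which include the carriage return seen in 'Icon\r').
_INVALID_CHARS = set('<>:"|?*') | {chr(code) for code in range(32)}


def is_extractable(member_name):
    """Return True if every path component could exist as a Windows file name."""
    parts = [part for part in member_name.replace("\\", "/").split("/") if part]
    return all(not (set(part) & _INVALID_CHARS) for part in parts)


def extract_skipping_invalid(zip_path, target_dir):
    """Extract all members except those with invalid names.

    Returns the list of member names that were skipped.
    """
    skipped = []
    with zipfile.ZipFile(zip_path) as archive:
        for info in archive.infolist():
            if is_extractable(info.filename):
                archive.extract(info, target_dir)
            else:
                skipped.append(info.filename)
    return skipped
```

Skipping is a judgment call: it assumes that members such as `Icon\r` are desktop metadata rather than data files, which seems plausible here but should be checked against the archive contents.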

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/237/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/237/timeline

| null |

completed

| null | null | false

|

https://api.github.com/repos/huggingface/datasets/issues/236

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/236/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/236/comments

|

https://api.github.com/repos/huggingface/datasets/issues/236/events

|

https://github.com/huggingface/datasets/pull/236

| 631,099,875

|

MDExOlB1bGxSZXF1ZXN0NDI4MDUwNzI4

| 236

|

CompGuessWhat?! dataset

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/1479733?v=4",

"events_url": "https://api.github.com/users/aleSuglia/events{/privacy}",

"followers_url": "https://api.github.com/users/aleSuglia/followers",

"following_url": "https://api.github.com/users/aleSuglia/following{/other_user}",

"gists_url": "https://api.github.com/users/aleSuglia/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/aleSuglia",

"id": 1479733,

"login": "aleSuglia",

"node_id": "MDQ6VXNlcjE0Nzk3MzM=",

"organizations_url": "https://api.github.com/users/aleSuglia/orgs",

"received_events_url": "https://api.github.com/users/aleSuglia/received_events",

"repos_url": "https://api.github.com/users/aleSuglia/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/aleSuglia/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/aleSuglia/subscriptions",

"type": "User",

"url": "https://api.github.com/users/aleSuglia"

}

|

[] |

closed

| false

| null |

[] | null | 9

| 2020-06-04T19:45:50Z

| 2020-06-11T09:43:42Z

| 2020-06-11T07:45:21Z

|

CONTRIBUTOR

| null |

Hello,

Thanks for the amazing library that you put together. I'm Alessandro Suglia, the first author of CompGuessWhat?!, a recently released dataset for grounded language learning accepted to ACL 2020 ([https://compguesswhat.github.io](https://compguesswhat.github.io)).

This pull-request adds the CompGuessWhat?! splits that have been extracted from the original dataset. This is only part of our evaluation framework because there is also an additional split of the dataset that has a completely different set of games. I didn't integrate it yet because I didn't know what would be the best practice in this case. Let me clarify the scenario.

In our paper, we have a main dataset (let's call it `compguesswhat-gameplay`) and a zero-shot dataset (let's call it `compguesswhat-zs-gameplay`). In the current code of the pull-request, I have only integrated `compguesswhat-gameplay`. I was thinking that it would be nice to have the `compguesswhat-zs-gameplay` in the same dataset class by simply specifying some particular option to the `nlp.load_dataset()` factory. For instance:

```python

cgw = nlp.load_dataset("compguesswhat")

cgw_zs = nlp.load_dataset("compguesswhat", zero_shot=True)

```

The other option would be to have a separate dataset class. Any preferences?

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/236/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/236/timeline

| null | null | 0

|

{

"diff_url": "https://github.com/huggingface/datasets/pull/236.diff",

"html_url": "https://github.com/huggingface/datasets/pull/236",

"merged_at": "2020-06-11T07:45:21Z",

"patch_url": "https://github.com/huggingface/datasets/pull/236.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/236"

}

| true

|

https://api.github.com/repos/huggingface/datasets/issues/235

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/235/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/235/comments

|

https://api.github.com/repos/huggingface/datasets/issues/235/events

|

https://github.com/huggingface/datasets/pull/235

| 630,952,297

|

MDExOlB1bGxSZXF1ZXN0NDI3OTM1MjQ0

| 235

|

Add experimental datasets

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/10469459?v=4",

"events_url": "https://api.github.com/users/yjernite/events{/privacy}",

"followers_url": "https://api.github.com/users/yjernite/followers",

"following_url": "https://api.github.com/users/yjernite/following{/other_user}",

"gists_url": "https://api.github.com/users/yjernite/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/yjernite",

"id": 10469459,

"login": "yjernite",

"node_id": "MDQ6VXNlcjEwNDY5NDU5",

"organizations_url": "https://api.github.com/users/yjernite/orgs",

"received_events_url": "https://api.github.com/users/yjernite/received_events",

"repos_url": "https://api.github.com/users/yjernite/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/yjernite/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/yjernite/subscriptions",

"type": "User",

"url": "https://api.github.com/users/yjernite"

}

|

[] |

closed

| false

| null |

[] | null | 6

| 2020-06-04T15:54:56Z

| 2020-06-12T15:38:55Z

| 2020-06-12T15:38:55Z

|

MEMBER

| null |

## Adding an *experimental datasets* folder

After using the 🤗nlp library for some time, I find that while it makes it super easy to create new memory-mapped datasets with lots of cool utilities, a lot of what I want to do doesn't work well with the current `MockDownloader` based testing paradigm, making it hard to share my work with the community.

My suggestion would be to add a **datasets\_experimental** folder so we can start making these new datasets public without having to completely re-think testing for every single one. We would allow contributors to submit dataset PRs in this folder, but require an explanation for why the current testing suite doesn't work for them. We can then aggregate the feedback and periodically see what's missing from the current tests.

I have added a **datasets\_experimental** folder to the repository and S3 bucket with two initial datasets: ELI5 (explainlikeimfive) and a Wikipedia Snippets dataset to support indexing (wiki\_snippets)

### ELI5

#### Dataset description

This allows people to download the [ELI5: Long Form Question Answering](https://arxiv.org/abs/1907.09190) dataset, along with two variants based on the r/askscience and r/AskHistorians subreddits. Full Reddit dumps for each month are downloaded from [pushshift](https://files.pushshift.io/reddit/), filtered for submissions and comments from the desired subreddits, then deleted one at a time to save space. The resulting dataset is split into training, validation, and test sets for each of r/explainlikeimfive, r/askscience, and r/AskHistorians, where each item is a question along with all of its high-scoring answers.

#### Issues with the current testing

1. The list of files to be downloaded is not pre-defined, but rather determined by parsing an index web page at run time. This is necessary because the name and compression type of the dump files change from month to month as the pushshift website is maintained. Currently, the dummy folder requires the user to know which files will be downloaded.

2. To save time, the script works on the compressed files using the corresponding Python packages rather than first running `download_and_extract` and then filtering the extracted files.
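To illustrate the second point, here is a minimal sketch of filtering a compressed dump without extracting it first. It assumes the dump is newline-delimited JSON with a `subreddit` field on each record, and it only covers the compression types available in the Python standard library; the function name `iter_subreddit_items` is hypothetical and is not taken from the actual ELI5 script.

```python
import bz2
import gzip
import json
import lzma

# Map a file suffix to a stdlib opener; other formats would need extra packages.
_OPENERS = {".bz2": bz2.open, ".xz": lzma.open, ".gz": gzip.open}


def iter_subreddit_items(path, subreddits):
    """Yield JSON records from a compressed dump that belong to the given subreddits."""
    wanted = {name.lower() for name in subreddits}
    suffix = next((s for s in _OPENERS if str(path).endswith(s)), None)
    if suffix is None:
        raise ValueError(f"unsupported compression type: {path}")
    # Text mode decompresses lazily, so the whole dump never sits on disk or in memory.
    with _OPENERS[suffix](path, mode="rt", encoding="utf-8") as handle:
        for line in handle:
            line = line.strip()
            if not line:
                continue
            record = json.loads(line)
            if str(record.get("subreddit", "")).lower() in wanted:
                yield record
```

Because the opener is chosen from the file suffix at run time, the same loop keeps working when the compression type changes from one month to the next, as long as the format is one of those listed.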

### Wikipedia Snippets

#### Dataset description

This script creates a *snippets* version of a source Wikipedia dataset: each article is split into passages of fixed length which can then be indexed using ElasticSearch or a dense indexer. The script currently handles all **wikipedia** and **wiki40b** source datasets, and allows the user to choose the passage length and how much overlap they want across passages. In addition to the passage text, each snippet also has the article title, list of titles of sections covered by the text, and information to map the passage back to the initial dataset at the paragraph and character level.
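The passage splitting described above can be sketched roughly as follows. This is only an illustration under stated assumptions: passages are measured in whitespace-separated words, offsets are word indices rather than the paragraph and character mapping the real script keeps, and the names `split_into_passages`, `passage_len`, and `overlap` are hypothetical.

```python
def split_into_passages(words, passage_len=100, overlap=0):
    """Split a list of words into fixed-length passages that may overlap.

    Each passage records the word offsets it covers so that it can be
    mapped back to the source article.
    """
    if passage_len <= 0:
        raise ValueError("passage_len must be positive")
    if not 0 <= overlap < passage_len:
        raise ValueError("overlap must satisfy 0 <= overlap < passage_len")
    step = passage_len - overlap
    passages = []
    for start in range(0, len(words), step):
        chunk = words[start:start + passage_len]
        passages.append(
            {
                "start_word": start,
                "end_word": start + len(chunk),
                "text": " ".join(chunk),
            }
        )
        # Stop once a passage reaches the end, to avoid a trailing fragment
        # that is fully contained in the previous passage.
        if start + passage_len >= len(words):
            break
    return passages
```

With `overlap=0` the passages tile the article exactly; a positive overlap repeats the last `overlap` words of each passage at the start of the next one.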

#### Issues with the current testing

1. The DatasetBuilder needs to call `nlp.load_dataset()`. Currently, testing is not recursive: the test doesn't know where to find the dummy data for the source dataset.

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/235/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/235/timeline

| null | null | 0

|

{

"diff_url": "https://github.com/huggingface/datasets/pull/235.diff",

"html_url": "https://github.com/huggingface/datasets/pull/235",

"merged_at": "2020-06-12T15:38:55Z",

"patch_url": "https://github.com/huggingface/datasets/pull/235.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/235"

}

| true

|

https://api.github.com/repos/huggingface/datasets/issues/234

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/234/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/234/comments

|

https://api.github.com/repos/huggingface/datasets/issues/234/events

|

https://github.com/huggingface/datasets/issues/234

| 630,534,427

|

MDU6SXNzdWU2MzA1MzQ0Mjc=

| 234

|

Huggingface NLP, Uploading custom dataset

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/42269506?v=4",

"events_url": "https://api.github.com/users/Nouman97/events{/privacy}",

"followers_url": "https://api.github.com/users/Nouman97/followers",

"following_url": "https://api.github.com/users/Nouman97/following{/other_user}",

"gists_url": "https://api.github.com/users/Nouman97/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/Nouman97",

"id": 42269506,

"login": "Nouman97",

"node_id": "MDQ6VXNlcjQyMjY5NTA2",

"organizations_url": "https://api.github.com/users/Nouman97/orgs",

"received_events_url": "https://api.github.com/users/Nouman97/received_events",

"repos_url": "https://api.github.com/users/Nouman97/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/Nouman97/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Nouman97/subscriptions",

"type": "User",

"url": "https://api.github.com/users/Nouman97"

}

|

[] |

closed

| false

| null |

[] | null | 4

| 2020-06-04T05:59:06Z

| 2020-07-06T09:33:26Z

| 2020-07-06T09:33:26Z

|

NONE

| null |

Hello,

Does anyone know how to load a custom dataset with `nlp.load_dataset`? For example, if I have a dataset in the same format as squad-v1.1, how am I supposed to load it using Hugging Face nlp?

Thank you!

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/234/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/234/timeline

| null |

completed

| null | null | false

|

https://api.github.com/repos/huggingface/datasets/issues/233

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/233/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/233/comments

|

https://api.github.com/repos/huggingface/datasets/issues/233/events

|

https://github.com/huggingface/datasets/issues/233

| 630,432,132

|

MDU6SXNzdWU2MzA0MzIxMzI=

| 233

|

Fail to download c4 english corpus

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/16605764?v=4",

"events_url": "https://api.github.com/users/donggyukimc/events{/privacy}",

"followers_url": "https://api.github.com/users/donggyukimc/followers",

"following_url": "https://api.github.com/users/donggyukimc/following{/other_user}",

"gists_url": "https://api.github.com/users/donggyukimc/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/donggyukimc",

"id": 16605764,

"login": "donggyukimc",

"node_id": "MDQ6VXNlcjE2NjA1NzY0",

"organizations_url": "https://api.github.com/users/donggyukimc/orgs",

"received_events_url": "https://api.github.com/users/donggyukimc/received_events",

"repos_url": "https://api.github.com/users/donggyukimc/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/donggyukimc/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/donggyukimc/subscriptions",

"type": "User",

"url": "https://api.github.com/users/donggyukimc"

}

|

[] |

closed

| false

| null |

[] | null | 5

| 2020-06-04T01:06:38Z

| 2021-01-08T07:17:32Z

| 2020-06-08T09:16:59Z

|

NONE

| null |

I ran the following code to download the C4 English corpus:

```

import nlp

dataset = nlp.load_dataset('c4', 'en', beam_runner='DirectRunner', data_dir='/mypath')

```

and it failed as follows:

```

Downloading and preparing dataset c4/en (download: Unknown size, generated: Unknown size, total: Unknown size) to /home/adam/.cache/huggingface/datasets/c4/en/2.3.0...

Traceback (most recent call last):

File "download_corpus.py", line 38, in <module>

, data_dir='/home/adam/data/corpus/en/c4')

File "/home/adam/anaconda3/envs/adam/lib/python3.7/site-packages/nlp/load.py", line 520, in load_dataset

save_infos=save_infos,

File "/home/adam/anaconda3/envs/adam/lib/python3.7/site-packages/nlp/builder.py", line 420, in download_and_prepare

dl_manager=dl_manager, verify_infos=verify_infos, **download_and_prepare_kwargs

File "/home/adam/anaconda3/envs/adam/lib/python3.7/site-packages/nlp/builder.py", line 816, in _download_and_prepare

dl_manager, verify_infos=False, pipeline=pipeline,

File "/home/adam/anaconda3/envs/adam/lib/python3.7/site-packages/nlp/builder.py", line 457, in _download_and_prepare

split_generators = self._split_generators(dl_manager, **split_generators_kwargs)

File "/home/adam/anaconda3/envs/adam/lib/python3.7/site-packages/nlp/datasets/c4/f545de9f63300d8d02a6795e2eb34e140c47e62a803f572ac5599e170ee66ecc/c4.py", line 175, in _split_generators

dl_manager.download_checksums(_CHECKSUMS_URL)

AttributeError: 'DownloadManager' object has no attribute 'download_checksums'

```

Can I get any advice?

|

{

"+1": 3,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 3,

"url": "https://api.github.com/repos/huggingface/datasets/issues/233/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/233/timeline

| null |

completed

| null | null | false

|

https://api.github.com/repos/huggingface/datasets/issues/232

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/232/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/232/comments

|

https://api.github.com/repos/huggingface/datasets/issues/232/events

|

https://github.com/huggingface/datasets/pull/232

| 630,029,568

|

MDExOlB1bGxSZXF1ZXN0NDI3MjI5NDcy

| 232

|

Nlp cli fix endpoints

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lhoestq",

"id": 42851186,

"login": "lhoestq",

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lhoestq"

}

|

[] |

closed

| false

| null |

[] | null | 1

| 2020-06-03T14:10:39Z

| 2020-06-08T09:02:58Z

| 2020-06-08T09:02:57Z

|

MEMBER

| null |

With this PR users will be able to upload their own datasets and metrics.

As mentioned in #181, I had to use the new endpoints and revert the use of dataclasses (just in case we have changes in the API in the future).

We now distinguish commands for datasets and commands for metrics:

```bash

nlp-cli upload_dataset <path/to/dataset>

nlp-cli upload_metric <path/to/metric>

nlp-cli s3_datasets {rm, ls}

nlp-cli s3_metrics {rm, ls}

```

Does it sound good to you @julien-c @thomwolf ?

|

{

"+1": 1,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/232/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/232/timeline

| null | null | 0

|

{

"diff_url": "https://github.com/huggingface/datasets/pull/232.diff",

"html_url": "https://github.com/huggingface/datasets/pull/232",

"merged_at": "2020-06-08T09:02:57Z",

"patch_url": "https://github.com/huggingface/datasets/pull/232.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/232"

}

| true

|

https://api.github.com/repos/huggingface/datasets/issues/231

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/231/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/231/comments

|

https://api.github.com/repos/huggingface/datasets/issues/231/events

|

https://github.com/huggingface/datasets/pull/231

| 629,988,694

|

MDExOlB1bGxSZXF1ZXN0NDI3MTk3MTcz

| 231

|

Add .download to MockDownloadManager

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lhoestq",

"id": 42851186,

"login": "lhoestq",

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lhoestq"

}

|

[] |

closed

| false

| null |

[] | null | 0

| 2020-06-03T13:20:00Z

| 2020-06-03T14:25:56Z

| 2020-06-03T14:25:55Z

|

MEMBER

| null |

One method from the DownloadManager was missing and some users couldn't run the tests because of that.

@yjernite

|

{

"+1": 1,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/231/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/231/timeline

| null | null | 0

|

{

"diff_url": "https://github.com/huggingface/datasets/pull/231.diff",

"html_url": "https://github.com/huggingface/datasets/pull/231",

"merged_at": "2020-06-03T14:25:54Z",

"patch_url": "https://github.com/huggingface/datasets/pull/231.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/231"

}

| true

|

https://api.github.com/repos/huggingface/datasets/issues/230

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/230/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/230/comments

|

https://api.github.com/repos/huggingface/datasets/issues/230/events

|

https://github.com/huggingface/datasets/pull/230

| 629,983,684

|

MDExOlB1bGxSZXF1ZXN0NDI3MTkzMTQ0

| 230

|

Don't force to install apache beam for wikipedia dataset

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lhoestq",

"id": 42851186,

"login": "lhoestq",

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lhoestq"

}

|

[] |

closed

| false

| null |

[] | null | 0

| 2020-06-03T13:13:07Z

| 2020-06-03T14:34:09Z

| 2020-06-03T14:34:07Z

|

MEMBER

| null |

As pointed out in #227, we shouldn't force users to install Apache Beam if the processed dataset can be downloaded. I moved the imports of some datasets to avoid this problem.

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/230/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/230/timeline

| null | null | 0

|

{

"diff_url": "https://github.com/huggingface/datasets/pull/230.diff",

"html_url": "https://github.com/huggingface/datasets/pull/230",

"merged_at": "2020-06-03T14:34:07Z",

"patch_url": "https://github.com/huggingface/datasets/pull/230.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/230"

}

| true

|

https://api.github.com/repos/huggingface/datasets/issues/229

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/229/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/229/comments

|

https://api.github.com/repos/huggingface/datasets/issues/229/events

|

https://github.com/huggingface/datasets/pull/229

| 629,956,490

|

MDExOlB1bGxSZXF1ZXN0NDI3MTcxMzc5

| 229

|

Rename dataset_infos.json to dataset_info.json

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/11817160?v=4",

"events_url": "https://api.github.com/users/aswin-giridhar/events{/privacy}",

"followers_url": "https://api.github.com/users/aswin-giridhar/followers",

"following_url": "https://api.github.com/users/aswin-giridhar/following{/other_user}",

"gists_url": "https://api.github.com/users/aswin-giridhar/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/aswin-giridhar",

"id": 11817160,

"login": "aswin-giridhar",

"node_id": "MDQ6VXNlcjExODE3MTYw",

"organizations_url": "https://api.github.com/users/aswin-giridhar/orgs",

"received_events_url": "https://api.github.com/users/aswin-giridhar/received_events",

"repos_url": "https://api.github.com/users/aswin-giridhar/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/aswin-giridhar/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/aswin-giridhar/subscriptions",

"type": "User",

"url": "https://api.github.com/users/aswin-giridhar"

}

|

[] |

closed

| false

| null |

[] | null | 1

| 2020-06-03T12:31:44Z

| 2020-06-03T12:52:54Z

| 2020-06-03T12:48:33Z

|

NONE

| null |

The file required for viewing in the live nlp viewer is named dataset_info.json, so this PR renames dataset_infos.json accordingly.

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/229/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/229/timeline

| null | null | 0

|

{

"diff_url": "https://github.com/huggingface/datasets/pull/229.diff",

"html_url": "https://github.com/huggingface/datasets/pull/229",

"merged_at": null,

"patch_url": "https://github.com/huggingface/datasets/pull/229.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/229"

}

| true

|

https://api.github.com/repos/huggingface/datasets/issues/228

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/228/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/228/comments

|

https://api.github.com/repos/huggingface/datasets/issues/228/events

|

https://github.com/huggingface/datasets/issues/228

| 629,952,402

|

MDU6SXNzdWU2Mjk5NTI0MDI=

| 228

|

Not able to access the XNLI dataset

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/11817160?v=4",

"events_url": "https://api.github.com/users/aswin-giridhar/events{/privacy}",

"followers_url": "https://api.github.com/users/aswin-giridhar/followers",

"following_url": "https://api.github.com/users/aswin-giridhar/following{/other_user}",

"gists_url": "https://api.github.com/users/aswin-giridhar/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/aswin-giridhar",

"id": 11817160,

"login": "aswin-giridhar",

"node_id": "MDQ6VXNlcjExODE3MTYw",

"organizations_url": "https://api.github.com/users/aswin-giridhar/orgs",

"received_events_url": "https://api.github.com/users/aswin-giridhar/received_events",

"repos_url": "https://api.github.com/users/aswin-giridhar/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/aswin-giridhar/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/aswin-giridhar/subscriptions",

"type": "User",

"url": "https://api.github.com/users/aswin-giridhar"

}

|

[

{

"color": "94203D",

"default": false,

"description": "",

"id": 2107841032,

"name": "nlp-viewer",

"node_id": "MDU6TGFiZWwyMTA3ODQxMDMy",

"url": "https://api.github.com/repos/huggingface/datasets/labels/nlp-viewer"

}

] |

closed

| false

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/35882?v=4",

"events_url": "https://api.github.com/users/srush/events{/privacy}",

"followers_url": "https://api.github.com/users/srush/followers",

"following_url": "https://api.github.com/users/srush/following{/other_user}",

"gists_url": "https://api.github.com/users/srush/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/srush",

"id": 35882,

"login": "srush",

"node_id": "MDQ6VXNlcjM1ODgy",

"organizations_url": "https://api.github.com/users/srush/orgs",

"received_events_url": "https://api.github.com/users/srush/received_events",

"repos_url": "https://api.github.com/users/srush/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/srush/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/srush/subscriptions",

"type": "User",

"url": "https://api.github.com/users/srush"

}

|

[

{

"avatar_url": "https://avatars.githubusercontent.com/u/35882?v=4",

"events_url": "https://api.github.com/users/srush/events{/privacy}",

"followers_url": "https://api.github.com/users/srush/followers",

"following_url": "https://api.github.com/users/srush/following{/other_user}",

"gists_url": "https://api.github.com/users/srush/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/srush",

"id": 35882,

"login": "srush",

"node_id": "MDQ6VXNlcjM1ODgy",

"organizations_url": "https://api.github.com/users/srush/orgs",

"received_events_url": "https://api.github.com/users/srush/received_events",

"repos_url": "https://api.github.com/users/srush/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/srush/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/srush/subscriptions",

"type": "User",

"url": "https://api.github.com/users/srush"

}

] | null | 4

| 2020-06-03T12:25:14Z

| 2020-07-17T17:44:22Z

| 2020-07-17T17:44:22Z

|

NONE

| null |

When I try to access the XNLI dataset, the plain_text option gets selected automatically and then I get the following error:

```

FileNotFoundError: [Errno 2] No such file or directory: '/home/sasha/.cache/huggingface/datasets/xnli/plain_text/1.0.0/dataset_info.json'

Traceback:

File "/home/sasha/.local/lib/python3.7/site-packages/streamlit/ScriptRunner.py", line 322, in _run_script

exec(code, module.__dict__)

File "/home/sasha/nlp_viewer/run.py", line 86, in <module>

dts, fail = get(str(option.id), str(conf_option.name) if conf_option else None)

File "/home/sasha/.local/lib/python3.7/site-packages/streamlit/caching.py", line 591, in wrapped_func

return get_or_create_cached_value()

File "/home/sasha/.local/lib/python3.7/site-packages/streamlit/caching.py", line 575, in get_or_create_cached_value

return_value = func(*args, **kwargs)

File "/home/sasha/nlp_viewer/run.py", line 72, in get

builder_instance = builder_cls(name=conf)

File "/home/sasha/.local/lib/python3.7/site-packages/nlp/builder.py", line 610, in __init__

super(GeneratorBasedBuilder, self).__init__(*args, **kwargs)

File "/home/sasha/.local/lib/python3.7/site-packages/nlp/builder.py", line 152, in __init__

self.info = DatasetInfo.from_directory(self._cache_dir)

File "/home/sasha/.local/lib/python3.7/site-packages/nlp/info.py", line 157, in from_directory

with open(os.path.join(dataset_info_dir, DATASET_INFO_FILENAME), "r") as f:

```

Is it possible to check whether dataset_info.json is correctly placed?
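A quick way to check what is actually in the cache directory could look like the sketch below. The file name is taken from the traceback above; the helper names `find_info_files` and `has_expected_info_file` are hypothetical, and the cache path has to be supplied by whoever runs it.

```python
import os

# Assumed file name, taken from the traceback above.
DATASET_INFO_FILENAME = "dataset_info.json"


def find_info_files(cache_dir: str) -> list:
    """Return the JSON files in cache_dir whose names start with 'dataset_info'."""
    if not os.path.isdir(cache_dir):
        return []
    return sorted(
        name for name in os.listdir(cache_dir)
        if name.startswith("dataset_info") and name.endswith(".json")
    )


def has_expected_info_file(cache_dir: str) -> bool:
    """True if the exact file the builder tries to open is present."""
    return os.path.isfile(os.path.join(cache_dir, DATASET_INFO_FILENAME))
```

If `has_expected_info_file` returns `False` while `find_info_files` lists a similarly named file, the problem is a naming mismatch rather than a missing download.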

|

{

"+1": 1,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/228/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/228/timeline

| null |

completed

| null | null | false

|

https://api.github.com/repos/huggingface/datasets/issues/227

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/227/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/227/comments

|

https://api.github.com/repos/huggingface/datasets/issues/227/events

|

https://github.com/huggingface/datasets/issues/227

| 629,845,704

|

MDU6SXNzdWU2Mjk4NDU3MDQ=

| 227

|

Should we still have to force to install apache_beam to download wikipedia ?

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/17963619?v=4",

"events_url": "https://api.github.com/users/richarddwang/events{/privacy}",

"followers_url": "https://api.github.com/users/richarddwang/followers",

"following_url": "https://api.github.com/users/richarddwang/following{/other_user}",

"gists_url": "https://api.github.com/users/richarddwang/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/richarddwang",

"id": 17963619,

"login": "richarddwang",

"node_id": "MDQ6VXNlcjE3OTYzNjE5",

"organizations_url": "https://api.github.com/users/richarddwang/orgs",

"received_events_url": "https://api.github.com/users/richarddwang/received_events",

"repos_url": "https://api.github.com/users/richarddwang/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/richarddwang/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/richarddwang/subscriptions",

"type": "User",

"url": "https://api.github.com/users/richarddwang"

}

|

[] |

closed

| false

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lhoestq",

"id": 42851186,

"login": "lhoestq",

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lhoestq"

}

|

[

{

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lhoestq",

"id": 42851186,

"login": "lhoestq",

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lhoestq"

}

] | null | 3

| 2020-06-03T09:33:20Z

| 2020-06-03T15:25:41Z

| 2020-06-03T15:25:41Z

|

CONTRIBUTOR

| null |

Hi, first, thanks to @lhoestq's revolutionary work, I successfully downloaded the processed wikipedia according to the doc. 😍😍😍

But on the first try, it told me to install `apache_beam` and `mwparserfromhell`, which I thought wouldn't be needed according to #204, so it confused me at the time.

Maybe we should not force users to install these? Or should we just add them to `nlp`'s dependencies?

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/227/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/227/timeline

| null |

completed

| null | null | false

|

https://api.github.com/repos/huggingface/datasets/issues/226

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/226/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/226/comments

|

https://api.github.com/repos/huggingface/datasets/issues/226/events

|

https://github.com/huggingface/datasets/pull/226

| 628,344,520

|

MDExOlB1bGxSZXF1ZXN0NDI1OTA0MjEz

| 226

|

add BlendedSkillTalk dataset

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/38249783?v=4",

"events_url": "https://api.github.com/users/mariamabarham/events{/privacy}",

"followers_url": "https://api.github.com/users/mariamabarham/followers",

"following_url": "https://api.github.com/users/mariamabarham/following{/other_user}",

"gists_url": "https://api.github.com/users/mariamabarham/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/mariamabarham",

"id": 38249783,

"login": "mariamabarham",

"node_id": "MDQ6VXNlcjM4MjQ5Nzgz",

"organizations_url": "https://api.github.com/users/mariamabarham/orgs",

"received_events_url": "https://api.github.com/users/mariamabarham/received_events",

"repos_url": "https://api.github.com/users/mariamabarham/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/mariamabarham/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariamabarham/subscriptions",

"type": "User",

"url": "https://api.github.com/users/mariamabarham"

}

|

[] |

closed

| false

| null |

[] | null | 1

| 2020-06-01T10:54:45Z

| 2020-06-03T14:37:23Z

| 2020-06-03T14:37:22Z

|

CONTRIBUTOR

| null |

This PR adds the BlendedSkillTalk dataset, which is used to fine-tune the blenderbot.

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/226/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/226/timeline

| null | null | 0

|

{

"diff_url": "https://github.com/huggingface/datasets/pull/226.diff",

"html_url": "https://github.com/huggingface/datasets/pull/226",

"merged_at": "2020-06-03T14:37:22Z",

"patch_url": "https://github.com/huggingface/datasets/pull/226.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/226"

}

| true

|

https://api.github.com/repos/huggingface/datasets/issues/225

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/225/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/225/comments

|

https://api.github.com/repos/huggingface/datasets/issues/225/events

|

https://github.com/huggingface/datasets/issues/225

| 628,083,366

|

MDU6SXNzdWU2MjgwODMzNjY=

| 225

|

[ROUGE] Different scores with `files2rouge`

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/43774355?v=4",

"events_url": "https://api.github.com/users/astariul/events{/privacy}",

"followers_url": "https://api.github.com/users/astariul/followers",

"following_url": "https://api.github.com/users/astariul/following{/other_user}",

"gists_url": "https://api.github.com/users/astariul/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/astariul",

"id": 43774355,

"login": "astariul",

"node_id": "MDQ6VXNlcjQzNzc0MzU1",

"organizations_url": "https://api.github.com/users/astariul/orgs",

"received_events_url": "https://api.github.com/users/astariul/received_events",

"repos_url": "https://api.github.com/users/astariul/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/astariul/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/astariul/subscriptions",

"type": "User",

"url": "https://api.github.com/users/astariul"

}

|

[

{

"color": "d722e8",

"default": false,

"description": "Discussions on the metrics",

"id": 2067400959,

"name": "Metric discussion",

"node_id": "MDU6TGFiZWwyMDY3NDAwOTU5",

"url": "https://api.github.com/repos/huggingface/datasets/labels/Metric%20discussion"

}

] |

closed

| false

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/10469459?v=4",

"events_url": "https://api.github.com/users/yjernite/events{/privacy}",

"followers_url": "https://api.github.com/users/yjernite/followers",

"following_url": "https://api.github.com/users/yjernite/following{/other_user}",

"gists_url": "https://api.github.com/users/yjernite/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/yjernite",

"id": 10469459,

"login": "yjernite",

"node_id": "MDQ6VXNlcjEwNDY5NDU5",

"organizations_url": "https://api.github.com/users/yjernite/orgs",

"received_events_url": "https://api.github.com/users/yjernite/received_events",

"repos_url": "https://api.github.com/users/yjernite/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/yjernite/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/yjernite/subscriptions",

"type": "User",

"url": "https://api.github.com/users/yjernite"

}

|

[

{

"avatar_url": "https://avatars.githubusercontent.com/u/10469459?v=4",

"events_url": "https://api.github.com/users/yjernite/events{/privacy}",

"followers_url": "https://api.github.com/users/yjernite/followers",

"following_url": "https://api.github.com/users/yjernite/following{/other_user}",

"gists_url": "https://api.github.com/users/yjernite/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/yjernite",

"id": 10469459,

"login": "yjernite",

"node_id": "MDQ6VXNlcjEwNDY5NDU5",

"organizations_url": "https://api.github.com/users/yjernite/orgs",

"received_events_url": "https://api.github.com/users/yjernite/received_events",

"repos_url": "https://api.github.com/users/yjernite/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/yjernite/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/yjernite/subscriptions",

"type": "User",

"url": "https://api.github.com/users/yjernite"

}

] | null | 3

| 2020-06-01T00:50:36Z

| 2020-06-03T15:27:18Z

| 2020-06-03T15:27:18Z

|

NONE

| null |

It seems that the ROUGE score of `nlp` is lower than the one of `files2rouge`.

Here is a self-contained notebook to reproduce both scores : https://colab.research.google.com/drive/14EyAXValB6UzKY9x4rs_T3pyL7alpw_F?usp=sharing

---

`nlp` : (Only mid F-scores)

>rouge1 0.33508031962733364

rouge2 0.14574333776191592

rougeL 0.2321187823256159

`files2rouge` :

>Running ROUGE...

===========================

1 ROUGE-1 Average_R: 0.48873 (95%-conf.int. 0.41192 - 0.56339)

1 ROUGE-1 Average_P: 0.29010 (95%-conf.int. 0.23605 - 0.34445)

1 ROUGE-1 Average_F: 0.34761 (95%-conf.int. 0.29479 - 0.39871)

===========================

1 ROUGE-2 Average_R: 0.20280 (95%-conf.int. 0.14969 - 0.26244)

1 ROUGE-2 Average_P: 0.12772 (95%-conf.int. 0.08603 - 0.17752)

1 ROUGE-2 Average_F: 0.14798 (95%-conf.int. 0.10517 - 0.19240)

===========================

1 ROUGE-L Average_R: 0.32960 (95%-conf.int. 0.26501 - 0.39676)

1 ROUGE-L Average_P: 0.19880 (95%-conf.int. 0.15257 - 0.25136)

1 ROUGE-L Average_F: 0.23619 (95%-conf.int. 0.19073 - 0.28663)

---

When using longer predictions/gold, the difference is bigger.

**How can I reproduce the same scores as `files2rouge`?**

@lhoestq
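For reference, the precision, recall and F-score reported above can be sketched for a single prediction/reference pair as follows. This is a minimal illustration assuming lowercased whitespace tokenization and no stemming; `rouge_n` is a hypothetical helper, not the implementation used by `nlp` or `files2rouge`, and it is not a diagnosis of the gap reported above. Preprocessing choices such as tokenization or stemming can change the resulting numbers.

```python
from collections import Counter


def rouge_n(prediction: str, reference: str, n: int = 1) -> dict:
    """Precision, recall and F1 from clipped n-gram overlap (whitespace tokens)."""
    def ngrams(text):
        tokens = text.lower().split()
        return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

    pred, ref = ngrams(prediction), ngrams(reference)
    # Counter intersection keeps the minimum count of each shared n-gram.
    overlap = sum((pred & ref).values())
    precision = overlap / max(sum(pred.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    f1 = 0.0 if overlap == 0 else 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}
```

Running both tools on the same pair and comparing against a hand computation like this one can help narrow down where two implementations start to diverge.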

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/225/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/225/timeline

| null |

completed

| null | null | false

|

https://api.github.com/repos/huggingface/datasets/issues/224

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/224/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/224/comments

|

https://api.github.com/repos/huggingface/datasets/issues/224/events

|

https://github.com/huggingface/datasets/issues/224

| 627,791,693

|

MDU6SXNzdWU2Mjc3OTE2OTM=

| 224

|

[Feature Request/Help] BLEURT model -> PyTorch

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/6889910?v=4",

"events_url": "https://api.github.com/users/adamwlev/events{/privacy}",

"followers_url": "https://api.github.com/users/adamwlev/followers",

"following_url": "https://api.github.com/users/adamwlev/following{/other_user}",

"gists_url": "https://api.github.com/users/adamwlev/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/adamwlev",

"id": 6889910,

"login": "adamwlev",

"node_id": "MDQ6VXNlcjY4ODk5MTA=",

"organizations_url": "https://api.github.com/users/adamwlev/orgs",

"received_events_url": "https://api.github.com/users/adamwlev/received_events",

"repos_url": "https://api.github.com/users/adamwlev/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/adamwlev/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/adamwlev/subscriptions",

"type": "User",

"url": "https://api.github.com/users/adamwlev"

}

|

[

{

"color": "a2eeef",

"default": true,

"description": "New feature or request",

"id": 1935892871,

"name": "enhancement",

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement"

}

] |

closed

| false

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/10469459?v=4",

"events_url": "https://api.github.com/users/yjernite/events{/privacy}",

"followers_url": "https://api.github.com/users/yjernite/followers",

"following_url": "https://api.github.com/users/yjernite/following{/other_user}",

"gists_url": "https://api.github.com/users/yjernite/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/yjernite",

"id": 10469459,

"login": "yjernite",

"node_id": "MDQ6VXNlcjEwNDY5NDU5",

"organizations_url": "https://api.github.com/users/yjernite/orgs",

"received_events_url": "https://api.github.com/users/yjernite/received_events",

"repos_url": "https://api.github.com/users/yjernite/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/yjernite/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/yjernite/subscriptions",

"type": "User",

"url": "https://api.github.com/users/yjernite"

}

|

[

{

"avatar_url": "https://avatars.githubusercontent.com/u/10469459?v=4",

"events_url": "https://api.github.com/users/yjernite/events{/privacy}",

"followers_url": "https://api.github.com/users/yjernite/followers",

"following_url": "https://api.github.com/users/yjernite/following{/other_user}",

"gists_url": "https://api.github.com/users/yjernite/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/yjernite",

"id": 10469459,

"login": "yjernite",

"node_id": "MDQ6VXNlcjEwNDY5NDU5",

"organizations_url": "https://api.github.com/users/yjernite/orgs",

"received_events_url": "https://api.github.com/users/yjernite/received_events",

"repos_url": "https://api.github.com/users/yjernite/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/yjernite/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/yjernite/subscriptions",

"type": "User",

"url": "https://api.github.com/users/yjernite"

}

] | null | 6

| 2020-05-30T18:30:40Z

| 2023-08-26T17:38:48Z

| 2021-01-04T09:53:32Z

|

NONE

| null |

Hi, I am interested in porting Google Research's new BLEURT learned metric to PyTorch (because I wish to do something experimental with language generation and backpropagating through BLEURT). I noticed that you don't have it yet, so I am partly just asking if you plan to add it (@thomwolf said on Twitter that you want to do so).

I tried manually loading the checkpoint that they publish, which includes the weights. It seems like the architecture is exactly aligned with the out-of-the-box BertModel in transformers, just with a single linear layer on top of the CLS embedding. I loaded all the weights into the PyTorch model, but I am not able to get the same numbers as the BLEURT package's Python API. Here is the Colab notebook where I tried this: https://colab.research.google.com/drive/1Bfced531EvQP_CpFvxwxNl25Pj6ptylY?usp=sharing . If you have any pointers on what might be going wrong, that would be much appreciated!

Thank you muchly!

|

{

"+1": 1,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/224/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/224/timeline

| null |

completed

| null | null | false

|

https://api.github.com/repos/huggingface/datasets/issues/223

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/223/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/223/comments

|

https://api.github.com/repos/huggingface/datasets/issues/223/events

|

https://github.com/huggingface/datasets/issues/223

| 627,683,386

|

MDU6SXNzdWU2Mjc2ODMzODY=

| 223

|

[Feature request] Add FLUE dataset

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/58078086?v=4",

"events_url": "https://api.github.com/users/lbourdois/events{/privacy}",

"followers_url": "https://api.github.com/users/lbourdois/followers",

"following_url": "https://api.github.com/users/lbourdois/following{/other_user}",

"gists_url": "https://api.github.com/users/lbourdois/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lbourdois",

"id": 58078086,

"login": "lbourdois",

"node_id": "MDQ6VXNlcjU4MDc4MDg2",

"organizations_url": "https://api.github.com/users/lbourdois/orgs",

"received_events_url": "https://api.github.com/users/lbourdois/received_events",

"repos_url": "https://api.github.com/users/lbourdois/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lbourdois/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lbourdois/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lbourdois"

}

|

[

{

"color": "e99695",

"default": false,

"description": "Requesting to add a new dataset",

"id": 2067376369,

"name": "dataset request",

"node_id": "MDU6TGFiZWwyMDY3Mzc2MzY5",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20request"

}

] |

closed

| false

| null |

[] | null | 3

| 2020-05-30T08:52:15Z

| 2020-12-03T13:39:33Z

| 2020-12-03T13:39:33Z

|

NONE

| null |

Hi,

I think it would be interesting to add the FLUE dataset for francophones or anyone wishing to work on French.

In other requests, I read that you are already working on some datasets, and I was wondering if FLUE was planned.

If it is not the case, I can provide each of the cleaned FLUE datasets (in the form of a directly exploitable dataset rather than in the original xml formats which require additional processing, with the French part for cases where the dataset is based on a multilingual dataframe, etc.).

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/223/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/223/timeline

| null |

completed

| null | null | false

|

https://api.github.com/repos/huggingface/datasets/issues/222

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/222/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/222/comments

|

https://api.github.com/repos/huggingface/datasets/issues/222/events

|

https://github.com/huggingface/datasets/issues/222

| 627,586,690

|

MDU6SXNzdWU2Mjc1ODY2OTA=

| 222

|

Colab Notebook breaks when downloading the squad dataset

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/338917?v=4",

"events_url": "https://api.github.com/users/carlos-aguayo/events{/privacy}",

"followers_url": "https://api.github.com/users/carlos-aguayo/followers",

"following_url": "https://api.github.com/users/carlos-aguayo/following{/other_user}",

"gists_url": "https://api.github.com/users/carlos-aguayo/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/carlos-aguayo",

"id": 338917,

"login": "carlos-aguayo",

"node_id": "MDQ6VXNlcjMzODkxNw==",

"organizations_url": "https://api.github.com/users/carlos-aguayo/orgs",

"received_events_url": "https://api.github.com/users/carlos-aguayo/received_events",

"repos_url": "https://api.github.com/users/carlos-aguayo/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/carlos-aguayo/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/carlos-aguayo/subscriptions",

"type": "User",

"url": "https://api.github.com/users/carlos-aguayo"

}

|

[] |

closed

| false

| null |

[] | null | 6

| 2020-05-29T22:55:59Z

| 2020-06-04T00:21:05Z

| 2020-06-04T00:21:05Z

|

NONE

| null |

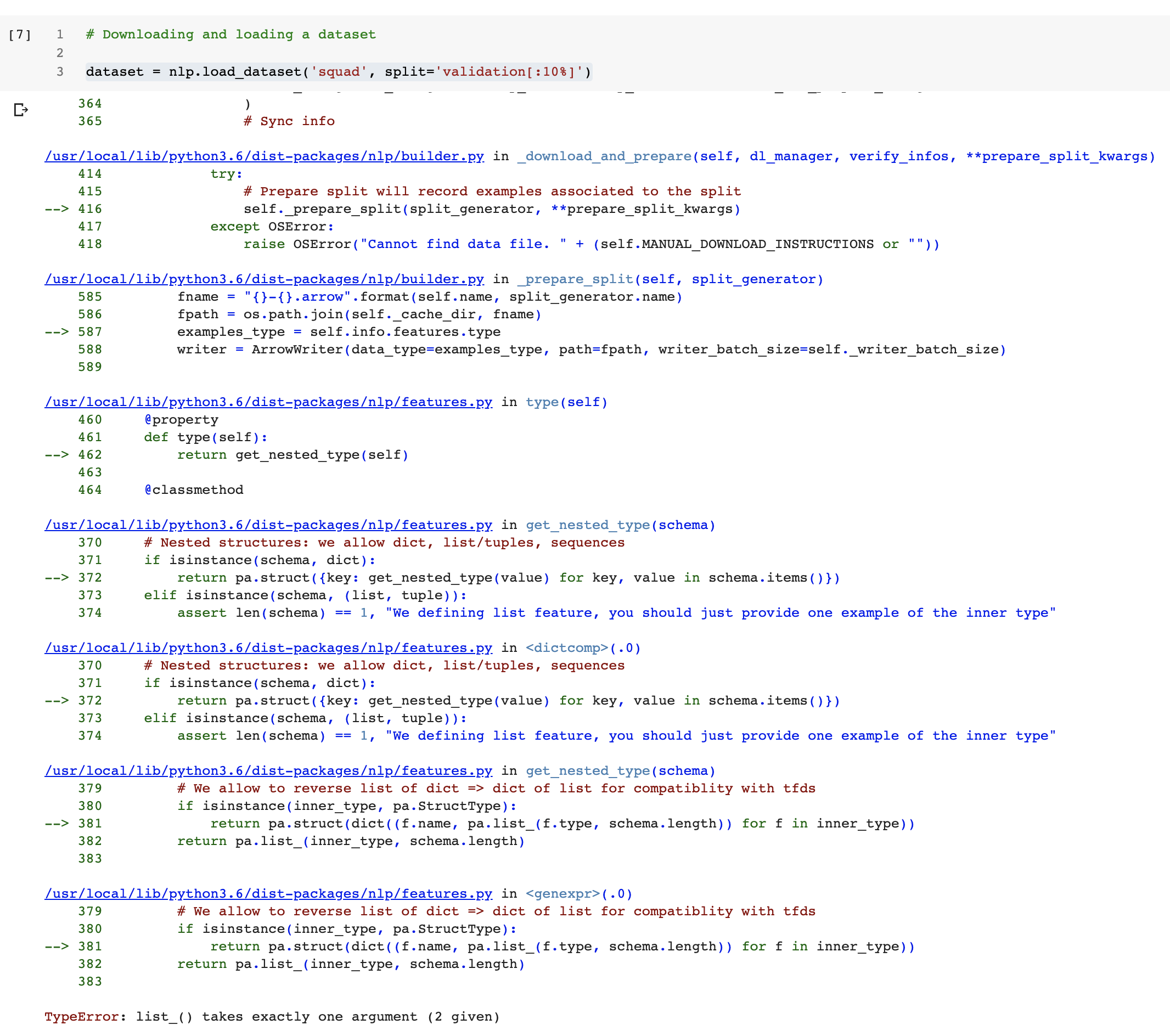

When I run the notebook in Colab

https://colab.research.google.com/github/huggingface/nlp/blob/master/notebooks/Overview.ipynb

breaks when running this cell:

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/222/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/222/timeline

| null |

completed

| null | null | false

|

https://api.github.com/repos/huggingface/datasets/issues/221

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/221/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/221/comments

|

https://api.github.com/repos/huggingface/datasets/issues/221/events

|

https://github.com/huggingface/datasets/pull/221

| 627,300,648

|

MDExOlB1bGxSZXF1ZXN0NDI1MTI5OTc0

| 221

|

Fix tests/test_dataset_common.py

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/13635495?v=4",

"events_url": "https://api.github.com/users/tayciryahmed/events{/privacy}",

"followers_url": "https://api.github.com/users/tayciryahmed/followers",

"following_url": "https://api.github.com/users/tayciryahmed/following{/other_user}",

"gists_url": "https://api.github.com/users/tayciryahmed/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/tayciryahmed",

"id": 13635495,

"login": "tayciryahmed",

"node_id": "MDQ6VXNlcjEzNjM1NDk1",

"organizations_url": "https://api.github.com/users/tayciryahmed/orgs",

"received_events_url": "https://api.github.com/users/tayciryahmed/received_events",

"repos_url": "https://api.github.com/users/tayciryahmed/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/tayciryahmed/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/tayciryahmed/subscriptions",

"type": "User",

"url": "https://api.github.com/users/tayciryahmed"

}

|

[] |

closed

| false

| null |

[] | null | 1

| 2020-05-29T14:12:15Z

| 2020-06-01T12:20:42Z

| 2020-05-29T15:02:23Z

|

CONTRIBUTOR

| null |

While working on #220, I ran the command `RUN_SLOW=1 pytest tests/test_dataset_common.py::LocalDatasetTest::test_load_real_dataset_arcd` and got the error ` unexpected keyword argument "'download_and_prepare_kwargs'"` at the level of `load_dataset`. Indeed, this [function](https://github.com/huggingface/nlp/blob/master/src/nlp/load.py#L441) no longer has the argument `download_and_prepare_kwargs`; it takes `download_config` instead. This PR changes the tests accordingly.
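A test can guard against this kind of rename by inspecting the function signature before calling it. The sketch below uses a hypothetical stand-in for `load_dataset` with only the argument names mentioned above; it is not the real `nlp` function, and `accepts_kwarg` is an illustrative helper.

```python
import inspect


def load_dataset(path, name=None, download_config=None):
    """Hypothetical stand-in with the renamed argument; not the real nlp function."""
    return {"path": path, "name": name, "download_config": download_config}


def accepts_kwarg(func, kwarg: str) -> bool:
    """True if func declares kwarg explicitly or accepts arbitrary **kwargs."""
    params = inspect.signature(func).parameters
    if kwarg in params:
        return True
    return any(p.kind is inspect.Parameter.VAR_KEYWORD for p in params.values())
```

Checking `accepts_kwarg(load_dataset, "download_config")` before building the call makes the failure mode explicit instead of surfacing as a `TypeError` deep inside a slow test.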

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/221/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/221/timeline

| null | null | 0

|

{

"diff_url": "https://github.com/huggingface/datasets/pull/221.diff",

"html_url": "https://github.com/huggingface/datasets/pull/221",

"merged_at": "2020-05-29T15:02:23Z",

"patch_url": "https://github.com/huggingface/datasets/pull/221.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/221"

}

| true

|

https://api.github.com/repos/huggingface/datasets/issues/220

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/220/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/220/comments

|

https://api.github.com/repos/huggingface/datasets/issues/220/events

|

https://github.com/huggingface/datasets/pull/220

| 627,280,683

|

MDExOlB1bGxSZXF1ZXN0NDI1MTEzMzEy

| 220

|

dataset_arcd

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/13635495?v=4",

"events_url": "https://api.github.com/users/tayciryahmed/events{/privacy}",

"followers_url": "https://api.github.com/users/tayciryahmed/followers",

"following_url": "https://api.github.com/users/tayciryahmed/following{/other_user}",

"gists_url": "https://api.github.com/users/tayciryahmed/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/tayciryahmed",

"id": 13635495,

"login": "tayciryahmed",

"node_id": "MDQ6VXNlcjEzNjM1NDk1",

"organizations_url": "https://api.github.com/users/tayciryahmed/orgs",

"received_events_url": "https://api.github.com/users/tayciryahmed/received_events",

"repos_url": "https://api.github.com/users/tayciryahmed/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/tayciryahmed/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/tayciryahmed/subscriptions",

"type": "User",

"url": "https://api.github.com/users/tayciryahmed"

}

|

[] |

closed

| false

| null |

[] | null | 2

| 2020-05-29T13:46:50Z

| 2020-05-29T14:58:40Z

| 2020-05-29T14:57:21Z

|

CONTRIBUTOR

| null |

Added Arabic Reading Comprehension Dataset (ARCD): https://arxiv.org/abs/1906.05394

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 1,

"laugh": 0,

"rocket": 0,

"total_count": 1,

"url": "https://api.github.com/repos/huggingface/datasets/issues/220/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/220/timeline

| null | null | 0

|

{

"diff_url": "https://github.com/huggingface/datasets/pull/220.diff",

"html_url": "https://github.com/huggingface/datasets/pull/220",

"merged_at": "2020-05-29T14:57:21Z",

"patch_url": "https://github.com/huggingface/datasets/pull/220.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/220"

}

| true

|

https://api.github.com/repos/huggingface/datasets/issues/219

|

https://api.github.com/repos/huggingface/datasets

|

https://api.github.com/repos/huggingface/datasets/issues/219/labels{/name}

|

https://api.github.com/repos/huggingface/datasets/issues/219/comments

|

https://api.github.com/repos/huggingface/datasets/issues/219/events

|

https://github.com/huggingface/datasets/pull/219

| 627,235,893

|

MDExOlB1bGxSZXF1ZXN0NDI1MDc2NjQx

| 219

|

force mwparserfromhell as third party

|

{

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"gravatar_id": "",

"html_url": "https://github.com/lhoestq",

"id": 42851186,

"login": "lhoestq",

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"site_admin": false,

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"type": "User",

"url": "https://api.github.com/users/lhoestq"

}

|

[] |

closed

| false

| null |

[] | null | 0

| 2020-05-29T12:33:17Z

| 2020-05-29T13:30:13Z

| 2020-05-29T13:30:12Z

|

MEMBER

| null |

This should fix your env because you had `mwparserfromhell` as a first party for `isort` @patrickvonplaten

|

{

"+1": 0,

"-1": 0,

"confused": 0,

"eyes": 0,

"heart": 0,

"hooray": 0,

"laugh": 0,

"rocket": 0,

"total_count": 0,

"url": "https://api.github.com/repos/huggingface/datasets/issues/219/reactions"

}

|

https://api.github.com/repos/huggingface/datasets/issues/219/timeline

| null | null | 0

|

{

"diff_url": "https://github.com/huggingface/datasets/pull/219.diff",

"html_url": "https://github.com/huggingface/datasets/pull/219",

"merged_at": "2020-05-29T13:30:12Z",

"patch_url": "https://github.com/huggingface/datasets/pull/219.patch",

"url": "https://api.github.com/repos/huggingface/datasets/pulls/219"

}

| true

|

https://api.github.com/repos/huggingface/datasets/issues/218

|

https://api.github.com/repos/huggingface/datasets

|