id (string, length 6) | text (string, length 20 to 17.2k) | title (string, 1 distinct value) |

|---|---|---|

174148

|

class SQLDatabase:

"""SQL Database.

This class provides a wrapper around the SQLAlchemy engine to interact with a SQL

database.

It provides methods to execute SQL commands, insert data into tables, and retrieve

information about the database schema.

It also supports optional features such as including or excluding specific tables,

sampling rows for table info,

including indexes in table info, and supporting views.

Based on langchain SQLDatabase.

https://github.com/langchain-ai/langchain/blob/e355606b1100097665207ca259de6dc548d44c78/libs/langchain/langchain/utilities/sql_database.py#L39

Args:

engine (Engine): The SQLAlchemy engine instance to use for database operations.

schema (Optional[str]): The name of the schema to use, if any.

metadata (Optional[MetaData]): The metadata instance to use, if any.

ignore_tables (Optional[List[str]]): List of table names to ignore. If set,

include_tables must be None.

include_tables (Optional[List[str]]): List of table names to include. If set,

ignore_tables must be None.

sample_rows_in_table_info (int): The number of sample rows to include in table

info.

indexes_in_table_info (bool): Whether to include indexes in table info.

custom_table_info (Optional[dict]): Custom table info to use.

view_support (bool): Whether to support views.

max_string_length (int): The maximum string length to use.

"""

def __init__(

self,

engine: Engine,

schema: Optional[str] = None,

metadata: Optional[MetaData] = None,

ignore_tables: Optional[List[str]] = None,

include_tables: Optional[List[str]] = None,

sample_rows_in_table_info: int = 3,

indexes_in_table_info: bool = False,

custom_table_info: Optional[dict] = None,

view_support: bool = False,

max_string_length: int = 300,

):

"""Initialize the wrapper around an existing SQLAlchemy engine."""

self._engine = engine

self._schema = schema

if include_tables and ignore_tables:

raise ValueError("Cannot specify both include_tables and ignore_tables")

self._inspector = inspect(self._engine)

# when view_support is True, collect views alongside tables in the set of

# all table names

self._all_tables = set(

self._inspector.get_table_names(schema=schema)

+ (self._inspector.get_view_names(schema=schema) if view_support else [])

)

self._include_tables = set(include_tables) if include_tables else set()

if self._include_tables:

missing_tables = self._include_tables - self._all_tables

if missing_tables:

raise ValueError(

f"include_tables {missing_tables} not found in database"

)

self._ignore_tables = set(ignore_tables) if ignore_tables else set()

if self._ignore_tables:

missing_tables = self._ignore_tables - self._all_tables

if missing_tables:

raise ValueError(

f"ignore_tables {missing_tables} not found in database"

)

usable_tables = self.get_usable_table_names()

self._usable_tables = set(usable_tables) if usable_tables else self._all_tables

if not isinstance(sample_rows_in_table_info, int):

raise TypeError("sample_rows_in_table_info must be an integer")

self._sample_rows_in_table_info = sample_rows_in_table_info

self._indexes_in_table_info = indexes_in_table_info

self._custom_table_info = custom_table_info

if self._custom_table_info:

if not isinstance(self._custom_table_info, dict):

raise TypeError(

"custom_table_info must be a dictionary with table names as keys and the "

"desired table info as values"

)

# only keep the tables that are also present in the database

intersection = set(self._custom_table_info).intersection(self._all_tables)

self._custom_table_info = {

table: info

for table, info in self._custom_table_info.items()

if table in intersection

}

self._max_string_length = max_string_length

self._metadata = metadata or MetaData()

# reflect views as well when view_support is True

self._metadata.reflect(

views=view_support,

bind=self._engine,

only=list(self._usable_tables),

schema=self._schema,

)

@property

def engine(self) -> Engine:

"""Return SQL Alchemy engine."""

return self._engine

@property

def metadata_obj(self) -> MetaData:

"""Return SQL Alchemy metadata."""

return self._metadata

@classmethod

def from_uri(

cls, database_uri: str, engine_args: Optional[dict] = None, **kwargs: Any

) -> "SQLDatabase":

"""Construct a SQLDatabase from a database URI."""

_engine_args = engine_args or {}

return cls(create_engine(database_uri, **_engine_args), **kwargs)

@property

def dialect(self) -> str:

"""Return string representation of dialect to use."""

return self._engine.dialect.name

def get_usable_table_names(self) -> Iterable[str]:

"""Get names of tables available."""

if self._include_tables:

return sorted(self._include_tables)

return sorted(self._all_tables - self._ignore_tables)

def get_table_columns(self, table_name: str) -> List[Any]:

"""Get table columns."""

return self._inspector.get_columns(table_name)

def get_single_table_info(self, table_name: str) -> str:

"""Get table info for a single table."""

# same logic as table_info, but with specific table names

template = "Table '{table_name}' has columns: {columns}, "

try:

# try to retrieve table comment

table_comment = self._inspector.get_table_comment(

table_name, schema=self._schema

)["text"]

if table_comment:

template += f"with comment: ({table_comment}) "

except NotImplementedError:

# get_table_comment raises NotImplementedError for a dialect that does not support comments.

pass

template += "{foreign_keys}."

columns = []

for column in self._inspector.get_columns(table_name, schema=self._schema):

if column.get("comment"):

columns.append(

f"{column['name']} ({column['type']!s}): "

f"'{column.get('comment')}'"

)

else:

columns.append(f"{column['name']} ({column['type']!s})")

column_str = ", ".join(columns)

foreign_keys = []

for foreign_key in self._inspector.get_foreign_keys(

table_name, schema=self._schema

):

foreign_keys.append(

f"{foreign_key['constrained_columns']} -> "

f"{foreign_key['referred_table']}.{foreign_key['referred_columns']}"

)

foreign_key_str = (

" and foreign keys: {}".format(", ".join(foreign_keys))

if foreign_keys

else ""

)

return template.format(

table_name=table_name, columns=column_str, foreign_keys=foreign_key_str

)

def insert_into_table(self, table_name: str, data: dict) -> None:

"""Insert data into a table."""

table = self._metadata.tables[table_name]

stmt = insert(table).values(**data)

with self._engine.begin() as connection:

connection.execute(stmt)

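def truncate_word(self, content: Any, *, length: int, suffix: str = "...") -> str:

"""

Truncate a string to at most `length` characters, cutting at the last space

before the limit and appending `suffix`. Non-string content and

non-positive lengths leave the content unchanged.

"""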

if not isinstance(content, str) or length <= 0:

return content

if len(content) <= length:

return content

return content[: length - len(suffix)].rsplit(" ", 1)[0] + suffix

def run_sql(self, command: str) -> Tuple[str, Dict]:

"""Execute a SQL statement and return the results.

If the statement returns rows, return a tuple of the stringified rows and a

dict with the keys "result" (the truncated rows) and "col_keys" (the column names).

If the statement returns no rows, return an empty string and an empty dict.

"""

with self._engine.begin() as connection:

try:

if self._schema:

command = command.replace("FROM ", f"FROM {self._schema}.")

command = command.replace("JOIN ", f"JOIN {self._schema}.")

cursor = connection.execute(text(command))

except (ProgrammingError, OperationalError) as exc:

raise NotImplementedError(

f"Statement {command!r} is invalid SQL."

) from exc

if cursor.returns_rows:

result = cursor.fetchall()

# truncate the results to the max string length

# we can't use str(result) directly because it automatically truncates long strings

truncated_results = []

for row in result:

# truncate each column, then convert the row to a tuple

truncated_row = tuple(

self.truncate_word(column, length=self._max_string_length)

for column in row

)

truncated_results.append(truncated_row)

return str(truncated_results), {

"result": truncated_results,

"col_keys": list(cursor.keys()),

}

return "", {}

| |
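The truncation rule used by `truncate_word` above can be tried in isolation. The following is a minimal standalone sketch of the same logic, written as a free function rather than a method; the sample strings are illustrative only.

```python
from typing import Any


def truncate_word(content: Any, *, length: int, suffix: str = "...") -> Any:
    """Cut a string to at most `length` characters at a word boundary."""
    # Non-strings and non-positive limits pass through untouched.
    if not isinstance(content, str) or length <= 0:
        return content
    # Strings that already fit are returned as-is.
    if len(content) <= length:
        return content
    # Reserve room for the suffix, then drop the trailing partial word.
    return content[: length - len(suffix)].rsplit(" ", 1)[0] + suffix


short = truncate_word("hello", length=12)
cut = truncate_word("hello world foo bar", length=12)
number = truncate_word(42, length=1)
```

Because the suffix length is subtracted before slicing, the returned string never exceeds `length` characters.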

174154

|

"""Pydantic output parser."""

import json

from typing import Any, List, Optional, Type

from llama_index.core.output_parsers.base import ChainableOutputParser

from llama_index.core.output_parsers.utils import extract_json_str

from llama_index.core.types import Model

PYDANTIC_FORMAT_TMPL = """

Here's a JSON schema to follow:

{schema}

Output a valid JSON object but do not repeat the schema.

"""

class PydanticOutputParser(ChainableOutputParser):

"""Pydantic Output Parser.

Args:

output_cls (BaseModel): Pydantic output class.

"""

def __init__(

self,

output_cls: Type[Model],

excluded_schema_keys_from_format: Optional[List] = None,

pydantic_format_tmpl: str = PYDANTIC_FORMAT_TMPL,

) -> None:

"""Init params."""

self._output_cls = output_cls

self._excluded_schema_keys_from_format = excluded_schema_keys_from_format or []

self._pydantic_format_tmpl = pydantic_format_tmpl

@property

def output_cls(self) -> Type[Model]:

return self._output_cls # type: ignore

@property

def format_string(self) -> str:

"""Format string."""

return self.get_format_string(escape_json=True)

def get_format_string(self, escape_json: bool = True) -> str:

"""Render the format instructions, optionally escaping braces for later str.format calls."""

schema_dict = self._output_cls.model_json_schema()

for key in self._excluded_schema_keys_from_format:

del schema_dict[key]

schema_str = json.dumps(schema_dict)

output_str = self._pydantic_format_tmpl.format(schema=schema_str)

if escape_json:

return output_str.replace("{", "{{").replace("}", "}}")

else:

return output_str

def parse(self, text: str) -> Any:

"""Extract the JSON string from the text and validate it against the output class."""

json_str = extract_json_str(text)

return self._output_cls.model_validate_json(json_str)

def format(self, query: str) -> str:

"""Format a query with structured output formatting instructions."""

return query + "\n\n" + self.get_format_string(escape_json=True)

| |
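The brace escaping in `get_format_string` matters when the rendered instructions are later embedded in another template that is passed through `str.format`. The sketch below illustrates that round trip with the standard library only; the `schema` dict is a hand-written stand-in for what a Pydantic model would produce, and `build_format_string` is a hypothetical helper, not part of the library.

```python
import json

FORMAT_TMPL = """
Here's a JSON schema to follow:
{schema}

Output a valid JSON object but do not repeat the schema.
"""


def build_format_string(schema: dict, escape_json: bool = True) -> str:
    """Render the instructions; double the braces if they will be formatted again."""
    rendered = FORMAT_TMPL.format(schema=json.dumps(schema))
    if escape_json:
        # "{" -> "{{" and "}" -> "}}" so a later str.format leaves the JSON intact.
        return rendered.replace("{", "{{").replace("}", "}}")
    return rendered


# Hand-written schema standing in for a model's JSON schema (assumption).
schema = {
    "title": "Song",
    "type": "object",
    "properties": {"name": {"type": "string"}},
}

escaped = build_format_string(schema)
raw = build_format_string(schema, escape_json=False)

# The escaped text survives a second formatting pass inside a larger template.
prompt = ("{query}\n\n" + escaped).format(query="Name a song.")
```

Without the escaping step, the second `format` call would treat the braces of the JSON schema as placeholders and fail.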

174270

|

import logging

from typing import Any, List, Optional, Tuple

from llama_index.core.base.llms.types import (

ChatMessage,

ChatResponse,

ChatResponseAsyncGen,

ChatResponseGen,

MessageRole,

)

from llama_index.core.base.response.schema import (

AsyncStreamingResponse,

StreamingResponse,

)

from llama_index.core.callbacks import CallbackManager, trace_method

from llama_index.core.chat_engine.types import (

AgentChatResponse,

BaseChatEngine,

StreamingAgentChatResponse,

ToolOutput,

)

from llama_index.core.indices.base_retriever import BaseRetriever

from llama_index.core.indices.query.schema import QueryBundle

from llama_index.core.base.llms.generic_utils import messages_to_history_str

from llama_index.core.llms.llm import LLM

from llama_index.core.memory import BaseMemory, ChatMemoryBuffer

from llama_index.core.postprocessor.types import BaseNodePostprocessor

from llama_index.core.prompts import PromptTemplate

from llama_index.core.response_synthesizers import CompactAndRefine

from llama_index.core.schema import NodeWithScore

from llama_index.core.settings import Settings

from llama_index.core.utilities.token_counting import TokenCounter

from llama_index.core.chat_engine.utils import (

get_prefix_messages_with_context,

get_response_synthesizer,

)

logger = logging.getLogger(__name__)

DEFAULT_CONTEXT_PROMPT_TEMPLATE = """

The following is a friendly conversation between a user and an AI assistant.

The assistant is talkative and provides lots of specific details from its context.

If the assistant does not know the answer to a question, it truthfully says it

does not know.

Here are the relevant documents for the context:

{context_str}

Instruction: Based on the above documents, provide a detailed answer for the user question below.

Answer "don't know" if not present in the document.

"""

DEFAULT_CONTEXT_REFINE_PROMPT_TEMPLATE = """

The following is a friendly conversation between a user and an AI assistant.

The assistant is talkative and provides lots of specific details from its context.

If the assistant does not know the answer to a question, it truthfully says it

does not know.

Here are the relevant documents for the context:

{context_msg}

Existing Answer:

{existing_answer}

Instruction: Refine the existing answer using the provided context to assist the user.

If the context isn't helpful, just repeat the existing answer and nothing more.

"""

DEFAULT_CONDENSE_PROMPT_TEMPLATE = """

Given the following conversation between a user and an AI assistant and a follow up question from user,

rephrase the follow up question to be a standalone question.

Chat History:

{chat_history}

Follow Up Input: {question}

Standalone question:"""

| |
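The condense template above takes two placeholders, `{chat_history}` and `{question}`. A minimal sketch of filling it follows; `history_to_str` is a hypothetical stand-in for the imported `messages_to_history_str`, and the `role: content` line format it produces is an assumption.

```python
from typing import List, Tuple

CONDENSE_TMPL = """
Given the following conversation between a user and an AI assistant and a follow up question from user,
rephrase the follow up question to be a standalone question.

Chat History:
{chat_history}
Follow Up Input: {question}
Standalone question:"""


def history_to_str(history: List[Tuple[str, str]]) -> str:
    """Hypothetical helper: render (role, content) pairs one per line."""
    return "\n".join(f"{role}: {content}" for role, content in history)


history = [("user", "Who wrote Dune?"), ("assistant", "Frank Herbert.")]
prompt = CONDENSE_TMPL.format(
    chat_history=history_to_str(history),
    question="When was it published?",
)
```

The template ends with `Standalone question:` so that the model's completion is the rewritten question itself.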

174279

|

from typing import Any, List, Optional

from llama_index.core.base.base_retriever import BaseRetriever

from llama_index.core.base.llms.types import (

ChatMessage,

ChatResponse,

ChatResponseAsyncGen,

ChatResponseGen,

MessageRole,

)

from llama_index.core.base.response.schema import (

StreamingResponse,

AsyncStreamingResponse,

)

from llama_index.core.callbacks import CallbackManager, trace_method

from llama_index.core.chat_engine.types import (

AgentChatResponse,

BaseChatEngine,

StreamingAgentChatResponse,

ToolOutput,

)

from llama_index.core.llms.llm import LLM

from llama_index.core.memory import BaseMemory, ChatMemoryBuffer

from llama_index.core.postprocessor.types import BaseNodePostprocessor

from llama_index.core.response_synthesizers import CompactAndRefine

from llama_index.core.schema import NodeWithScore, QueryBundle

from llama_index.core.settings import Settings

from llama_index.core.chat_engine.utils import (

get_prefix_messages_with_context,

get_response_synthesizer,

)

DEFAULT_CONTEXT_TEMPLATE = (

"Use the context information below to assist the user."

"\n--------------------\n"

"{context_str}"

"\n--------------------\n"

)

DEFAULT_REFINE_TEMPLATE = (

"Using the context below, refine the following existing answer using the provided context to assist the user.\n"

"If the context isn't helpful, just repeat the existing answer and nothing more.\n"

"\n--------------------\n"

"{context_msg}"

"\n--------------------\n"

"Existing Answer:\n"

"{existing_answer}"

"\n--------------------\n"

)

| |

174280

|

class ContextChatEngine(BaseChatEngine):

"""

Context Chat Engine.

Uses a retriever to retrieve context, sets that context in the system prompt,

and then uses an LLM to generate a response, for a fluid chat experience.

"""

def __init__(

self,

retriever: BaseRetriever,

llm: LLM,

memory: BaseMemory,

prefix_messages: List[ChatMessage],

node_postprocessors: Optional[List[BaseNodePostprocessor]] = None,

context_template: Optional[str] = None,

context_refine_template: Optional[str] = None,

callback_manager: Optional[CallbackManager] = None,

) -> None:

self._retriever = retriever

self._llm = llm

self._memory = memory

self._prefix_messages = prefix_messages

self._node_postprocessors = node_postprocessors or []

self._context_template = context_template or DEFAULT_CONTEXT_TEMPLATE

self._context_refine_template = (

context_refine_template or DEFAULT_REFINE_TEMPLATE

)

self.callback_manager = callback_manager or CallbackManager([])

for node_postprocessor in self._node_postprocessors:

node_postprocessor.callback_manager = self.callback_manager

@classmethod

def from_defaults(

cls,

retriever: BaseRetriever,

chat_history: Optional[List[ChatMessage]] = None,

memory: Optional[BaseMemory] = None,

system_prompt: Optional[str] = None,

prefix_messages: Optional[List[ChatMessage]] = None,

node_postprocessors: Optional[List[BaseNodePostprocessor]] = None,

context_template: Optional[str] = None,

context_refine_template: Optional[str] = None,

llm: Optional[LLM] = None,

**kwargs: Any,

) -> "ContextChatEngine":

"""Initialize a ContextChatEngine from default parameters."""

llm = llm or Settings.llm

chat_history = chat_history or []

memory = memory or ChatMemoryBuffer.from_defaults(

chat_history=chat_history, token_limit=llm.metadata.context_window - 256

)

if system_prompt is not None:

if prefix_messages is not None:

raise ValueError(

"Cannot specify both system_prompt and prefix_messages"

)

prefix_messages = [

ChatMessage(content=system_prompt, role=llm.metadata.system_role)

]

prefix_messages = prefix_messages or []

node_postprocessors = node_postprocessors or []

return cls(

retriever,

llm=llm,

memory=memory,

prefix_messages=prefix_messages,

node_postprocessors=node_postprocessors,

callback_manager=Settings.callback_manager,

context_template=context_template,

context_refine_template=context_refine_template,

)

def _get_nodes(self, message: str) -> List[NodeWithScore]:

"""Generate context information from a message."""

nodes = self._retriever.retrieve(message)

for postprocessor in self._node_postprocessors:

nodes = postprocessor.postprocess_nodes(

nodes, query_bundle=QueryBundle(message)

)

return nodes

async def _aget_nodes(self, message: str) -> List[NodeWithScore]:

"""Generate context information from a message."""

nodes = await self._retriever.aretrieve(message)

for postprocessor in self._node_postprocessors:

nodes = postprocessor.postprocess_nodes(

nodes, query_bundle=QueryBundle(message)

)

return nodes

def _get_response_synthesizer(

self, chat_history: List[ChatMessage], streaming: bool = False

) -> CompactAndRefine:

# Pull the system prompt from the prefix messages

system_prompt = ""

prefix_messages = self._prefix_messages

if (

len(self._prefix_messages) != 0

and self._prefix_messages[0].role == MessageRole.SYSTEM

):

system_prompt = str(self._prefix_messages[0].content)

prefix_messages = self._prefix_messages[1:]

# Get the messages for the QA and refine prompts

qa_messages = get_prefix_messages_with_context(

self._context_template,

system_prompt,

prefix_messages,

chat_history,

self._llm.metadata.system_role,

)

refine_messages = get_prefix_messages_with_context(

self._context_refine_template,

system_prompt,

prefix_messages,

chat_history,

self._llm.metadata.system_role,

)

# Get the response synthesizer

return get_response_synthesizer(

self._llm, self.callback_manager, qa_messages, refine_messages, streaming

)

@trace_method("chat")

def chat(

self,

message: str,

chat_history: Optional[List[ChatMessage]] = None,

prev_chunks: Optional[List[NodeWithScore]] = None,

) -> AgentChatResponse:

if chat_history is not None:

self._memory.set(chat_history)

# get nodes and postprocess them

nodes = self._get_nodes(message)

if len(nodes) == 0 and prev_chunks is not None:

nodes = prev_chunks

# Get the response synthesizer with dynamic prompts

chat_history = self._memory.get(

input=message,

)

synthesizer = self._get_response_synthesizer(chat_history)

response = synthesizer.synthesize(message, nodes)

user_message = ChatMessage(content=message, role=MessageRole.USER)

ai_message = ChatMessage(content=str(response), role=MessageRole.ASSISTANT)

self._memory.put(user_message)

self._memory.put(ai_message)

return AgentChatResponse(

response=str(response),

sources=[

ToolOutput(

tool_name="retriever",

content=str(nodes),

raw_input={"message": message},

raw_output=nodes,

)

],

source_nodes=nodes,

)

@trace_method("chat")

def stream_chat(

self,

message: str,

chat_history: Optional[List[ChatMessage]] = None,

prev_chunks: Optional[List[NodeWithScore]] = None,

) -> StreamingAgentChatResponse:

if chat_history is not None:

self._memory.set(chat_history)

# get nodes and postprocess them

nodes = self._get_nodes(message)

if len(nodes) == 0 and prev_chunks is not None:

nodes = prev_chunks

# Get the response synthesizer with dynamic prompts

chat_history = self._memory.get(

input=message,

)

synthesizer = self._get_response_synthesizer(chat_history, streaming=True)

response = synthesizer.synthesize(message, nodes)

assert isinstance(response, StreamingResponse)

def wrapped_gen(response: StreamingResponse) -> ChatResponseGen:

full_response = ""

for token in response.response_gen:

full_response += token

yield ChatResponse(

message=ChatMessage(

content=full_response, role=MessageRole.ASSISTANT

),

delta=token,

)

user_message = ChatMessage(content=message, role=MessageRole.USER)

ai_message = ChatMessage(content=full_response, role=MessageRole.ASSISTANT)

self._memory.put(user_message)

self._memory.put(ai_message)

return StreamingAgentChatResponse(

chat_stream=wrapped_gen(response),

sources=[

ToolOutput(

tool_name="retriever",

content=str(nodes),

raw_input={"message": message},

raw_output=nodes,

)

],

source_nodes=nodes,

is_writing_to_memory=False,

)

@trace_method("chat")

async def achat(

self,

message: str,

chat_history: Optional[List[ChatMessage]] = None,

prev_chunks: Optional[List[NodeWithScore]] = None,

) -> AgentChatResponse:

if chat_history is not None:

self._memory.set(chat_history)

# get nodes and postprocess them

nodes = await self._aget_nodes(message)

if len(nodes) == 0 and prev_chunks is not None:

nodes = prev_chunks

# Get the response synthesizer with dynamic prompts

chat_history = self._memory.get(

input=message,

)

synthesizer = self._get_response_synthesizer(chat_history)

response = await synthesizer.asynthesize(message, nodes)

user_message = ChatMessage(content=message, role=MessageRole.USER)

ai_message = ChatMessage(content=str(response), role=MessageRole.ASSISTANT)

await self._memory.aput(user_message)

await self._memory.aput(ai_message)

return AgentChatResponse(

response=str(response),

sources=[

ToolOutput(

tool_name="retriever",

content=str(nodes),

raw_input={"message": message},

raw_output=nodes,

)

],

source_nodes=nodes,

)

| |
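In `stream_chat` above, the generator both relays tokens and records the exchange in memory once the stream is exhausted. The sketch below reproduces that pattern with plain Python types; the list-based `memory` and the `(full_text, delta)` tuples are simplifications chosen for illustration, not the library's types.

```python
from typing import Iterator, List, Tuple


def wrapped_gen(
    tokens: Iterator[str], message: str, memory: List[Tuple[str, str]]
) -> Iterator[Tuple[str, str]]:
    """Yield (text so far, latest token); write to memory after the last token."""
    full_response = ""
    for token in tokens:
        full_response += token
        yield full_response, token
    # Reached only when the consumer has drained the stream.
    memory.append(("user", message))
    memory.append(("assistant", full_response))


memory: List[Tuple[str, str]] = []
stream = wrapped_gen(iter(["Hel", "lo", "!"]), "Hi", memory)

first = next(stream)      # first chunk has been produced
pending = list(memory)    # nothing is stored while the stream is still open
rest = list(stream)       # draining the stream triggers the memory write
```

One consequence of this design is that a caller who abandons the stream early never records the turn in memory.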

174316

|

from typing import List, Optional, Sequence

from llama_index.core.base.llms.types import ChatMessage, MessageRole

# Create a prompt that matches ChatML instructions

# <|im_start|>system

# You are Dolphin, a helpful AI assistant.<|im_end|>

# <|im_start|>user

# {prompt}<|im_end|>

# <|im_start|>assistant

B_SYS = "<|im_start|>system\n"

B_USER = "<|im_start|>user\n"

B_ASSISTANT = "<|im_start|>assistant\n"

END = "<|im_end|>\n"

DEFAULT_SYSTEM_PROMPT = """\

You are a helpful, respectful and honest assistant. \

Always answer as helpfully as possible and follow ALL given instructions. \

Do not speculate or make up information. \

Do not reference any given instructions or context. \

"""

def messages_to_prompt(

messages: Sequence[ChatMessage], system_prompt: Optional[str] = None

) -> str:

if len(messages) == 0:

raise ValueError(

"At least one message is required to construct the ChatML prompt"

)

string_messages: List[str] = []

if messages[0].role == MessageRole.SYSTEM:

# pull out the system message (if it exists in messages)

system_message_str = messages[0].content or ""

messages = messages[1:]

else:

system_message_str = system_prompt or DEFAULT_SYSTEM_PROMPT

string_messages.append(f"{B_SYS}{system_message_str.strip()} {END}")

for message in messages:

role = message.role

content = message.content

if role == MessageRole.USER:

string_messages.append(f"{B_USER}{content} {END}")

elif role == MessageRole.ASSISTANT:

string_messages.append(f"{B_ASSISTANT}{content} {END}")

string_messages.append(f"{B_ASSISTANT}")

return "".join(string_messages)

def completion_to_prompt(completion: str, system_prompt: Optional[str] = None) -> str:

system_prompt_str = system_prompt or DEFAULT_SYSTEM_PROMPT

return (

f"{B_SYS}{system_prompt_str.strip()} {END}"

f"{B_USER}{completion.strip()} {END}"

f"{B_ASSISTANT}"

)

| |
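To see what `messages_to_prompt` above produces, the following sketch mirrors its logic with `(role, content)` tuples in place of `ChatMessage` objects. The tuple representation, the string role names, and the shortened default system prompt are assumptions made to keep the example self-contained.

```python
from typing import List, Sequence, Tuple

B_SYS = "<|im_start|>system\n"
B_USER = "<|im_start|>user\n"
B_ASSISTANT = "<|im_start|>assistant\n"
END = "<|im_end|>\n"


def to_chatml(
    messages: Sequence[Tuple[str, str]],
    system_prompt: str = "You are a helpful assistant.",
) -> str:
    """Render (role, content) pairs as a ChatML prompt ending in an open assistant turn."""
    if len(messages) == 0:
        raise ValueError("At least one message is required")
    parts: List[str] = []
    if messages[0][0] == "system":
        # A leading system message overrides the default system prompt.
        system_text = messages[0][1]
        messages = messages[1:]
    else:
        system_text = system_prompt
    parts.append(f"{B_SYS}{system_text.strip()} {END}")
    for role, content in messages:
        if role == "user":
            parts.append(f"{B_USER}{content} {END}")
        elif role == "assistant":
            parts.append(f"{B_ASSISTANT}{content} {END}")
    # Leave the assistant turn open so the model continues from here.
    parts.append(B_ASSISTANT)
    return "".join(parts)


prompt = to_chatml([("system", "Be brief."), ("user", "Hi")])
```

As in the original, a single space is emitted before each `<|im_end|>` marker.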

174368

|

"generate_cohere_reranker_finetuning_dataset": "llama_index.finetuning",

"CohereRerankerFinetuneEngine": "llama_index.finetuning",

"MistralAIFinetuneEngine": "llama_index.finetuning",

"BaseFinetuningHandler": "llama_index.finetuning.callbacks",

"OpenAIFineTuningHandler": "llama_index.finetuning.callbacks",

"MistralAIFineTuningHandler": "llama_index.finetuning.callbacks",

"CrossEncoderFinetuneEngine": "llama_index.finetuning.cross_encoders",

"CohereRerankerFinetuneDataset": "llama_index.finetuning.rerankers",

"SingleStoreVectorStore": "llama_index.vector_stores.singlestoredb",

"QdrantVectorStore": "llama_index.vector_stores.qdrant",

"PineconeVectorStore": "llama_index.vector_stores.pinecone",

"AWSDocDbVectorStore": "llama_index.vector_stores.awsdocdb",

"DuckDBVectorStore": "llama_index.vector_stores.duckdb",

"SupabaseVectorStore": "llama_index.vector_stores.supabase",

"UpstashVectorStore": "llama_index.vector_stores.upstash",

"LanceDBVectorStore": "llama_index.vector_stores.lancedb",

"AsyncBM25Strategy": "llama_index.vector_stores.elasticsearch",

"AsyncDenseVectorStrategy": "llama_index.vector_stores.elasticsearch",

"AsyncRetrievalStrategy": "llama_index.vector_stores.elasticsearch",

"AsyncSparseVectorStrategy": "llama_index.vector_stores.elasticsearch",

"ElasticsearchStore": "llama_index.vector_stores.elasticsearch",

"PGVectorStore": "llama_index.vector_stores.postgres",

"VertexAIVectorStore": "llama_index.vector_stores.vertexaivectorsearch",

"CassandraVectorStore": "llama_index.vector_stores.cassandra",

"ZepVectorStore": "llama_index.vector_stores.zep",

"RocksetVectorStore": "llama_index.vector_stores.rocksetdb",

"MyScaleVectorStore": "llama_index.vector_stores.myscale",

"KDBAIVectorStore": "llama_index.vector_stores.kdbai",

"AlibabaCloudOpenSearchConfig": "llama_index.vector_stores.alibabacloud_opensearch",

"AlibabaCloudOpenSearchStore": "llama_index.vector_stores.alibabacloud_opensearch",

"TencentVectorDB": "llama_index.vector_stores.tencentvectordb",

"CollectionParams": "llama_index.vector_stores.tencentvectordb",

"MilvusVectorStore": "llama_index.vector_stores.milvus",

"AnalyticDBVectorStore": "llama_index.vector_stores.analyticdb",

"Neo4jVectorStore": "llama_index.vector_stores.neo4jvector",

"DeepLakeVectorStore": "llama_index.vector_stores.deeplake",

"CouchbaseVectorStore": "llama_index.vector_stores.couchbase",

"WeaviateVectorStore": "llama_index.vector_stores.weaviate",

"BaiduVectorDB": "llama_index.vector_stores.baiduvectordb",

"TableParams": "llama_index.vector_stores.baiduvectordb",

"TableField": "llama_index.vector_stores.baiduvectordb",

"TimescaleVectorStore": "llama_index.vector_stores.timescalevector",

"TablestoreVectorStore": "llama_index.vector_stores.tablestore",

"DashVectorStore": "llama_index.vector_stores.dashvector",

"JaguarVectorStore": "llama_index.vector_stores.jaguar",

"FaissVectorStore": "llama_index.vector_stores.faiss",

"AzureAISearchVectorStore": "llama_index.vector_stores.azureaisearch",

"CognitiveSearchVectorStore": "llama_index.vector_stores.azureaisearch",

"IndexManagement": "llama_index.vector_stores.azureaisearch",

"MetadataIndexFieldType": "llama_index.vector_stores.azureaisearch",

"MongoDBAtlasVectorSearch": "llama_index.vector_stores.mongodb",

"AstraDBVectorStore": "llama_index.vector_stores.astra_db",

"ChromaVectorStore": "llama_index.vector_stores.chroma",

"VearchVectorStore": "llama_index.vector_stores.vearch",

"BagelVectorStore": "llama_index.vector_stores.bagel",

"NeptuneAnalyticsVectorStore": "llama_index.vector_stores.neptune",

"ClickHouseVectorStore": "llama_index.vector_stores.clickhouse",

"TxtaiVectorStore": "llama_index.vector_stores.txtai",

"EpsillaVectorStore": "llama_index.vector_stores.epsilla",

"LanternVectorStore": "llama_index.vector_stores.lantern",

"RelytVectorStore": "llama_index.vector_stores.relyt",

"FirestoreVectorStore": "llama_index.vector_stores.firestore",

"HologresVectorStore": "llama_index.vector_stores.hologres",

"AwaDBVectorStore": "llama_index.vector_stores.awadb",

"WordliftVectorStore": "llama_index.vector_stores.wordlift",

"DatabricksVectorSearch": "llama_index.vector_stores.databricks",

"AzureCosmosDBMongoDBVectorSearch": "llama_index.vector_stores.azurecosmosmongo",

"TypesenseVectorStore": "llama_index.vector_stores.typesense",

"PGVectoRsStore": "llama_index.vector_stores.pgvecto_rs",

"OpensearchVectorStore": "llama_index.vector_stores.opensearch",

"OpensearchVectorClient": "llama_index.vector_stores.opensearch",

"TiDBVectorStore": "llama_index.vector_stores.tidbvector",

"DocArrayInMemoryVectorStore": "llama_index.vector_stores.docarray",

"DocArrayHnswVectorStore": "llama_index.vector_stores.docarray",

"DynamoDBVectorStore": "llama_index.vector_stores.dynamodb",

"ChatGPTRetrievalPluginClient": "llama_index.vector_stores.chatgpt_plugin",

"TairVectorStore": "llama_index.vector_stores.tair",

"RedisVectorStore": "llama_index.vector_stores.redis",

"set_google_config": "llama_index.vector_stores.google",

"GoogleVectorStore": "llama_index.vector_stores.google",

"VespaVectorStore": "llama_index.vector_stores.vespa",

"hybrid_template": "llama_index.vector_stores.vespa",

"MetalVectorStore": "llama_index.vector_stores.metal",

"DuckDBRetriever": "llama_index.retrievers.duckdb_retriever",

"PathwayRetriever": "llama_index.retrievers.pathway",

"AmazonKnowledgeBasesRetriever": "llama_index.retrievers.bedrock",

"MongoDBAtlasBM25Retriever": "llama_index.retrievers.mongodb_atlas_bm25_retriever",

"VideoDBRetriever": "llama_index.retrievers.videodb",

"YouRetriever": "llama_index.retrievers.you",

"DashScopeCloudIndex": "llama_index.indices.managed.dashscope",

"DashScopeCloudRetriever": "llama_index.indices.managed.dashscope",

"PostgresMLIndex": "llama_index.indices.managed.postgresml",

"PostgresMLRetriever": "llama_index.indices.managed.postgresml",

"ZillizCloudPipelineIndex": "llama_index.indices.managed.zilliz",

"ZillizCloudPipelineRetriever": "llama_index.indices.managed.zilliz",

"LlamaCloudIndex": "llama_index.indices.managed.llama_cloud",

"LlamaCloudRetriever": "llama_index.indices.managed.llama_cloud",

"ColbertIndex": "llama_index.indices.managed.colbert",

"VectaraIndex": "llama_index.indices.managed.vectara",

"VectaraRetriever": "llama_index.indices.managed.vectara",

"VectaraAutoRetriever": "llama_index.indices.managed.vectara",

"GoogleIndex": "llama_index.indices.managed.google",

"VertexAIIndex": "llama_index.indices.managed.vertexai",

"VertexAIRetriever": "llama_index.indices.managed.vertexai",

"SalesforceToolSpec": "llama_index.tools.salesforce",

"PythonFileToolSpec": "llama_index.tools.python_file",

| |
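The fragment above maps exported names to the modules that provide them. How the surrounding code consumes this mapping is not shown in the chunk; one plausible use, sketched here as an assumption, is resolving a name to its object on demand with `importlib`. The two-entry mapping uses standard-library names so the example runs without any third-party package, and `resolve` is a hypothetical helper.

```python
import importlib
from typing import Any, Dict

# Hypothetical mapping in the same "name": "module" shape, using stdlib entries.
NAME_TO_MODULE: Dict[str, str] = {
    "OrderedDict": "collections",
    "dumps": "json",
}


def resolve(name: str) -> Any:
    """Import the module registered for `name` and return the named attribute."""
    module_path = NAME_TO_MODULE.get(name)
    if module_path is None:
        raise KeyError(f"No module registered for {name!r}")
    module = importlib.import_module(module_path)
    return getattr(module, name)


ordered_dict_cls = resolve("OrderedDict")
encoded = resolve("dumps")({"a": 1})
```

Resolving lazily keeps optional integrations from being imported until a caller actually asks for them.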

174405

|

"""Response schema."""

import asyncio

from dataclasses import dataclass, field

from typing import Any, Dict, List, Optional, Union

from llama_index.core.async_utils import asyncio_run

from llama_index.core.bridge.pydantic import BaseModel

from llama_index.core.schema import NodeWithScore

from llama_index.core.types import TokenGen, TokenAsyncGen

from llama_index.core.utils import truncate_text

@dataclass

class Response:

"""Response object.

Returned if streaming=False.

Attributes:

response: The response text.

"""

response: Optional[str]

source_nodes: List[NodeWithScore] = field(default_factory=list)

metadata: Optional[Dict[str, Any]] = None

def __str__(self) -> str:

"""Convert to string representation."""

return self.response or "None"

def get_formatted_sources(self, length: int = 100) -> str:

"""Get formatted sources text."""

texts = []

for source_node in self.source_nodes:

fmt_text_chunk = truncate_text(source_node.node.get_content(), length)

doc_id = source_node.node.node_id or "None"

source_text = f"> Source (Doc id: {doc_id}): {fmt_text_chunk}"

texts.append(source_text)

return "\n\n".join(texts)

@dataclass

class PydanticResponse:

"""PydanticResponse object.

Returned if streaming=False.

Attributes:

response: The response as a Pydantic model instance.

"""

response: Optional[BaseModel]

source_nodes: List[NodeWithScore] = field(default_factory=list)

metadata: Optional[Dict[str, Any]] = None

def __str__(self) -> str:

"""Convert to string representation."""

return self.response.model_dump_json() if self.response else "None"

def __getattr__(self, name: str) -> Any:

"""Get attribute, but prioritize the pydantic response object."""

if self.response is not None and name in self.response.model_dump():

return getattr(self.response, name)

else:

return None

def __post_init_post_parse__(self) -> None:

"""This method is required.

According to the Pydantic docs, if a stdlib dataclass (which this class

is one) gets mixed with a BaseModel (in the sense that this gets used as a

Field in another BaseModel), then this stdlib dataclass will automatically

get converted to a pydantic.v1.dataclass.

However, it appears that in that automatic conversion, this method

is left as NoneType, which raises an error. To safeguard against that,

we are explicitly defining this method as something that can be called.

Sources:

- https://docs.pydantic.dev/1.10/usage/dataclasses/#use-of-stdlib-dataclasses-with-basemodel

- https://docs.pydantic.dev/1.10/usage/dataclasses/#initialize-hooks

"""

return

def get_formatted_sources(self, length: int = 100) -> str:

"""Get formatted sources text."""

texts = []

for source_node in self.source_nodes:

fmt_text_chunk = truncate_text(source_node.node.get_content(), length)

doc_id = source_node.node.node_id or "None"

source_text = f"> Source (Doc id: {doc_id}): {fmt_text_chunk}"

texts.append(source_text)

return "\n\n".join(texts)

def get_response(self) -> Response:

"""Get a standard response object."""

response_txt = self.response.model_dump_json() if self.response else "None"

return Response(response_txt, self.source_nodes, self.metadata)

@dataclass

class StreamingResponse:

"""StreamingResponse object.

Returned if streaming=True.

Attributes:

response_gen: The response generator.

"""

response_gen: TokenGen

source_nodes: List[NodeWithScore] = field(default_factory=list)

metadata: Optional[Dict[str, Any]] = None

response_txt: Optional[str] = None

def __str__(self) -> str:

"""Convert to string representation."""

if self.response_txt is None and self.response_gen is not None:

response_txt = ""

for text in self.response_gen:

response_txt += text

self.response_txt = response_txt

return self.response_txt or "None"

def get_response(self) -> Response:

"""Get a standard response object."""

if self.response_txt is None and self.response_gen is not None:

response_txt = ""

for text in self.response_gen:

response_txt += text

self.response_txt = response_txt

return Response(self.response_txt, self.source_nodes, self.metadata)

def print_response_stream(self) -> None:

"""Print the response stream."""

if self.response_txt is None and self.response_gen is not None:

response_txt = ""

for text in self.response_gen:

print(text, end="", flush=True)

response_txt += text

self.response_txt = response_txt

else:

print(self.response_txt)

def get_formatted_sources(self, length: int = 100, trim_text: bool = True) -> str:

"""Get formatted sources text."""

texts = []

for source_node in self.source_nodes:

fmt_text_chunk = source_node.node.get_content()

if trim_text:

fmt_text_chunk = truncate_text(fmt_text_chunk, length)

node_id = source_node.node.node_id or "None"

source_text = f"> Source (Node id: {node_id}): {fmt_text_chunk}"

texts.append(source_text)

return "\n\n".join(texts)

@dataclass

class AsyncStreamingResponse:

"""AsyncStreamingResponse object.

Returned if streaming=True while using async.

Attributes:

response_gen: The response async generator.

"""

response_gen: TokenAsyncGen

source_nodes: List[NodeWithScore] = field(default_factory=list)

metadata: Optional[Dict[str, Any]] = None

response_txt: Optional[str] = None

def __post_init__(self) -> None:

self._lock = asyncio.Lock()

def __str__(self) -> str:

"""Convert to string representation."""

return asyncio_run(self._async_str())

async def _async_str(self) -> str:

"""Convert to string representation."""

async for _ in self._yield_response():

...

return self.response_txt or "None"

async def _yield_response(self) -> TokenAsyncGen:

"""Yield the string response."""

async with self._lock:

if self.response_txt is None and self.response_gen is not None:

self.response_txt = ""

async for text in self.response_gen:

self.response_txt += text

yield text

else:

yield self.response_txt

async def async_response_gen(self) -> TokenAsyncGen:

"""Yield the string response."""

async for text in self._yield_response():

yield text

async def get_response(self) -> Response:

"""Get a standard response object."""

async for _ in self._yield_response():

...

return Response(self.response_txt, self.source_nodes, self.metadata)

async def print_response_stream(self) -> None:

"""Print the response stream."""

streaming = True

async for text in self._yield_response():

print(text, end="", flush=True)

# do an empty print to print on the next line again next time

print()

def get_formatted_sources(self, length: int = 100, trim_text: bool = True) -> str:

"""Get formatted sources text."""

texts = []

for source_node in self.source_nodes:

fmt_text_chunk = source_node.node.get_content()

if trim_text:

fmt_text_chunk = truncate_text(fmt_text_chunk, length)

node_id = source_node.node.node_id or "None"

source_text = f"> Source (Node id: {node_id}): {fmt_text_chunk}"

texts.append(source_text)

return "\n\n".join(texts)

RESPONSE_TYPE = Union[

Response, StreamingResponse, AsyncStreamingResponse, PydanticResponse

]

| |

174468

|

def test_nl_query_engine_parser(

patch_llm_predictor,

patch_token_text_splitter,

struct_kwargs: Tuple[Dict, Dict],

) -> None:

"""Test the sql response parser."""

index_kwargs, _ = struct_kwargs

docs = [Document(text="user_id:2,foo:bar"), Document(text="user_id:8,foo:hello")]

engine = create_engine("sqlite:///:memory:")

metadata_obj = MetaData()

table_name = "test_table"

# NOTE: table is created by tying to metadata_obj

Table(

table_name,

metadata_obj,

Column("user_id", Integer, primary_key=True),

Column("foo", String(16), nullable=False),

)

metadata_obj.create_all(engine)

sql_database = SQLDatabase(engine)

# NOTE: we can use the default output parser for this

index = SQLStructStoreIndex.from_documents(

docs,

sql_database=sql_database,

table_name=table_name,

**index_kwargs,

)

nl_query_engine = NLStructStoreQueryEngine(index)

# Response with SQLResult

response = "SELECT * FROM table; SQLResult: [(1, 'value')]"

assert nl_query_engine._parse_response_to_sql(response) == "SELECT * FROM table;"

# Response with SQLQuery

response = "SQLQuery: SELECT * FROM table;"

assert nl_query_engine._parse_response_to_sql(response) == "SELECT * FROM table;"

# Response with ```sql markdown

response = "```sql\nSELECT * FROM table;\n```"

assert nl_query_engine._parse_response_to_sql(response) == "SELECT * FROM table;"

# Response with extra text after semi-colon

response = "SELECT * FROM table; This is extra text."

assert nl_query_engine._parse_response_to_sql(response) == "SELECT * FROM table;"

# Response with escaped single quotes

response = "SELECT * FROM table WHERE name = \\'O\\'Reilly\\';"

assert (

nl_query_engine._parse_response_to_sql(response)

== "SELECT * FROM table WHERE name = ''O''Reilly'';"

)

# Response with SQLQuery prefix, ```sql markdown, escaped single quotes, and trailing SQLResult

response = "SQLQuery: ```sql\nSELECT * FROM table WHERE name = \\'O\\'Reilly\\';\n``` Extra test SQLResult: [(1, 'value')]"

assert (

nl_query_engine._parse_response_to_sql(response)

== "SELECT * FROM table WHERE name = ''O''Reilly'';"

)

| |

174545

|

from llama_index.core.node_parser.file.json import JSONNodeParser

from llama_index.core.schema import Document

def test_split_empty_text() -> None:

json_splitter = JSONNodeParser()

input_text = Document(text="")

result = json_splitter.get_nodes_from_documents([input_text])

assert result == []

def test_split_valid_json() -> None:

json_splitter = JSONNodeParser()

input_text = Document(

text='[{"name": "John", "age": 30}, {"name": "Alice", "age": 25}]'

)

result = json_splitter.get_nodes_from_documents([input_text])

assert len(result) == 2

assert result[0].text == "name John\nage 30"

assert result[1].text == "name Alice\nage 25"

def test_split_valid_json_defaults() -> None:

json_splitter = JSONNodeParser()

input_text = Document(text='[{"name": "John", "age": 30}]')

result = json_splitter.get_nodes_from_documents([input_text])

assert len(result) == 1

assert result[0].text == "name John\nage 30"

def test_split_valid_dict_json() -> None:

json_splitter = JSONNodeParser()

input_text = Document(text='{"name": "John", "age": 30}')

result = json_splitter.get_nodes_from_documents([input_text])

assert len(result) == 1

assert result[0].text == "name John\nage 30"

def test_split_invalid_json() -> None:

json_splitter = JSONNodeParser()

input_text = Document(text='{"name": "John", "age": 30,}')

result = json_splitter.get_nodes_from_documents([input_text])

assert result == []

| |

174547

|

def test_complex_md() -> None:

test_data = Document(

text="""

# Using LLMs

## Concept

Picking the proper Large Language Model (LLM) is one of the first steps you need to consider when building any LLM application over your data.

LLMs are a core component of LlamaIndex. They can be used as standalone modules or plugged into other core LlamaIndex modules (indices, retrievers, query engines). They are always used during the response synthesis step (e.g. after retrieval). Depending on the type of index being used, LLMs may also be used during index construction, insertion, and query traversal.

LlamaIndex provides a unified interface for defining LLM modules, whether it's from OpenAI, Hugging Face, or LangChain, so that you

don't have to write the boilerplate code of defining the LLM interface yourself. This interface consists of the following (more details below):

- Support for **text completion** and **chat** endpoints (details below)

- Support for **streaming** and **non-streaming** endpoints

- Support for **synchronous** and **asynchronous** endpoints

## Usage Pattern

The following code snippet shows how you can get started using LLMs.

```python

from llama_index.core.llms import OpenAI

# non-streaming

resp = OpenAI().complete("Paul Graham is ")

print(resp)

```

```{toctree}

---

maxdepth: 1

---

llms/usage_standalone.md

llms/usage_custom.md

```

## A Note on Tokenization

By default, LlamaIndex uses a global tokenizer for all token counting. This defaults to `cl100k` from tiktoken, which is the tokenizer to match the default LLM `gpt-3.5-turbo`.

If you change the LLM, you may need to update this tokenizer to ensure accurate token counts, chunking, and prompting.

The single requirement for a tokenizer is that it is a callable function, that takes a string, and returns a list.

You can set a global tokenizer like so:

```python

from llama_index.core import set_global_tokenizer

# tiktoken

import tiktoken

set_global_tokenizer(tiktoken.encoding_for_model("gpt-3.5-turbo").encode)

# huggingface

from transformers import AutoTokenizer # pants: no-infer-dep

set_global_tokenizer(

AutoTokenizer.from_pretrained("HuggingFaceH4/zephyr-7b-beta").encode

)

```

## LLM Compatibility Tracking

While LLMs are powerful, not every LLM is easy to set up. Furthermore, even with proper setup, some LLMs have trouble performing tasks that require strict instruction following.

LlamaIndex offers integrations with nearly every LLM, but it can be often unclear if the LLM will work well out of the box, or if further customization is needed.

The tables below attempt to validate the **initial** experience with various LlamaIndex features for various LLMs. These notebooks serve as a best attempt to gauge performance, as well as how much effort and tweaking is needed to get things to function properly.

Generally, paid APIs such as OpenAI or Anthropic are viewed as more reliable. However, local open-source models have been gaining popularity due to their customizability and approach to transparency.

**Contributing:** Anyone is welcome to contribute new LLMs to the documentation. Simply copy an existing notebook, setup and test your LLM, and open a PR with your results.

If you have ways to improve the setup for existing notebooks, contributions to change this are welcome!

**Legend**

- ✅ = should work fine

- ⚠️ = sometimes unreliable, may need prompt engineering to improve

- 🛑 = usually unreliable, would need prompt engineering/fine-tuning to improve

### Paid LLM APIs

| Model Name | Basic Query Engines | Router Query Engine | Sub Question Query Engine | Text2SQL | Pydantic Programs | Data Agents | <div style="width:290px">Notes</div> |

| ------------------------------------------------------------------------------------------------------------------------ | ------------------- | ------------------- | ------------------------- | -------- | ----------------- | ----------- | --------------------------------------- |

| [gpt-3.5-turbo](https://colab.research.google.com/drive/1oVqUAkn0GCBG5OCs3oMUPlNQDdpDTH_c?usp=sharing) (openai) | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | |

| [gpt-3.5-turbo-instruct](https://colab.research.google.com/drive/1DrVdx-VZ3dXwkwUVZQpacJRgX7sOa4ow?usp=sharing) (openai) | ✅ | ✅ | ✅ | ✅ | ✅ | ⚠️ | Tool usage in data-agents seems flakey. |

| [gpt-4](https://colab.research.google.com/drive/1RsBoT96esj1uDID-QE8xLrOboyHKp65L?usp=sharing) (openai) | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | |

| [claude-2](https://colab.research.google.com/drive/1os4BuDS3KcI8FCcUM_2cJma7oI2PGN7N?usp=sharing) (anthropic) | ✅ | ✅ | ✅ | ✅ | ✅ | ⚠️ | Prone to hallucinating tool inputs. |

| [claude-instant-1.2](https://colab.research.google.com/drive/1wt3Rt2OWBbqyeRYdiLfmB0_OIUOGit_D?usp=sharing) (anthropic) | ✅ | ✅ | ✅ | ✅ | ✅ | ⚠️ | Prone to hallucinating tool inputs. |

### Open Source LLMs

Since open source LLMs require large amounts of resources, the quantization is reported. Quantization is just a method for reducing the size of an LLM by shrinking the accuracy of calculations within the model. Research has shown that up to 4Bit quantization can be achieved for large LLMs without impacting performance too severely.

| Model Name | Basic Query Engines | Router Query Engine | SubQuestion Query Engine | Text2SQL | Pydantic Programs | Data Agents | <div style="width:290px">Notes</div> |

| ------------------------------------------------------------------------------------------------------------------------------------ | ------------------- | ------------------- | ------------------------ | -------- | ----------------- | ----------- | ----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

| [llama2-chat-7b 4bit](https://colab.research.google.com/drive/14N-hmJ87wZsFqHktrw40OU6sVcsiSzlQ?usp=sharing) (huggingface) | ✅ | 🛑 | 🛑 | 🛑 | 🛑 | ⚠️ | Llama2 seems to be quite chatty, which makes parsing structured outputs difficult. Fine-tuning and prompt engineering likely required for better performance on structured outputs. |

| [llama2-13b-chat](https://colab.research.google.com/drive/1S3eCZ8goKjFktF9hIakzcHqDE72g0Ggb?usp=sharing) (replicate) | ✅ | ✅ | 🛑 | ✅ | 🛑 | 🛑 | Our ReAct prompt expects structured outputs, which llama-13b struggles at |

| [llama2-70b-chat](https://colab.research.google.com/drive/1BeOuVI8StygKFTLSpZ0vGCouxar2V5UW?usp=sharing) (replicate) | ✅ | ✅ | ✅ | ✅ | 🛑 | ⚠️ | There are still some issues with parsing structured outputs, especially with pydantic programs. |

| [Mistral-7B-instruct-v0.1 4bit](https://colab.research.google.com/drive/1ZAdrabTJmZ_etDp10rjij_zME2Q3umAQ?usp=sharing) (huggingface) | ✅ | 🛑 | 🛑 | ⚠️ | ⚠️ | ⚠️ | Mistral seems slightly more reliable for structured outputs compared to Llama2. Likely with some prompt engineering, it may do b

| |

174659

|

"""Test pydantic output parser."""

import pytest

from llama_index.core.bridge.pydantic import BaseModel

from llama_index.core.output_parsers.pydantic import PydanticOutputParser

class AttrDict(BaseModel):

test_attr: str

foo: int

class TestModel(BaseModel):

__test__ = False

title: str

attr_dict: AttrDict

def test_pydantic() -> None:

"""Test pydantic output parser."""

output = """\

Here is the valid JSON:

{

"title": "TestModel",

"attr_dict": {

"test_attr": "test_attr",

"foo": 2

}

}

"""

parser = PydanticOutputParser(output_cls=TestModel)

parsed_output = parser.parse(output)

assert isinstance(parsed_output, TestModel)

assert parsed_output.title == "TestModel"

assert isinstance(parsed_output.attr_dict, AttrDict)

assert parsed_output.attr_dict.test_attr == "test_attr"

assert parsed_output.attr_dict.foo == 2

# TODO: figure out testing conditions

with pytest.raises(ValueError):

output = "hello world"

parsed_output = parser.parse(output)

def test_pydantic_format() -> None:

"""Test pydantic format."""

query = "hello world"

parser = PydanticOutputParser(output_cls=AttrDict)

formatted_query = parser.format(query)

assert "hello world" in formatted_query

| |

174805

|

"generate_cohere_reranker_finetuning_dataset": "llama_index.finetuning",

"CohereRerankerFinetuneEngine": "llama_index.finetuning",

"MistralAIFinetuneEngine": "llama_index.finetuning",

"BaseFinetuningHandler": "llama_index.finetuning.callbacks",

"OpenAIFineTuningHandler": "llama_index.finetuning.callbacks",

"MistralAIFineTuningHandler": "llama_index.finetuning.callbacks",

"CrossEncoderFinetuneEngine": "llama_index.finetuning.cross_encoders",

"CohereRerankerFinetuneDataset": "llama_index.finetuning.rerankers",

"SingleStoreVectorStore": "llama_index.vector_stores.singlestoredb",

"QdrantVectorStore": "llama_index.vector_stores.qdrant",

"PineconeVectorStore": "llama_index.vector_stores.pinecone",

"AWSDocDbVectorStore": "llama_index.vector_stores.awsdocdb",

"DuckDBVectorStore": "llama_index.vector_stores.duckdb",

"SupabaseVectorStore": "llama_index.vector_stores.supabase",

"UpstashVectorStore": "llama_index.vector_stores.upstash",

"LanceDBVectorStore": "llama_index.vector_stores.lancedb",

"AsyncBM25Strategy": "llama_index.vector_stores.elasticsearch",

"AsyncDenseVectorStrategy": "llama_index.vector_stores.elasticsearch",

"AsyncRetrievalStrategy": "llama_index.vector_stores.elasticsearch",

"AsyncSparseVectorStrategy": "llama_index.vector_stores.elasticsearch",

"ElasticsearchStore": "llama_index.vector_stores.elasticsearch",

"PGVectorStore": "llama_index.vector_stores.postgres",

"VertexAIVectorStore": "llama_index.vector_stores.vertexaivectorsearch",

"CassandraVectorStore": "llama_index.vector_stores.cassandra",

"ZepVectorStore": "llama_index.vector_stores.zep",

"RocksetVectorStore": "llama_index.vector_stores.rocksetdb",

"MyScaleVectorStore": "llama_index.vector_stores.myscale",

"KDBAIVectorStore": "llama_index.vector_stores.kdbai",

"AlibabaCloudOpenSearchConfig": "llama_index.vector_stores.alibabacloud_opensearch",

"AlibabaCloudOpenSearchStore": "llama_index.vector_stores.alibabacloud_opensearch",

"TencentVectorDB": "llama_index.vector_stores.tencentvectordb",

"CollectionParams": "llama_index.vector_stores.tencentvectordb",

"MilvusVectorStore": "llama_index.vector_stores.milvus",

"AnalyticDBVectorStore": "llama_index.vector_stores.analyticdb",

"Neo4jVectorStore": "llama_index.vector_stores.neo4jvector",

"DeepLakeVectorStore": "llama_index.vector_stores.deeplake",

"CouchbaseVectorStore": "llama_index.vector_stores.couchbase",

"WeaviateVectorStore": "llama_index.vector_stores.weaviate",

"BaiduVectorDB": "llama_index.vector_stores.baiduvectordb",

"TableParams": "llama_index.vector_stores.baiduvectordb",

"TableField": "llama_index.vector_stores.baiduvectordb",

"TimescaleVectorStore": "llama_index.vector_stores.timescalevector",

"TablestoreVectorStore": "llama_index.vector_stores.tablestore",

"DashVectorStore": "llama_index.vector_stores.dashvector",

"JaguarVectorStore": "llama_index.vector_stores.jaguar",

"FaissVectorStore": "llama_index.vector_stores.faiss",

"AzureAISearchVectorStore": "llama_index.vector_stores.azureaisearch",

"CognitiveSearchVectorStore": "llama_index.vector_stores.azureaisearch",

"IndexManagement": "llama_index.vector_stores.azureaisearch",

"MetadataIndexFieldType": "llama_index.vector_stores.azureaisearch",

"MongoDBAtlasVectorSearch": "llama_index.vector_stores.mongodb",

"AstraDBVectorStore": "llama_index.vector_stores.astra_db",

"ChromaVectorStore": "llama_index.vector_stores.chroma",

"VearchVectorStore": "llama_index.vector_stores.vearch",

"BagelVectorStore": "llama_index.vector_stores.bagel",

"NeptuneAnalyticsVectorStore": "llama_index.vector_stores.neptune",

"ClickHouseVectorStore": "llama_index.vector_stores.clickhouse",

"TxtaiVectorStore": "llama_index.vector_stores.txtai",

"EpsillaVectorStore": "llama_index.vector_stores.epsilla",

"LanternVectorStore": "llama_index.vector_stores.lantern",

"RelytVectorStore": "llama_index.vector_stores.relyt",

"FirestoreVectorStore": "llama_index.vector_stores.firestore",

"HologresVectorStore": "llama_index.vector_stores.hologres",

"AwaDBVectorStore": "llama_index.vector_stores.awadb",

"WordliftVectorStore": "llama_index.vector_stores.wordlift",

"DatabricksVectorSearch": "llama_index.vector_stores.databricks",

"AzureCosmosDBMongoDBVectorSearch": "llama_index.vector_stores.azurecosmosmongo",

"TypesenseVectorStore": "llama_index.vector_stores.typesense",

"PGVectoRsStore": "llama_index.vector_stores.pgvecto_rs",

"OpensearchVectorStore": "llama_index.vector_stores.opensearch",

"OpensearchVectorClient": "llama_index.vector_stores.opensearch",

"TiDBVectorStore": "llama_index.vector_stores.tidbvector",

"DocArrayInMemoryVectorStore": "llama_index.vector_stores.docarray",

"DocArrayHnswVectorStore": "llama_index.vector_stores.docarray",

"DynamoDBVectorStore": "llama_index.vector_stores.dynamodb",

"ChatGPTRetrievalPluginClient": "llama_index.vector_stores.chatgpt_plugin",

"TairVectorStore": "llama_index.vector_stores.tair",

"RedisVectorStore": "llama_index.vector_stores.redis",

"set_google_config": "llama_index.vector_stores.google",

"GoogleVectorStore": "llama_index.vector_stores.google",

"VespaVectorStore": "llama_index.vector_stores.vespa",

"hybrid_template": "llama_index.vector_stores.vespa",

"MetalVectorStore": "llama_index.vector_stores.metal",

"DuckDBRetriever": "llama_index.retrievers.duckdb_retriever",

"PathwayRetriever": "llama_index.retrievers.pathway",

"AmazonKnowledgeBasesRetriever": "llama_index.retrievers.bedrock",

"MongoDBAtlasBM25Retriever": "llama_index.retrievers.mongodb_atlas_bm25_retriever",

"VideoDBRetriever": "llama_index.retrievers.videodb",

"YouRetriever": "llama_index.retrievers.you",

"DashScopeCloudIndex": "llama_index.indices.managed.dashscope",

"DashScopeCloudRetriever": "llama_index.indices.managed.dashscope",

"PostgresMLIndex": "llama_index.indices.managed.postgresml",

"PostgresMLRetriever": "llama_index.indices.managed.postgresml",

"ZillizCloudPipelineIndex": "llama_index.indices.managed.zilliz",

"ZillizCloudPipelineRetriever": "llama_index.indices.managed.zilliz",

"LlamaCloudIndex": "llama_index.indices.managed.llama_cloud",

"LlamaCloudRetriever": "llama_index.indices.managed.llama_cloud",

"ColbertIndex": "llama_index.indices.managed.colbert",

"VectaraIndex": "llama_index.indices.managed.vectara",

"VectaraRetriever": "llama_index.indices.managed.vectara",

"VectaraAutoRetriever": "llama_index.indices.managed.vectara",

"GoogleIndex": "llama_index.indices.managed.google",

"VertexAIIndex": "llama_index.indices.managed.vertexai",

"VertexAIRetriever": "llama_index.indices.managed.vertexai",

"SalesforceToolSpec": "llama_index.tools.salesforce",

"PythonFileToolSpec": "llama_index.tools.python_file",

| |

174841

|

# How to work with large language models

## How large language models work

[Large language models][Large language models Blog Post] are functions that map text to text. Given an input string of text, a large language model predicts the text that should come next.

The magic of large language models is that by being trained to minimize this prediction error over vast quantities of text, the models end up learning concepts useful for these predictions. For example, they learn:

- how to spell

- how grammar works

- how to paraphrase

- how to answer questions

- how to hold a conversation

- how to write in many languages

- how to code

- etc.

They do this by “reading” a large amount of existing text and learning how words tend to appear in context with other words. They then use what they have learned to predict the next most likely word that might appear in response to a user request, and each subsequent word after that.

GPT-3 and GPT-4 power [many software products][OpenAI Customer Stories], including productivity apps, education apps, games, and more.

## How to control a large language model

Of all the inputs to a large language model, by far the most influential is the text prompt.

Large language models can be prompted to produce output in a few ways:

- **Instruction**: Tell the model what you want

- **Completion**: Induce the model to complete the beginning of what you want

- **Scenario**: Give the model a situation to play out

- **Demonstration**: Show the model what you want, with either:

- A few examples in the prompt

- Many hundreds or thousands of examples in a fine-tuning training dataset

An example of each is shown below.

### Instruction prompt example

Write your instruction at the top of the prompt (or at the bottom, or both), and the model will do its best to follow the instruction and then stop. Instructions can be detailed, so don't be afraid to write a paragraph explicitly detailing the output you want; just stay aware of how many [tokens](https://help.openai.com/en/articles/4936856-what-are-tokens-and-how-to-count-them) the model can process.

Example instruction prompt:

```text

Extract the name of the author from the quotation below.

“Some humans theorize that intelligent species go extinct before they can expand into outer space. If they're correct, then the hush of the night sky is the silence of the graveyard.”

― Ted Chiang, Exhalation

```

Output:

```text

Ted Chiang

```

### Completion prompt example

Completion-style prompts take advantage of how large language models try to write text they think is most likely to come next. To steer the model, try beginning a pattern or sentence that will be completed by the output you want to see. Relative to direct instructions, this mode of steering large language models can take more care and experimentation. In addition, the models won't necessarily know where to stop, so you will often need stop sequences or post-processing to cut off text generated beyond the desired output.

Example completion prompt:

```text

“Some humans theorize that intelligent species go extinct before they can expand into outer space. If they're correct, then the hush of the night sky is the silence of the graveyard.”

― Ted Chiang, Exhalation

The author of this quote is

```

Output:

```text

Ted Chiang

```
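
The post-processing mentioned above can be as simple as cutting the generated text at the first stop sequence. The following is a minimal sketch; the function name `truncate_at_stop`, the sample completion, and the choice of stop sequences are illustrative assumptions, not part of any API.

```python
from typing import Sequence


def truncate_at_stop(text: str, stop_sequences: Sequence[str]) -> str:
    """Return text cut at the earliest occurrence of any stop sequence."""
    cut = len(text)
    for stop in stop_sequences:
        if not stop:
            continue  # an empty stop sequence would match at index 0
        idx = text.find(stop)
        if idx != -1:
            cut = min(cut, idx)
    return text[:cut].strip()


# Hypothetical raw completion that keeps going past the desired answer.
raw_output = " Ted Chiang.\n\nThe author of the next quote is"
author = truncate_at_stop(raw_output, stop_sequences=[".", "\n"])
print(author)
```

Many completion APIs also accept stop sequences as a request parameter, which avoids generating the extra text in the first place; the function above only covers the client-side fallback.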

### Scenario prompt example

Giving the model a scenario to follow or role to play out can be helpful for complex queries or when seeking imaginative responses. When using a hypothetical prompt, you set up a situation, problem, or story, and then ask the model to respond as if it were a character in that scenario or an expert on the topic.

Example scenario prompt:

```text

Your role is to extract the name of the author from any given text

“Some humans theorize that intelligent species go extinct before they can expand into outer space. If they're correct, then the hush of the night sky is the silence of the graveyard.”

― Ted Chiang, Exhalation

```

Output:

```text

Ted Chiang

```

### Demonstration prompt example (few-shot learning)

Similar to completion-style prompts, demonstrations can show the model what you want it to do. This approach is sometimes called few-shot learning, as the model learns from a few examples provided in the prompt.

Example demonstration prompt:

```text

Quote:

“When the reasoning mind is forced to confront the impossible again and again, it has no choice but to adapt.”

― N.K. Jemisin, The Fifth Season

Author: N.K. Jemisin

Quote:

“Some humans theorize that intelligent species go extinct before they can expand into outer space. If they're correct, then the hush of the night sky is the silence of the graveyard.”

― Ted Chiang, Exhalation

Author:

```

Output:

```text

Ted Chiang

```
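
A demonstration prompt like the one above can be assembled programmatically from a list of solved examples. This is a minimal sketch; `build_few_shot_prompt` is a hypothetical helper, and the `Quote:` / `Author:` labels simply mirror the layout of the example prompt.

```python
from typing import List, Tuple


def build_few_shot_prompt(examples: List[Tuple[str, str]], query: str) -> str:
    """Format solved (quote, author) pairs followed by one unsolved quote."""
    blocks = [f"Quote:\n{quote}\nAuthor: {author}" for quote, author in examples]
    # Leave the final "Author:" open so the model fills in the answer.
    blocks.append(f"Quote:\n{query}\nAuthor:")
    return "\n\n".join(blocks)


examples = [
    (
        '"When the reasoning mind is forced to confront the impossible again '
        'and again, it has no choice but to adapt."\n'
        "- N.K. Jemisin, The Fifth Season",
        "N.K. Jemisin",
    ),
]
query = (
    '"Some humans theorize that intelligent species go extinct before they '
    'can expand into outer space."\n'
    "- Ted Chiang, Exhalation"
)
prompt = build_few_shot_prompt(examples, query)
print(prompt)
```

Keeping the examples in a list makes it easy to experiment with how many demonstrations the model needs before its output format becomes reliable.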

### Fine-tuned prompt example

With enough training examples, you can [fine-tune][Fine Tuning Docs] a custom model. In this case, instructions become unnecessary, as the model can learn the task from the training data provided. However, it can be helpful to include separator sequences (e.g., `->` or `###` or any string that doesn't commonly appear in your inputs) to tell the model when the prompt has ended and the output should begin. Without separator sequences, there is a risk that the model continues elaborating on the input text rather than starting on the answer you want to see.

Example fine-tuned prompt (for a model that has been custom trained on similar prompt-completion pairs):

```text

“Some humans theorize that intelligent species go extinct before they can expand into outer space. If they're correct, then the hush of the night sky is the silence of the graveyard.”

― Ted Chiang, Exhalation

###

```

Output:

```text

Ted Chiang

```
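
The separator only helps if it is applied identically in the training data and at inference time. The sketch below illustrates that idea; the separator value, the helper names, and the `prompt` / `completion` field names are assumptions chosen to mirror the "prompt-completion pairs" wording above, not a statement of any particular fine-tuning file format.

```python
import json

# Assumed separator: any string that rarely appears in the inputs would do.
SEPARATOR = "\n\n###\n\n"


def to_inference_prompt(input_text: str) -> str:
    """Append the separator so the model knows the prompt has ended."""
    return input_text + SEPARATOR


def to_training_record(input_text: str, answer: str) -> dict:
    """Build one training pair that uses the same separator as inference."""
    return {"prompt": to_inference_prompt(input_text), "completion": " " + answer}


quote = '"The hush of the night sky is the silence of the graveyard."\n- Ted Chiang, Exhalation'
record = to_training_record(quote, "Ted Chiang")
print(json.dumps(record))
```

Routing both paths through `to_inference_prompt` means a later change to the separator cannot make training and inference prompts drift apart.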

## Code Capabilities

Large language models aren't only great at text - they can be great at code too. OpenAI's [GPT-4][GPT-4 and GPT-4 Turbo] model is a prime example.

GPT-4 powers [numerous innovative products][OpenAI Customer Stories], including:

- [GitHub Copilot] (autocompletes code in Visual Studio and other IDEs)

- [Replit](https://replit.com/) (can complete, explain, edit and generate code)

- [Cursor](https://cursor.sh/) (build software faster in an editor designed for pair-programming with AI)

GPT-4 is more advanced than previous models like `gpt-3.5-turbo-instruct`. Even so, to get the best out of GPT-4 for coding tasks, it is still important to give clear and specific instructions, so designing good prompts for code can take extra care.

### More prompt advice

For more prompt examples, visit [OpenAI Examples][OpenAI Examples].

In general, the input prompt is the best lever for improving model outputs. You can try tricks like:

- **Be more specific.** E.g., if you want the output to be a comma separated list, ask it to return a comma separated list. If you want it to say "I don't know" when it doesn't know the answer, tell it 'Say "I don't know" if you do not know the answer.' The more specific your instructions, the better the model can respond.

- **Provide context.** Help the model understand the bigger picture of your request. This could be background information, examples/demonstrations of what you want, or an explanation of the purpose of your task.

- **Ask the model to answer as if it were an expert.** Explicitly asking the model to produce high quality output, or output as if it were written by an expert, can induce the model to give the higher quality answers that it thinks an expert would write. Phrases like "Explain in detail" or "Describe step-by-step" can be effective.

- **Prompt the model to write down the series of steps explaining its reasoning.** If understanding the 'why' behind an answer is important, prompt the model to include its reasoning. This can be done by simply adding a line like "[Let's think step by step](https://arxiv.org/abs/2205.11916)" before each answer.

[Fine Tuning Docs]: https://platform.openai.com/docs/guides/fine-tuning

[OpenAI Customer Stories]: https://openai.com/customer-stories

[Large language models Blog Post]: https://openai.com/research/better-language-models

[GitHub Copilot]: https://github.com/features/copilot/

[GPT-4 and GPT-4 Turbo]: https://platform.openai.com/docs/models/gpt-4-and-gpt-4-turbo

[GPT3 Apps Blog Post]: https://openai.com/blog/gpt-3-apps/

[OpenAI Examples]: https://platform.openai.com/examples

| |

174842

|

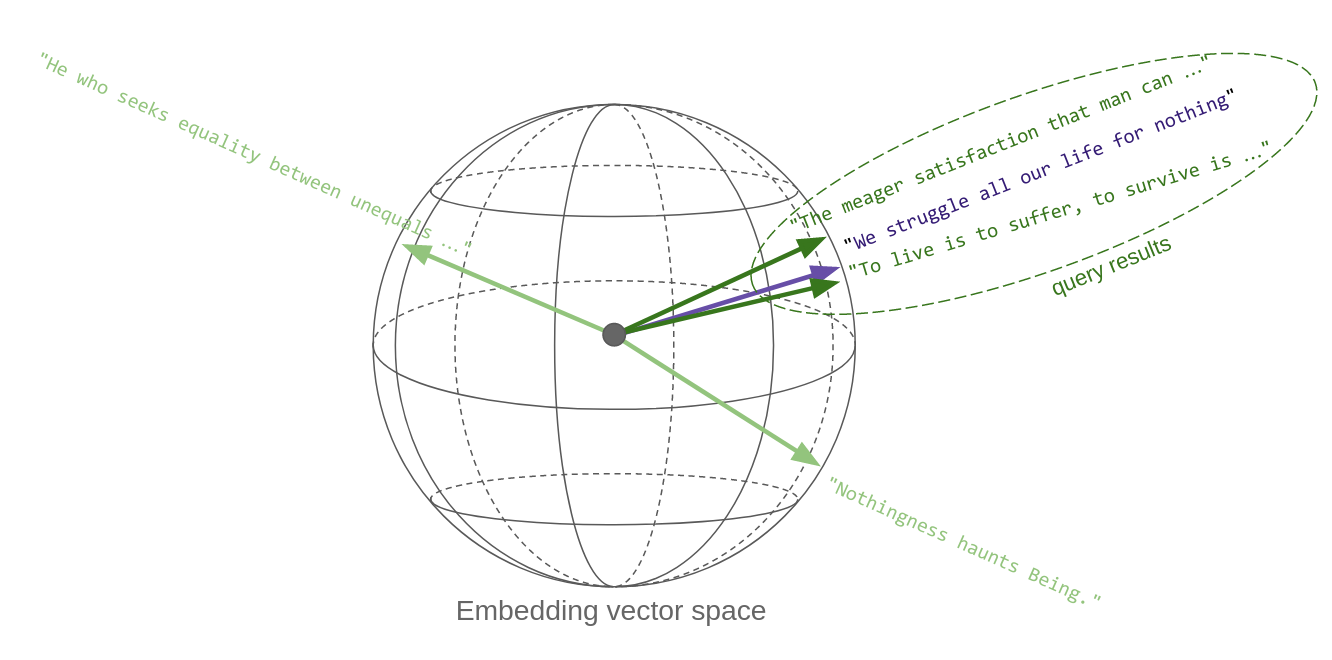

# Text comparison examples

The [OpenAI API embeddings endpoint](https://beta.openai.com/docs/guides/embeddings) can be used to measure relatedness or similarity between pieces of text.

By leveraging GPT-3's understanding of text, these embeddings [achieved state-of-the-art results](https://arxiv.org/abs/2201.10005) on benchmarks in unsupervised learning and transfer learning settings.

Embeddings can be used for semantic search, recommendations, cluster analysis, near-duplicate detection, and more.

For more information, read OpenAI's blog post announcements:

- [Introducing Text and Code Embeddings (Jan 2022)](https://openai.com/blog/introducing-text-and-code-embeddings/)

- [New and Improved Embedding Model (Dec 2022)](https://openai.com/blog/new-and-improved-embedding-model/)

For comparison with other embedding models, see the [Massive Text Embedding Benchmark (MTEB) Leaderboard](https://huggingface.co/spaces/mteb/leaderboard).

## Semantic search

Embeddings can be used for search either by themselves or as a feature in a larger system.

The simplest way to use embeddings for search is as follows:

- Before the search (precompute):

  - Split your text corpus into chunks smaller than the token limit (8,191 tokens for `text-embedding-3-small`)

  - Embed each chunk of text

  - Store those embeddings in your own database or in a vector search provider like [Pinecone](https://www.pinecone.io), [Weaviate](https://weaviate.io) or [Qdrant](https://qdrant.tech)

- At the time of the search (live compute):

  - Embed the search query

  - Find the closest embeddings in your database

  - Return the top results

An example of how to use embeddings for search is shown in [Semantic_text_search_using_embeddings.ipynb](../examples/Semantic_text_search_using_embeddings.ipynb).

In more advanced search systems, the cosine similarity of embeddings can be used as one feature among many in ranking search results.
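The precompute and live-compute steps above can be sketched in a few lines. This is a minimal illustration, not the notebook's implementation: the three-dimensional vectors are made-up stand-ins for real embeddings, the in-memory `index` list stands in for a database or vector search provider, and the helper names `cosine_similarity` and `search` are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(query_embedding, index, top_k=2):
    """Return the top_k (score, text) pairs, most similar first.

    `index` is a list of (chunk_text, embedding) pairs built ahead of time.
    """
    scored = [(cosine_similarity(query_embedding, emb), text) for text, emb in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


# Precompute: toy vectors stand in for embeddings returned by an embedding model.
index = [
    ("How to reset your password", [0.9, 0.1, 0.0]),
    ("Quarterly revenue report", [0.0, 0.2, 0.9]),
    ("Changing account credentials", [0.8, 0.3, 0.1]),
]

# Live compute: in practice the query would be embedded with the same model.
query_embedding = [1.0, 0.2, 0.0]
results = search(query_embedding, index, top_k=2)
for score, text in results:
    print(f"{score:.3f}  {text}")
```

A linear scan like this is fine for small corpora; larger ones usually call for an approximate nearest-neighbor index, which is what the vector search providers mentioned above offer.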

## Question answering

The best way to get reliably honest answers from GPT-3 is to give it source documents in which it can locate correct answers. Using the semantic search procedure above, you can cheaply search through a corpus of documents for relevant information and then give that information to GPT-3 via the prompt to answer a question. We demonstrate this in [Question_answering_using_embeddings.ipynb](../examples/Question_answering_using_embeddings.ipynb).
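As a rough sketch of the second half of that procedure, the retrieved text can be placed into the prompt ahead of the question. The function name `build_qa_prompt` and the exact instruction wording are assumptions made for illustration; the linked notebook may phrase its prompt differently.

```python
def build_qa_prompt(question, retrieved_chunks):
    """Assemble a prompt that asks the model to answer from the given context only."""
    context = "\n\n".join(retrieved_chunks)
    return (
        "Answer the question using only the context below. "
        'If the answer is not in the context, say "I don\'t know".\n\n'
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Hypothetical chunks, as if returned by a semantic search over your documents.
chunks = [
    "Passwords can be reset from the account settings page.",
    "Password reset links expire after 24 hours.",
]
prompt = build_qa_prompt("How long is a password reset link valid?", chunks)
print(prompt)
```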

## Recommendations

Recommendations are quite similar to search, except that instead of a free-form text query, the inputs are items in a set.

An example of how to use embeddings for recommendations is shown in [Recommendation_using_embeddings.ipynb](../examples/Recommendation_using_embeddings.ipynb).

As with search, the cosine similarity scores between item embeddings can either be used on their own to rank items or as features in larger ranking algorithms.
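A minimal sketch of the idea, assuming each item in the set already has an embedding: the "query" is simply another item's vector. The item names, the two-dimensional toy vectors, and the `recommend` helper are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def recommend(source_item, item_embeddings, top_k=2):
    """Rank every other item by similarity to the source item's embedding."""
    source = item_embeddings[source_item]
    others = [name for name in item_embeddings if name != source_item]
    others.sort(key=lambda name: cosine_similarity(source, item_embeddings[name]), reverse=True)
    return others[:top_k]


# Toy vectors stand in for real item embeddings.
item_embeddings = {
    "article_a": [1.0, 0.0],
    "article_b": [0.9, 0.1],
    "article_c": [0.0, 1.0],
}
recommendations = recommend("article_a", item_embeddings)
print(recommendations)
```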

## Customizing Embeddings

Although OpenAI's embedding model weights cannot be fine-tuned, you can nevertheless use training data to customize embeddings to your application.

In [Customizing_embeddings.ipynb](../examples/Customizing_embeddings.ipynb), we provide an example method for customizing your embeddings using training data. The idea is to train a custom matrix that multiplies the embedding vectors to produce new, customized embeddings. With good training data, this custom matrix will help emphasize the features relevant to your training labels. You can equivalently consider the matrix multiplication as (a) a modification of the embeddings or (b) a modification of the distance function used to measure the distances between embeddings.
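The equivalence of views (a) and (b) can be checked numerically. In the sketch below, the matrix `M` is a hand-picked stand-in for a trained matrix (no training is performed), and the vectors are toy values. View (a) transforms both embeddings and takes an ordinary dot product; view (b) leaves the embeddings alone and uses the modified inner product defined by `M^T M`.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [dot(row, v) for row in M]


def transpose(M):
    return [list(col) for col in zip(*M)]


def matmul(A, B):
    """Multiply matrix A by matrix B (both as lists of rows)."""
    B_columns = transpose(B)
    return [[dot(row, col) for col in B_columns] for row in A]


# Hypothetical "custom matrix": maps 3-dimensional embeddings to 2 dimensions.
M = [
    [2.0, 0.0, 0.0],
    [0.0, 1.0, 0.5],
]
a = [1.0, 2.0, 3.0]
b = [0.5, -1.0, 4.0]

# View (a): modify the embeddings, then compare them with a plain dot product.
view_a = dot(matvec(M, a), matvec(M, b))

# View (b): keep the embeddings, but compare them with the form a^T (M^T M) b.
G = matmul(transpose(M), M)
view_b = dot(a, matvec(G, b))

print(view_a, view_b)
```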

| |

174848

|

# Techniques to improve reliability

When GPT-3 fails on a task, what should you do?

- Search for a better prompt that elicits more reliable answers?

- Invest in thousands of examples to fine-tune a custom model?

- Assume the model is incapable of the task, and move on?

There is no simple answer; it depends. However, if your task involves logical reasoning or complexity, consider trying the techniques in this article to build more reliable, high-performing prompts.

## Why GPT-3 fails on complex tasks

If you were asked to multiply 13 by 17, would the answer pop immediately into your mind? For most of us, probably not. Yet, that doesn't mean humans are incapable of two-digit multiplication. With a few seconds, and some pen and paper, it's not too taxing to work out that 13 x 17 = 130 + 70 + 21 = 221.

Similarly, if you give GPT-3 a task that's too complex to do in the time it takes to calculate its next token, it may confabulate an incorrect guess. Yet, akin to humans, that doesn't necessarily mean the model is incapable of the task. With some time and space to reason things out, the model may still be able to answer reliably.

As an example, if you ask `gpt-3.5-turbo-instruct` the following math problem about juggling balls, it answers incorrectly:

```gpt-3.5-turbo-instruct

Q: A juggler has 16 balls. Half of the balls are golf balls and half of the golf balls are blue. How many blue golf balls are there?

A:

```

```gpt-3.5-turbo-instruct

There are 8 blue golf balls.

```

Does this mean that GPT-3 cannot do simple math problems? No; in fact, it turns out that by prompting the model with `Let's think step by step`, the model solves the problem reliably:

```gpt-3.5-turbo-instruct

Q: A juggler has 16 balls. Half of the balls are golf balls and half of the golf balls are blue. How many blue golf balls are there?

A: Let's think step by step.

```

```gpt-3.5-turbo-instruct

There are 16 balls in total.

Half of the balls are golf balls.

That means that there are 8 golf balls.

Half of the golf balls are blue.

That means that there are 4 blue golf balls.

```

Of course, it's hard to tell from only a single example whether this `Let's think step by step` trick actually works in general or just got lucky on this particular problem. But it really does work. On a benchmark of math word problems, the `Let's think step by step` trick raised GPT-3's solve rate massively, from a worthless 18% to a decent 79%!
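Applying the trick programmatically amounts to appending the phrase to the answer slot of the prompt. The sketch below only builds the prompt string and works through the arithmetic the model is being nudged to spell out; it makes no API call, and the helper name `step_by_step_prompt` is hypothetical.

```python
def step_by_step_prompt(question):
    """Format a question so the model's answer begins with a reasoning cue."""
    return f"Q: {question}\n\nA: Let's think step by step."


question = (
    "A juggler has 16 balls. Half of the balls are golf balls and half of "
    "the golf balls are blue. How many blue golf balls are there?"
)
prompt = step_by_step_prompt(question)
print(prompt)

# The intermediate steps that the step-by-step answer above writes out.
total_balls = 16
golf_balls = total_balls // 2       # half of the balls are golf balls
blue_golf_balls = golf_balls // 2   # half of the golf balls are blue
print(blue_golf_balls)
```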

## Model capabilities depend on context

When learning to work with GPT-3, one common conceptual mistake is to believe that its capabilities are fixed across all contexts. E.g., if GPT-3 gets a simple logic question wrong, then it must be incapable of simple logic.

But as the `Let's think step by step` example illustrates, apparent failures of GPT-3 can sometimes be remedied with a better prompt that helps the model steer itself toward the correct output.

## How to improve reliability on complex tasks

The rest of this article shares techniques for improving reliability of large language models on complex tasks. Although some of the techniques are specific to certain types of problems, many of them are built upon general principles that can be applied to a wide range of tasks, e.g.:

- Give clearer instructions

- Split complex tasks into simpler subtasks

- Structure the instruction to keep the model on task

- Prompt the model to explain before answering

- Ask for justifications of many possible answers, and then synthesize

- Generate many outputs, and then use the model to pick the best one

- Fine-tune custom models to maximize performance

| |

174983

|

{

"cells": [

{

"cell_type": "markdown",

"id": "dd290eb8-ad4f-461d-b5c5-64c22fc9cc24",

"metadata": {},

"source": [

"# Using Tool Required for Customer Service\n",

"\n",

"The `ChatCompletion` endpoint now includes the ability to specify whether a tool **must** be called every time, by adding `tool_choice='required'` as a parameter. \n",

"\n",

"This adds an element of determinism to how you build your wrapping application, as you can count on a tool being provided with every call. We'll demonstrate here how this can be useful for a contained flow like customer service, where having the ability to define specific exit points gives more control.\n",

"\n",